the Creative Commons Attribution 4.0 License.

Improving heat transfer predictions in heterogeneous riparian zones using transfer learning techniques

Aohan Jin

Wenguang Shi

Jun Du

Renjie Zhou

Hongbin Zhan

Yao Huang

Xuan Gu

Data-driven deep learning models offer high computational efficiency in predicting heat transfer processes in heterogeneous riparian zones. However, traditional deep learning models often suffer from reduced accuracy when data availability is limited. In this study, a novel physics-informed deep transfer learning (PDTL) approach is proposed to improve the accuracy of spatiotemporal temperature distribution predictions. The proposed PDTL model integrates the physical mechanisms described by an analytical model into a standard deep neural network (DNN) model using a transfer learning technique. To test the robustness of the proposed PDTL model, we analyze the influence of the number of observation points at different locations, streambed heterogeneity, and observation noise levels on the mean squared error (MSE) between the observed and predicted temperature fields. Results indicate that the PDTL model significantly outperforms the DNN model in scenarios with scarce training data and that the MSE values of both models decrease as the number of observation points increases. Furthermore, increasing streambed heterogeneity and observation noise levels raises the MSE values of both the PDTL and DNN models, with the PDTL model exhibiting greater robustness than the DNN model, highlighting its potential for practical applications in riparian zone management.


Understanding heat transfer processes in riparian zones is critical for evaluating physical and biochemical processes during surface water–groundwater interactions, such as contaminant transport (Elliott and Brooks, 1997; Schmidt et al., 2011), water resource management (Bukaveckas, 2007; Fleckenstein et al., 2010), and aquatic ecosystem regulation (Ren et al., 2018; Halloran et al., 2016). As a primary source of uncertainty in riparian zone modeling, the inherent heterogeneity of the streambed stands out as a pivotal factor in accurately modeling groundwater flow and heat transfer processes (Karan et al., 2014; Brunner et al., 2017). However, given the intricacies of streambed heterogeneity, data acquisition in heterogeneous riparian zones is often time-consuming and costly (Zhang et al., 2023; Kalbus et al., 2006). Consequently, achieving accurate predictions of heat transfer processes in heterogeneous riparian zones with limited observation data remains challenging.

Over the past few decades, efforts to simulate heat transfer processes in riparian zones have increased substantially; these efforts can be categorized into two groups: physics-based models and data-driven models (Barclay et al., 2023; Feigl et al., 2021; Heavilin and Neilson, 2012). Physics-based models typically employ partial differential equations, such as the convection–diffusion equation (Chen et al., 2018; Keery et al., 2007), to characterize heat transfer dynamics and to simulate and forecast temperature variations within riparian zones. The convection–diffusion equation is generally solved with one of two approaches: analytical or numerical models. Analytical models provide a precise mathematical representation of heat transfer dynamics and offer fundamental insights into physical processes within riparian zones, but their applicability is often limited to simplified and idealized scenarios (Keery et al., 2007; Bandai and Ghezzehei, 2021; Zhou and Zhang, 2022). Numerical models, which discretize the governing equations and solve them iteratively, can handle more intricate scenarios and address unsteady flows effectively (Cui and Zhu, 2018; Ren et al., 2019, 2023). Nevertheless, numerical models are constrained by uncertainties in model structure and by their need for streambed characteristic parameters (Heavilin and Neilson, 2012; Shi et al., 2023).
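For reference, one commonly used form of the 2D convection–diffusion (advection–conduction) equation for heat transport in saturated porous media is shown below. It is included only as an illustration under stated assumptions (incompressible flow, so the divergence of the Darcy flux vanishes, uniform thermal properties, and no thermal dispersion term); the symbols ρc, ρ_w c_w, and λ_e are introduced here, and the exact formulation adopted in this study is given in the Supplement.

$$\rho c \, \frac{\partial T}{\partial t} = \lambda_e \left( \frac{\partial^2 T}{\partial x^2} + \frac{\partial^2 T}{\partial z^2} \right) - \rho_w c_w \left( q_x \frac{\partial T}{\partial x} + q_z \frac{\partial T}{\partial z} \right)$$

Here T is temperature, t is time, ρc and ρ_w c_w are the volumetric heat capacities of the saturated sediment and of water, λ_e is the effective thermal conductivity of the saturated sediment (taken outside the derivatives because it is assumed uniform), and q_x and q_z are the Darcy flux components in the x and z directions. The first term on the right describes conduction, and the second describes advection by groundwater flow.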

Data-driven models, unlike physics-based models, can create a direct mapping between input and output variables without explicit knowledge of the underlying physical processes governing the system (Zhou and Zhang, 2023; Callaham et al., 2021). In recent years, data-driven models have advanced significantly and emerged as a successful alternative in hydrological and environmental modeling (Zhou et al., 2024; Cao et al., 2022; Wade et al., 2023). However, because they do not incorporate physical principles, they cannot represent explicit computational processes as physics-based models do, which limits their extrapolation capability (Read et al., 2019; Cho and Kim, 2022). Meanwhile, data-driven models typically require massive amounts of training data and, lacking physical constraints, may yield results that violate established physical laws (Read et al., 2019; Xie et al., 2022). The strengths and weaknesses of both data-driven and physics-based models are evident across various research domains (Kim et al., 2021; Wang et al., 2023). Consequently, there is a growing tendency to integrate physical processes into data-driven models, which enables these models to extract patterns and laws from both observation data and underlying physical principles (Zhao et al., 2021; Karpatne et al., 2017).

Two main strategies exist for integrating these two types of models: one employs a deep learning framework to replace either a submodule or an intermediate parameter of a physics-based model (Jiang et al., 2020; Zhao et al., 2019; Cho and Kim, 2022; Arcomano et al., 2022), and the other uses physical models to impose additional constraints or penalties on the deep learning framework (Kamrava et al., 2021; Raissi et al., 2019; Yeung et al., 2022). Additionally, transfer learning provides a feasible approach for integrating analytical and deep learning models, in which knowledge is transferred from a distinct but related source domain to improve performance in the target domain (Zhang et al., 2023; Chen et al., 2021). This approach can reduce the need for extensive training data in the target domain, which is considered a major barrier to deep learning applications. By leveraging knowledge gained by pre-trained models, it accelerates the learning process and enhances model performance (Guo et al., 2023; Jiang and Durlofsky, 2023). Recently, transfer learning has gained attention in hydrological modeling (Zhang et al., 2023; Cao et al., 2022; Chen et al., 2021; Vandaele et al., 2021; Willard et al., 2021). For example, Xiong et al. (2022) developed a long short-term memory (LSTM) model of daily dissolved inorganic nitrogen concentrations and fluxes in a coastal watershed in southeastern China. They retrained this model using multi-watershed data and successfully applied it to seven diverse watersheds through a transfer learning approach. Zhang et al. (2023) used transfer learning to integrate deep learning and analytical models for predicting groundwater flow in aquifers and obtained satisfactory prediction performance for complex scenarios.

In this study, we introduce a novel physics-informed deep transfer learning (PDTL) approach that incorporates physical information from analytical models into a deep learning framework using the transfer learning technique. The proposed PDTL model is implemented to predict the spatiotemporal temperature distribution in heterogeneous riparian zones by combining analytical solutions, deep learning models, and transfer learning. The analytical model is used to efficiently produce physically consistent temperature distributions in homogeneous riparian zones, which serve as the training data for the pre-training deep learning model. Subsequently, the weights and biases learned by the pre-training model are transferred through transfer learning to a new deep learning model for heterogeneous scenarios. By combining insights from analytical models with the approximation capability of deep learning models, the PDTL model achieves improved efficiency and performance, even with scarce observation data. This approach thus enables accurate and efficient modeling of complex heat transfer processes in heterogeneous environments.

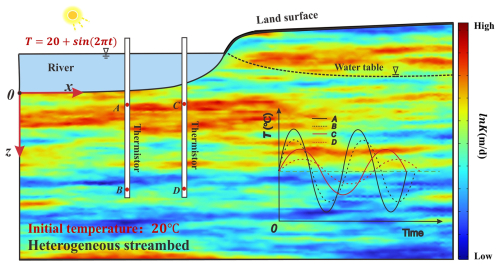

2.1 Conceptual model

The two-dimensional (2D) conceptual model of the heat transfer process in a heterogeneous streambed is depicted in Fig. 1. The origin of the coordinate system is at the center of the river, with the x axis oriented horizontally from left to right along the streambed. The z axis points vertically downward along the left inlet boundary of the system, perpendicular to the x axis. The thermal properties of the streambed are assumed to be uniform. The river has a width of 2L. Heat originates from the river, whose temperature is represented by an arbitrary function. The initial and boundary conditions are depicted in Fig. 1. An initial temperature of 20 °C is prescribed. The left, right, and bottom boundaries are all specified as no-heat-flux boundaries. On the top boundary at 0 ≤ x ≤ L (L = 0.32 m in this study), the temperature follows a sinusoidal signal ranging between 19 and 21 °C (i.e., a mean of 20 °C and an amplitude of 1 °C). Meanwhile, the top boundary at x > L is held at a constant temperature of 20 °C. Two modeling approaches are employed for this conceptual model. The analytical model provides exact solutions for heat transfer in homogeneous streambeds with uniform hydraulic conductivity and thermal properties (Shi et al., 2023) and efficiently generates physically consistent temperature distributions for the pre-training phase of the PDTL model. The numerical model extends this solution to heterogeneous streambeds with spatially variable hydraulic conductivity, accommodating the complex flow paths created by streambed heterogeneity. Details of the analytical solution for the homogeneous streambed and the numerical solution for the heterogeneous streambed are available in the Supplement.
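The top boundary condition described above can be sketched as a small Python function. This is a hypothetical illustration, not the authors' code: the function name `top_boundary_temperature`, the sine phase, and the 1 d period are assumptions (the text gives only the 19–21 °C range and L = 0.32 m), with x in metres and t in days.

```python
import math

# Hypothetical sketch of the top boundary condition (z = 0).
# Assumptions: sine with zero phase and a period of 1 d; the source gives
# only the 19-21 °C range of the river signal and L = 0.32 m.
L_HALF_WIDTH = 0.32  # m, half-width L of the river
T_MEAN = 20.0        # °C, initial temperature and mean river temperature
AMPLITUDE = 1.0      # °C, yields the 19-21 °C range


def top_boundary_temperature(x: float, t: float, period: float = 1.0) -> float:
    """Temperature (°C) on the top boundary at position x (m) and time t (d)."""
    if 0.0 <= x <= L_HALF_WIDTH:
        # River segment: sinusoidal signal oscillating around 20 °C.
        return T_MEAN + AMPLITUDE * math.sin(2.0 * math.pi * t / period)
    # Streambed surface beyond the river (x > L): constant 20 °C.
    return T_MEAN
```

Under these assumptions, a point inside the river segment reaches the 21 °C peak a quarter period after t = 0, while points beyond x = L remain at 20 °C.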

2.2 Deep neural network (DNN)

The deep neural network (DNN) is a multi-layer feed-forward network with an input layer, multiple hidden layers, and an output layer. Its parameters are trained with the backpropagation algorithm, which propagates the output error backward through the network to compute the gradients used to minimize that error and has proven crucial for convergence (Jin et al., 2024). Assuming m hidden layers and denoting the input and output vectors by X and O, respectively, the forward equations of the DNN model can be written as follows:

$$H_1 = \tanh\left(W_1 X + b_1\right)$$

$$H_i = \tanh\left(W_i H_{i-1} + b_i\right), \quad i = 2, \ldots, m$$

$$O = W_{m+1} H_m + b_{m+1}$$

where Hi represents the output of the ith hidden layer, W and b represent the weight matrices and bias vectors, respectively, and tanh is the hyperbolic tangent activation function. W and b are collectively denoted by the parameter set θ. To remove the effect of differing magnitudes among variables during training, the temperature field dataset is normalized in the pre-training process through the following equation:

$$D^{*} = \frac{D - D_{\max}^{\,0} \cdot 0 - D_{\min}}{D_{\max} - D_{\min}}$$

where D denotes the data used in the DNN model, D* denotes the normalized data, and Dmax and Dmin denote the maximum and minimum values, which are computed from the training samples only. The same values of Dmax and Dmin are used to normalize the testing samples to prevent data leakage (Zuo et al., 2020).
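A minimal NumPy sketch of the forward equations and the train-only min–max normalization is given below. It assumes the architecture stated in Sect. 3.1 (three inputs x, z, and t; six tanh hidden layers of 16 neurons; one linear output) and uses synthetic placeholder inputs; the helper names (`init_params`, `forward`, `fit_minmax`, `apply_minmax`) are hypothetical, and the initialization scheme is an assumption. Samples are stored as rows, so each layer is written as H @ W + b, the row-vector equivalent of applying W to a column vector.

```python
import numpy as np


def init_params(layer_sizes, rng):
    """Return a list of (W, b) pairs; scaled-normal initialization is an assumption."""
    return [(rng.normal(0.0, np.sqrt(1.0 / n_in), size=(n_in, n_out)), np.zeros(n_out))
            for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])]


def forward(params, X):
    """tanh hidden layers followed by a linear output layer (row-vector convention)."""
    H = X
    for W, b in params[:-1]:
        H = np.tanh(H @ W + b)
    W_out, b_out = params[-1]
    return H @ W_out + b_out


def fit_minmax(train):
    """Column-wise minimum and maximum, computed from the training samples only."""
    return train.min(axis=0), train.max(axis=0)


def apply_minmax(data, d_min, d_max):
    """(D - Dmin) / (Dmax - Dmin) using statistics supplied by the caller."""
    return (data - d_min) / (d_max - d_min)


rng = np.random.default_rng(0)
# Synthetic placeholder inputs (x, z, t); not data from the study.
X_train = rng.uniform(0.0, 1.0, size=(200, 3))
X_test = rng.uniform(0.0, 1.2, size=(50, 3))

x_min, x_max = fit_minmax(X_train)             # training statistics only
X_train_n = apply_minmax(X_train, x_min, x_max)
X_test_n = apply_minmax(X_test, x_min, x_max)  # reuse them: no test-set leakage

params = init_params([3] + [16] * 6 + [1], rng)
T_hat = forward(params, X_train_n)             # shape (200, 1)
```

The same two normalization helpers apply unchanged to the temperature targets; test values may fall slightly outside [0, 1], which is the expected consequence of not refitting the statistics on test data.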

2.3 Deep collocation method

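The collocation technique uses a set of randomly distributed points to minimize the loss function while satisfying a specified set of constraints. This method is relatively robust against instabilities such as exploding or vanishing gradients in DNNs, offering a feasible strategy for training them (Guo et al., 2023). By incorporating the collocation points throughout the model domain, along with the physical principles of the boundary and initial conditions, the learning process aims to minimize the following loss function:

$$L(\theta) = \xi_D \, \mathrm{MSE}_D + \xi_{BC} \, \mathrm{MSE}_{BC} + \xi_{IC} \, \mathrm{MSE}_{IC}$$

$$\mathrm{MSE}_D = \frac{1}{N_D} \sum_{i=1}^{N_D} \left[ \hat{T}(x_i, z_i, t_i; \theta) - T(x_i, z_i, t_i) \right]^2$$

$$\mathrm{MSE}_{BC} = \frac{1}{N_{BC}} \sum_{i=1}^{N_{BC}} \left[ \hat{T}(x_i, z_i, t_i; \theta) - T(x_i, z_i, t_i) \right]^2 \quad \text{for boundary points } (x_i, z_i)$$

$$\mathrm{MSE}_{IC} = \frac{1}{N_{IC}} \sum_{i=1}^{N_{IC}} \left[ \hat{T}(x_i, z_i, 0; \theta) - T(x_i, z_i, 0) \right]^2$$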

where L(θ) refers to the total loss; $\mathrm{MSE}_D$, $\mathrm{MSE}_{BC}$, and $\mathrm{MSE}_{IC}$ represent the mean squared error (MSE) over the model domain, the boundary conditions, and the initial conditions, respectively; $N_D$, $N_{BC}$, and $N_{IC}$ are the numbers of data points for each term; and ξD, ξBC, and ξIC are scaling factors that balance the loss terms. Following He and Tartakovsky (2021), ξD, ξBC, and ξIC are set to 1, 10, and 10, respectively. $\hat{T}(x, z, t; \theta)$ represents the temperature estimated by the DNN model, and $T(x, z, t)$ is the solution of the 2D analytical model subject to the boundary conditions and the initial condition $T(x, z, 0)$. The objective is to find the parameter set θ that minimizes the loss function. L(θ) incorporates the constraints of physical principles and imposes penalties on deviations from the initial and boundary conditions. This allows the neural network to learn the underlying physical principles and the boundary and initial conditions rather than simply memorizing the training data, thus improving efficiency and accuracy (He and Tartakovsky, 2021; Raissi et al., 2020; Tartakovsky et al., 2020).
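The weighted loss can be sketched in NumPy as follows. This assumes each term is a plain mean squared misfit between network predictions and reference (analytical) temperatures at the corresponding collocation, boundary, and initial points; the function names are hypothetical, and the authors' implementation may differ. Only the weights 1, 10, and 10 come from the text.

```python
import numpy as np

# Scaling factors from the text (He and Tartakovsky, 2021).
XI_D, XI_BC, XI_IC = 1.0, 10.0, 10.0


def mse(pred, target):
    """Mean squared error between predictions and reference values."""
    pred = np.asarray(pred, dtype=float)
    target = np.asarray(target, dtype=float)
    return float(np.mean((pred - target) ** 2))


def total_loss(pred_d, ref_d, pred_bc, ref_bc, pred_ic, ref_ic,
               xi_d=XI_D, xi_bc=XI_BC, xi_ic=XI_IC):
    """L(theta) = xi_D * MSE_D + xi_BC * MSE_BC + xi_IC * MSE_IC."""
    return (xi_d * mse(pred_d, ref_d)
            + xi_bc * mse(pred_bc, ref_bc)
            + xi_ic * mse(pred_ic, ref_ic))


# Toy values in °C: domain misfit 0.01, boundary misfit 0.04, initial misfit 0.
loss = total_loss([20.1, 19.9], [20.0, 20.0],
                  [20.0], [20.2],
                  [20.0, 20.0], [20.0, 20.0])
```

Here the loss is approximately 0.41: the boundary term dominates because its 0.04 misfit is multiplied by 10, which is how the larger weights push the network to honor boundary and initial conditions.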

2.4 Transfer learning

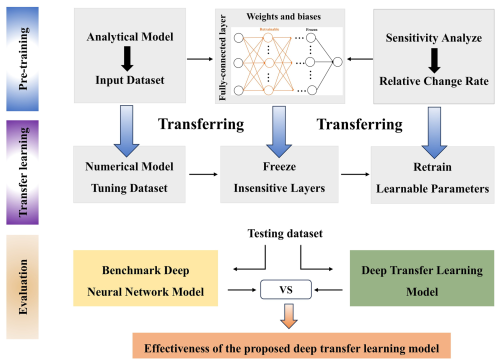

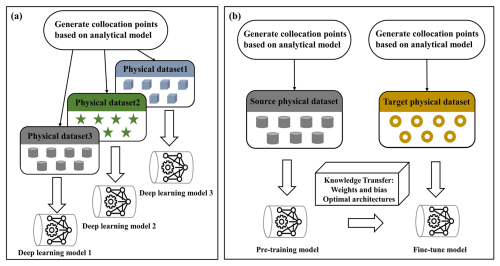

As depicted in Fig. 2, in traditional deep learning each model is assigned to a distinct learning task and must be trained independently from scratch, leading to high computational demands and the need for substantial amounts of training data for each task. In contrast, transfer learning offers an efficient alternative. By fine-tuning a pre-trained model to predict heat transfer in heterogeneous streambeds, transfer learning can significantly reduce the computational burden and the need for large datasets. This is achieved by leveraging the knowledge gained in the source domain and applying it to the target domain, thereby accelerating the learning process and improving model performance.

Figure 2. Schematic diagram of the pre-training and fine-tuning methods in the transfer learning model (adapted from Guo et al., 2023). (a) Traditional machine learning method. (b) Transfer learning method.

The transfer learning technique involves training a model to establish a mapping between the input vector X and the observed data O from a target dataset $\mathcal{D}_T = \{(X_T, O_T) \mid X_T \in X, O_T \in O\}$. It assumes that the source and target tasks share similar parameters or similar prior distributions of the hyperparameters. The pre-training model is established using the dataset from the source task, $\mathcal{D}_S = \{(X_S, O_S) \mid X_S \in X, O_S \in O\}$, which is generated through analytical or numerical models. In this study, the source and target datasets are spatiotemporal distributions of temperature fields in homogeneous and heterogeneous streambeds, respectively. The analytical model developed by Shi et al. (2023) is employed to provide the training dataset for pre-training. Because no analytical solution is available for the heterogeneous streambed, numerical models are employed to generate the fine-tuning dataset and serve as benchmarks for evaluating the performance of the proposed PDTL model. The parameter set θT of the fine-tuning model is obtained by minimizing the following loss function:

$$\theta_T = \arg\min_{\theta} \frac{1}{n^{*}} \sum_{i=1}^{n^{*}} \left[ f_t(X_{T,i}; \theta) - O_{T,i} \right]^2$$

where n∗ denotes the number of training samples used to fine-tune the pre-training model and ft(·) denotes the predictive function of the fine-tuning model.

The flowchart of the proposed framework is summarized in Fig. 3. First, the PDTL model is developed by generating an input dataset with the analytical model for heat transfer in homogeneous streambeds. This dataset is used to pre-train a DNN model with physical constraints, which learns the weights and biases of the fully connected layers. Next, observation-point data from the corresponding numerical model for heat transfer in heterogeneous streambeds are used to fine-tune the pre-trained DNN model: the learned insensitive layers are transferred with their weights and biases frozen, and the learnable parameters of the remaining layers are retrained. Finally, the effectiveness of the PDTL model is evaluated by comparing it with a traditional DNN model for different numbers of observation points, which tests the model's ability to predict the spatiotemporal temperature distribution in heterogeneous streambeds.
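The freeze-and-fine-tune step can be illustrated with a small, self-contained NumPy sketch. It is not the authors' implementation: plain full-batch gradient descent with manual backpropagation stands in for the optimizer used in the study, the randomly initialized "pre-trained" weights and synthetic targets are placeholders, and the helper names are hypothetical. Following the layer choice reported in Sect. 3.1, hidden layers 1–3 (0-based indices 0–2) are trainable, and every other layer, including the output layer, keeps its transferred values.

```python
import numpy as np


def init_params(sizes, rng):
    """List of (W, b) pairs for a fully connected network (initialization is an assumption)."""
    return [(rng.normal(0.0, np.sqrt(1.0 / m), (m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]


def forward(params, X):
    """Return the activations of every layer: input first, linear output last."""
    acts = [X]
    for k, (W, b) in enumerate(params):
        Z = acts[-1] @ W + b
        acts.append(Z if k == len(params) - 1 else np.tanh(Z))
    return acts


def mse_loss(params, X, Y):
    return float(np.mean((forward(params, X)[-1] - Y) ** 2))


def mse_grads(params, X, Y):
    """Backpropagated gradients of mean((f(X) - Y)**2) for every (W, b) pair."""
    acts = forward(params, X)
    delta = 2.0 * (acts[-1] - Y) / Y.size       # dLoss/dZ at the linear output layer
    grads = [None] * len(params)
    for k in range(len(params) - 1, -1, -1):
        W, _ = params[k]
        grads[k] = (acts[k].T @ delta, delta.sum(axis=0))
        if k > 0:                               # propagate through tanh: 1 - tanh(z)**2
            delta = (delta @ W.T) * (1.0 - acts[k] ** 2)
    return grads


def fine_tune(pretrained, X, Y, trainable, lr=0.01, epochs=200):
    """Copy the transferred parameters and update only the layers listed in `trainable`."""
    params = [(W.copy(), b.copy()) for W, b in pretrained]
    for _ in range(epochs):
        grads = mse_grads(params, X, Y)
        for k in trainable:                     # frozen layers are never touched
            W, b = params[k]
            gW, gb = grads[k]
            params[k] = (W - lr * gW, b - lr * gb)
    return params


rng = np.random.default_rng(1)
pretrained = init_params([3] + [16] * 6 + [1], rng)   # placeholder "pre-trained" weights
X_obs = rng.uniform(0.0, 1.0, (40, 3))                # synthetic normalized (x, z, t)
Y_obs = np.sin(X_obs.sum(axis=1, keepdims=True))      # synthetic targets, not study data
tuned = fine_tune(pretrained, X_obs, Y_obs, trainable=(0, 1, 2))
```

Freezing is expressed simply by never applying updates to the frozen indices, so the transferred parameters of those layers stay bit-for-bit identical while the trainable layers adapt to the new observations.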

3.1 Pre-training process

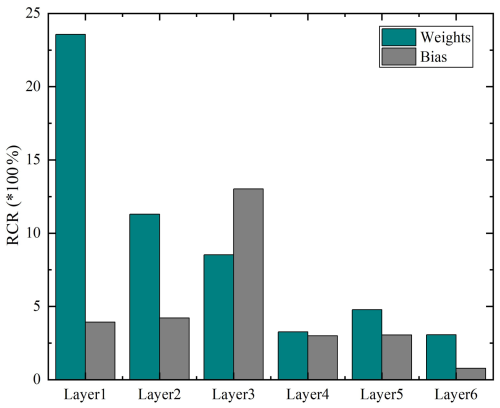

In this study, the pre-training model is a DNN model with six hidden layers, each containing 16 neurons. To evaluate the sensitivity of the weights and biases to hydraulic conductivity and to identify which layers should be trainable or remain frozen, two pre-training models with identical structures but different hydraulic conductivities are constructed. Both datasets consist of a 100×100 grid with 100 time steps generated by the 2D analytical model; 80 % of each dataset is used for training and the remaining 20 % for testing. The parameters qx and qz depend on the hydraulic conductivities in the x and z directions, and a different pair of qx and qz values is assigned to each of the two models in this section. The input data consist of the spatial location (x, z) and time t, and the output data consist of the corresponding temperature. Results indicate that the predictions of both pre-training models closely match the analytical model. Following Hu et al. (2020) and Zhang et al. (2023), the difference in weights and biases between the two pre-training models is evaluated using the relative change rate (RCR):

$$\mathrm{RCR} = \frac{1}{I} \sum_{i=1}^{I} \left| \frac{\theta_{2i} - \theta_{1i}}{\theta_{1i}} \right|$$

where θ1i and θ2i are the ith elements of the corresponding parameter matrices of the two pre-training models, and I is the number of elements in the parameter matrix. For better comparability and credibility, each of the two pre-training models is trained 20 times. Figure 4 presents the average RCR of the weights and biases across all layers for the two pre-training models over the 20 trials. The RCR of the biases remains stable across all layers except layer 3. In contrast, variations in the weights are more prominent, particularly in layers 1, 2, and 3, which underscores the heightened sensitivity of these layers to hydraulic conductivity. Consequently, layers 1, 2, and 3 of the pre-training models are set as trainable, while the remaining layers are frozen in the following analysis. The convergence criteria for the training phase are a maximum of 3000 iterations and a minimum gradient-change threshold (Zhang et al., 2023; Wang et al., 2021).
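The RCR comparison can be sketched as below, assuming RCR is the mean, over the I elements of a parameter matrix, of |θ2i − θ1i| / |θ1i|. The small `eps` guard against division by zero (e.g., for zero-valued biases) and the helper `most_sensitive_layers`, which simply picks the layers with the largest average weight RCR, are illustrative assumptions; in the study the trainable layers were chosen by inspecting Fig. 4.

```python
import numpy as np


def relative_change_rate(theta1, theta2, eps=1e-12):
    """Mean over all elements of |theta2 - theta1| / |theta1| (eps avoids division by zero)."""
    theta1 = np.asarray(theta1, dtype=float).ravel()
    theta2 = np.asarray(theta2, dtype=float).ravel()
    return float(np.mean(np.abs(theta2 - theta1) / (np.abs(theta1) + eps)))


def average_rcr(trials_1, trials_2):
    """Average RCR over repeated trainings (e.g., 20 trials) of one layer's parameters."""
    return float(np.mean([relative_change_rate(a, b) for a, b in zip(trials_1, trials_2)]))


def most_sensitive_layers(rcr_by_layer, n_trainable=3):
    """Hypothetical rule: 1-based indices of the layers with the largest weight RCR."""
    order = np.argsort(rcr_by_layer)[::-1][:n_trainable]
    return sorted(int(i) + 1 for i in order)


print(relative_change_rate([[1.0, 2.0]], [[1.5, 1.0]]))   # about 0.5
print(most_sensitive_layers([0.9, 0.8, 0.7, 0.1, 0.2, 0.1]))  # [1, 2, 3]
```

Because RCR is relative to the first model's values, layers whose parameters shift most when only the hydraulic conductivity changes rank highest and become the natural candidates for retraining.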

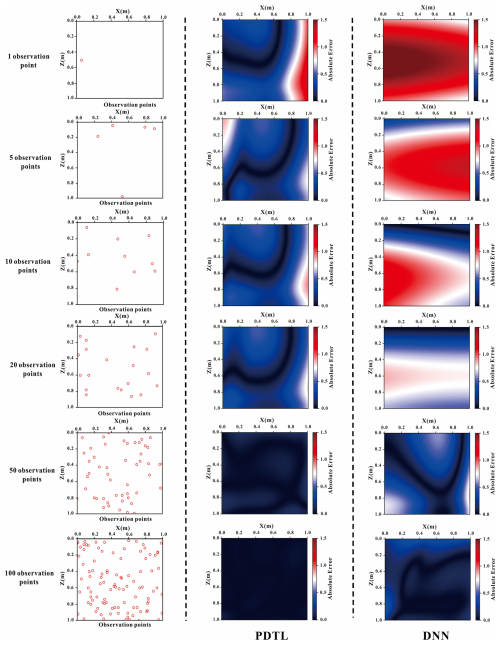

3.2 Spatial and temporal performance for the homogeneous scenario

The spatiotemporal distribution of temperature in homogeneous streambeds is obtained from the analytical model. In this study, we use 1, 5, 10, 20, 50, and 100 observation points, each with 100 time steps. The hydrological parameters are set to fixed values, and all other parameters are consistent with those presented in Fig. 1. The temperature data from these observation points are used as training data for the PDTL and DNN models. The reference temperature field at 0.5 d (i.e., the 50th time step) is used as testing data. Figure 5 illustrates the absolute errors of the PDTL and DNN models for the homogeneous riparian zone. The results suggest that the PDTL model agrees well with the reference temperature field, whereas the DNN model struggles to capture it accurately. This highlights the significant improvement in deep learning performance facilitated by prior knowledge from the analytical solution and physical information. The pre-training model incorporates physical knowledge to provide better initial parameters (weights and biases), which narrows the search space during fine-tuning. In contrast, the DNN model initializes these parameters randomly and requires more training points to explore the parameter space. To further demonstrate the predictive performance of the proposed model over time, Fig. 6 shows the temperature time series predicted by the PDTL and DNN models at a given observation point (x = 0.5 m, z = 0.5 m). The PDTL model captures the temperature fluctuation trend better than the DNN model. This is especially evident for sparse data: with 5 observation points, the average MSE of the PDTL model is approximately 3.2 times lower than that of the DNN model. As shown in Fig. S2 in the Supplement, there is no significant difference in the performance of the PDTL and DNN models when the number of observation points increases to 200.
Notably, the performance of the PDTL model appears to be less sensitive to the number of observation points. We attribute this to two factors: (1) randomly selected observation points yield the best performance when they lie close to the test point and poorer performance otherwise; (2) the PDTL model integrates substantial information from the analytical model, which reduces the number of observation points required.

Figure 5. Absolute errors between the predicted and reference temperature fields using the PDTL and DNN models for the homogeneous streambed.

Figure 6. Comparisons of the predicted temperature (blue curves) and reference temperature (red curves) using the PDTL and DNN models for the homogeneous streambed.

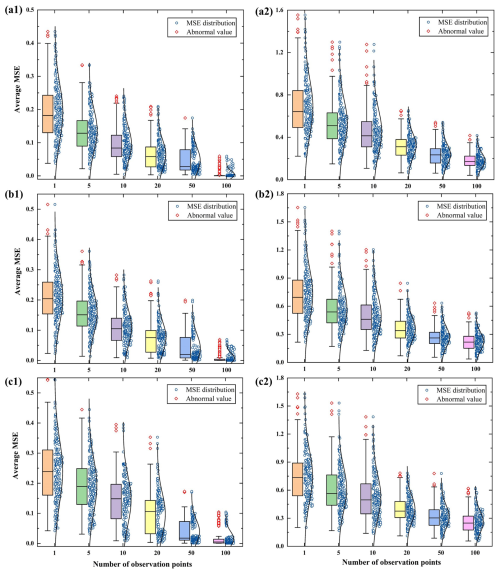

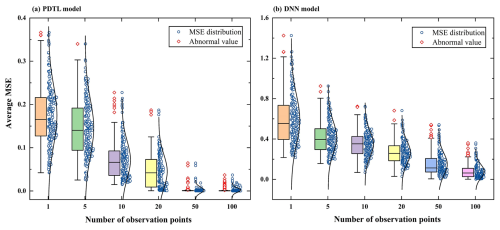

Figure 7. MSE distribution of normalized results from the PDTL and DNN models plotted against the number of observation points for the homogeneous streambed. (a) PDTL model. (b) DNN model.

The choice of observation points can influence the outcomes of the proposed PDTL model. To mitigate the effect of their positions, the observation points are randomly resampled 200 times for each number of points. The distributions of the average MSE for both the PDTL and DNN models across different numbers of observation points are illustrated in Fig. 7. Both the interquartile range and the mean MSE of the PDTL model are considerably smaller than those of the DNN model. For example, with 10 observation points, the average MSE of the PDTL model is approximately 0.11, whereas that of the DNN model is 0.54. Furthermore, the interquartile range and mean MSE of the PDTL model decrease markedly as observation points are added and then stabilize once the number of observation points exceeds 50. In contrast, the interquartile range and mean MSE of the DNN model continue to decrease as the number of observation points increases, consistent with the pattern observed in Fig. 6. It should be emphasized that the PDTL model still produces satisfactory results even with sparse data. Even with more than 50 observation points, the DNN model still underperforms the PDTL model, which can be attributed to two reasons: (1) lacking prior physical knowledge, the DNN model may require more data to learn relatively complex patterns; (2) both the PDTL and DNN models follow the same convergence criterion with a restricted number of epochs during fine-tuning, which may leave the DNN model incompletely trained.
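The repeated-sampling protocol can be sketched in a few lines of NumPy. The sketch assumes candidate observation points are indexed from 0 to n_candidates − 1 (e.g., the 10 000 nodes of a 100×100 grid), and `score_fn` is a hypothetical callback that would fine-tune a model on the selected points and return its test MSE; a dummy scorer stands in here so the example runs. The interquartile range is computed as the difference between the 75th and 25th percentiles.

```python
import numpy as np


def sample_observation_sets(n_candidates, n_points, n_repeats, rng):
    """Draw n_repeats random sets of n_points distinct observation-point indices."""
    return [rng.choice(n_candidates, size=n_points, replace=False)
            for _ in range(n_repeats)]


def summarize(mse_values):
    """Median, interquartile range (Q3 - Q1), and mean of an MSE distribution."""
    q1, median, q3 = np.percentile(mse_values, [25, 50, 75])
    return {"median": float(median), "iqr": float(q3 - q1),
            "mean": float(np.mean(mse_values))}


def run_experiment(score_fn, n_candidates, point_counts=(1, 5, 10, 20, 50, 100),
                   n_repeats=200, seed=0):
    """Repeat the random draw n_repeats times per point count and summarize the MSEs."""
    rng = np.random.default_rng(seed)
    results = {}
    for n in point_counts:
        draws = sample_observation_sets(n_candidates, n, n_repeats, rng)
        results[n] = summarize([score_fn(idx) for idx in draws])
    return results


# Dummy scorer (placeholder for "fine-tune on these points, return test MSE").
results = run_experiment(lambda idx: 1.0 / len(idx), n_candidates=100 * 100)
```

In a real run, `score_fn` would be called once per draw for both the PDTL and DNN models, so the resulting medians and interquartile ranges correspond directly to the box statistics plotted in Fig. 7.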

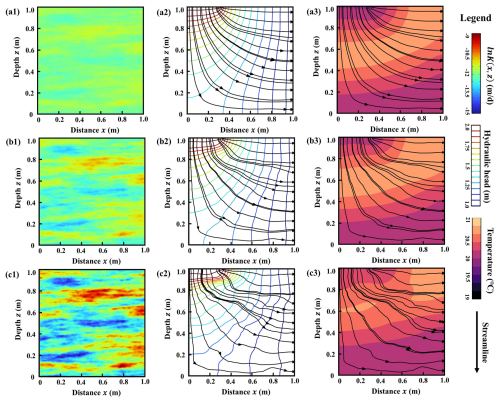

Figure 8. Heat map and contours of hydraulic head, temperature field, and streamlines for different K fields. Panels (a1)–(c1) show the heat maps of the K fields, panels (a2)–(c2) show the contours of hydraulic head and streamlines at 0.5 d, and panels (a3)–(c3) show the contours of the temperature field and streamlines at 0.5 d for , 0.5, and 1.0, respectively.

3.3 Effects of nonuniform flow on heat transfer

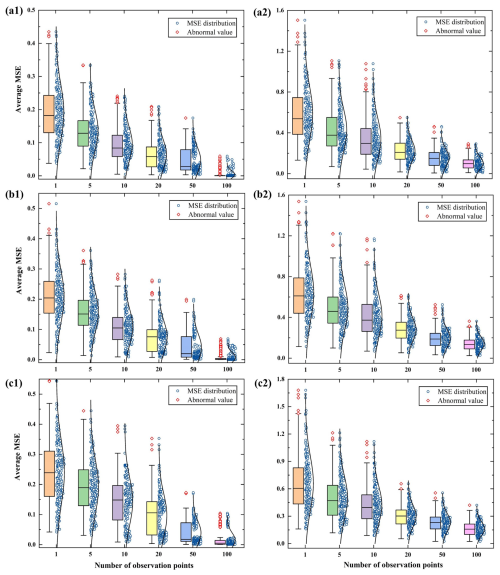

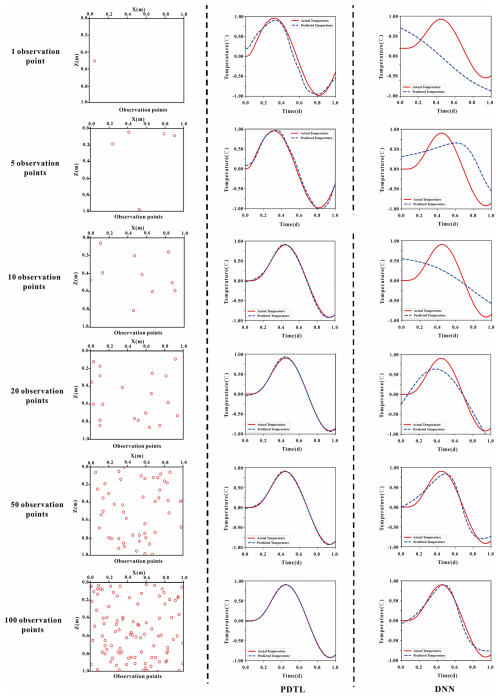

In this section, we evaluate the performance of the PDTL model in predicting the spatiotemporal temperature distribution in heterogeneous streambeds. The heterogeneous lnK field is generated using the exponential covariance function with mean μ = 0, correlation length l = 0.1 m in both the x and z directions, and variance , 0.5, and 1.0, respectively. Accordingly, three scenarios with low to high heterogeneity are created. Figure 8 depicts the random lnK fields and reference flow fields of the three scenarios. The other parameters remain consistent with those of the homogeneous streambed. The temperature distribution in the heterogeneous streambed is computed with the numerical model. Temperature time series at 1, 5, 10, 20, 50, and 100 observation points are extracted to fine-tune both the PDTL and DNN models.
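For small grids, an lnK field with an exponential covariance can be sketched with a Cholesky factorization, as below. This is an illustrative generator, not necessarily the one used in the study: it assumes the isotropic form C(h) = σ² exp(−h/l) (conventions for the exponential model vary), and the grid size, spacing, seed, and helper names are hypothetical. The cost of a dense Cholesky factorization grows with the cube of the number of nodes, so larger grids are usually handled with more efficient methods such as spectral or sequential Gaussian simulation.

```python
import numpy as np


def exponential_covariance(h, variance, corr_len):
    """C(h) = variance * exp(-h / corr_len); an assumed parameterization."""
    return variance * np.exp(-h / corr_len)


def gaussian_lnk_field(nx, nz, dx, dz, variance, corr_len=0.1, mean=0.0, seed=0):
    """Sample a stationary Gaussian lnK field on an nz-by-nx grid via Cholesky factorization."""
    x = np.arange(nx) * dx
    z = np.arange(nz) * dz
    xx, zz = np.meshgrid(x, z)                    # both of shape (nz, nx)
    pts = np.column_stack([xx.ravel(), zz.ravel()])
    # Pairwise separation distances between all grid nodes.
    h = np.sqrt(((pts[:, None, :] - pts[None, :, :]) ** 2).sum(axis=-1))
    C = exponential_covariance(h, variance, corr_len)
    L = np.linalg.cholesky(C + 1e-10 * np.eye(len(pts)))  # jitter for numerical stability
    rng = np.random.default_rng(seed)
    lnk = mean + L @ rng.standard_normal(len(pts))  # correlated Gaussian sample
    return lnk.reshape(nz, nx)


# Hypothetical 30 x 30 grid with 0.02 m spacing, mean 0, variance 0.5, l = 0.1 m.
lnk = gaussian_lnk_field(30, 30, 0.02, 0.02, variance=0.5)
K = np.exp(lnk)  # conductivity field in relative units (geometric mean exp(0) = 1)
```

Multiplying a vector of independent standard normal values by the Cholesky factor L gives a sample whose covariance is L Lᵀ = C, which is why this construction reproduces the target correlation structure.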

To mitigate the impact of random sampling during fine-tuning, 200 stochastic simulations are performed. The distributions of the average MSE for both the PDTL and DNN models in the three heterogeneous streambeds, from low to high heterogeneity, are shown in Fig. 9. The average MSE of the PDTL model is consistently low and significantly lower than that of the DNN model. In addition, for the same number of observation points, a decrease in the lnK variance corresponds to a reduction in the average MSE. These findings can be explained by the strong ability of the proposed PDTL model to transfer knowledge between two datasets with similar structures or features: a smaller lnK variance indicates less heterogeneity in the lnK field, resulting in a temperature field that more closely resembles those generated by the analytical model. We attribute the improvement of the PDTL model to the better initial parameters obtained by incorporating physical knowledge during pre-training, which are carried into the fine-tuning process. For both the PDTL and DNN models, the interquartile ranges and mean values of MSE decrease as the number of observation points increases. Notably, by leveraging insights from the analytical model, the PDTL model can effectively predict the temperature distribution in heterogeneous streambeds even with sparse observation points (e.g., 5 observation points). In contrast, while the DNN model improves with more observation points, its performance depends heavily on this factor, and its results are unsatisfactory with fewer observation points. Once the number of observation points reaches 50, the interquartile range and MSE of the PDTL model change only marginally, whereas those of the DNN model still decrease significantly.
Furthermore, there is no significant difference in the performance of the PDTL and DNN models in heterogeneous scenarios when the number of observation points increases to 200, as shown in Figs. S3 and S4 in the Supplement. For the same number of observation points, the average MSE of the PDTL model is approximately 2.8–18.4 times smaller than that of the DNN model, which further demonstrates the capability of the PDTL model to transfer knowledge from homogeneous to heterogeneous environments.

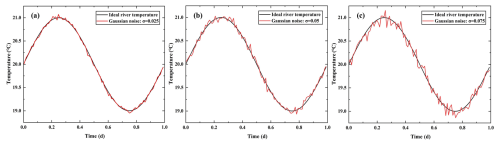

3.4 Effects of river temperature uncertainty

In this section, we evaluate the effectiveness of the PDTL model in the presence of river temperature observation noise, which may arise from suboptimal field conditions or sensor resolution limitations (Chen et al., 2022; Shi et al., 2023). Specifically, white Gaussian noise is added to the river temperature at the top boundary:

$$T_{\mathrm{Noise}}(x, 0, t) = T(x, 0, t) + \mathrm{normrnd}\left(\varphi_{\mathrm{Noise}}, \sigma_{\mathrm{Noise}}\right), \quad 0 \le x \le L$$

where T(x, 0, t) is the noise-free river temperature, T_Noise(x, 0, t) is the perturbed boundary temperature, φNoise and σNoise denote the mean and standard deviation of the white Gaussian noise, respectively, and normrnd(·) draws random samples from the corresponding Gaussian distribution. In this section, φNoise is set to 0 °C and σNoise is set to 0.025, 0.05, and 0.075 °C, as shown in Fig. 10. The heterogeneous lnK field of the streambed is again generated using the exponential covariance function with μ = 0 and l = 0.1 m in both the x and z directions. The temperature time series from different numbers of observation points (1, 5, 10, 20, 50, and 100) are used as training datasets for both the PDTL and DNN models. Additionally, 200 stochastic simulations are conducted to mitigate the influence of random sampling of observation points during fine-tuning.
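The noisy river boundary can be sketched with NumPy's random generator, whose `Generator.normal(loc, scale, size)` takes a mean and a standard deviation. This is a hypothetical illustration: it assumes a noise-free signal of 20 + sin(2πt/P) °C with P = 1 d (the text specifies only the 19–21 °C range), and the function name and seed are placeholders.

```python
import numpy as np


def noisy_river_temperature(t, sigma, mean_noise=0.0, period=1.0, seed=0):
    """River temperature (°C) at times t (d): 20 + sin(2*pi*t/period) plus white Gaussian noise.

    sigma is the noise standard deviation in °C; the sine phase and 1 d period are assumptions.
    """
    t = np.asarray(t, dtype=float)
    clean = 20.0 + np.sin(2.0 * np.pi * t / period)
    rng = np.random.default_rng(seed)
    return clean + rng.normal(loc=mean_noise, scale=sigma, size=t.shape)


t = np.linspace(0.0, 1.0, 100)  # 100 time steps over one assumed period
signals = {s: noisy_river_temperature(t, s) for s in (0.025, 0.05, 0.075)}
```

Each noise level produces an independent perturbation of the same underlying sinusoid, mirroring the three panels of Fig. 10; a fixed seed keeps the boundary forcing reproducible across model runs.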

Figure 10. Time series of river temperature under different observation noise levels. (a) σ = 0.025 °C; (b) σ = 0.05 °C; (c) σ = 0.075 °C.

Figure 11 shows the distributions of the average MSE for both the PDTL and DNN models under different noise levels. Both models are sensitive to noise, and higher noise levels degrade model performance. Nevertheless, the PDTL model is less affected by river temperature uncertainty than the DNN model. For instance, with 10 observation points, the average MSE of the DNN model decreases from 0.59 to 0.45 as σ decreases from 0.075 to 0.025 °C. In contrast, the average MSE of the PDTL model decreases only from 0.11 to 0.08 under the same conditions, demonstrating the superior robustness of the PDTL model over the DNN model.

This study investigates the effects of streambed heterogeneity, temperature observation noise, and the number of observation points at different locations on the performance of the proposed PDTL model. Results indicate that the proposed PDTL model exhibits robust prediction performance with significantly reduced interquartile ranges and MSE, particularly in scenarios with sparse data. These findings suggest that integrating analytical knowledge effectively decreases model uncertainty. Compared with conventional data-driven models, which often require extensive training data, the PDTL model leverages analytical knowledge to improve accuracy while reducing uncertainty. This highlights its potential advantages in environmental and hydrological studies where data collection is often constrained. A key strength of this framework is its transferability to applications beyond heat transfer in riparian zones. By integrating analytical knowledge with a data-driven approach, it can be extended to solute transport and heat transfer in other heterogeneous porous media, for example in studies of groundwater contaminant migration and CO2 geological storage. This versatility points to the framework's potential for broader applications in environmental and hydrological studies. However, users should carefully consider the choice of training data and the assumptions underlying the analytical solutions when applying this framework to different settings.

Despite its advantages, several limitations of the PDTL model proposed in this study should be recognized. First, the PDTL model cannot reliably extrapolate: because no observation points are available outside the training domain, its performance on extrapolative tasks is limited. Second, this study focuses on modeling heat transfer in heterogeneous riparian zones, and the effectiveness of the PDTL model may be influenced by the selection of the K value. Third, using locations as inputs may limit the model's transferability to other sites and weaken its direct connection to measurable physical variables. Future work will incorporate additional physically measurable parameters, such as surface temperature, river–aquifer fluxes, or hydraulic gradients, to enhance the model's generalizability and physical relevance. Finally, analytical models usually require regular spatial domains, whereas real-world study areas (e.g., watersheds) often have irregular spatial domains. The effectiveness of the PDTL model may therefore be affected by discrepancies between the temperature field in the real-world area and the simplified analytical solution, especially near the boundary. Future research should systematically compare transfer-learning-based models with conventional models in terms of computational efficiency, predictive accuracy, and adaptability to diverse hydrological settings. Additionally, efforts should focus on improving the framework's ability to handle irregular spatial domains through coordinate transformations, domain padding, or hybrid numerical–analytical datasets, and on refining its extrapolation capability. Addressing these challenges will further enhance the applicability of the PDTL model in environmental and hydrological research.

In this study, we propose a novel physics-informed deep transfer learning (PDTL) approach that enhances DNN models by integrating the physical mechanisms described by an analytical model through transfer learning. The proposed PDTL model is tested against the DNN model under different levels of streambed heterogeneity and observation noise. Results indicate that, by incorporating knowledge transferred from pre-trained DNN models, the PDTL model significantly improves the robustness and accuracy of spatiotemporal temperature predictions in heterogeneous streambeds. Importantly, the PDTL model maintains satisfactory performance even with sparse training data and high uncertainties in geological conditions and observations, making it a promising tool for riparian zone management, particularly where data acquisition is challenging and costly. The main conclusions are summarized as follows:

- Hydraulic conductivity primarily influences the parameters of the shallow layers of the DNN model, which makes a transfer learning approach feasible for predicting the spatiotemporal temperature distribution in heterogeneous streambeds.
- The accuracy of the predicted temperature fields improves for both the PDTL and DNN models as the number of observation points increases, and the PDTL model significantly outperforms the DNN model in both homogeneous and heterogeneous scenarios.
- The PDTL model is more robust to observation noise than the DNN model and performs satisfactorily even with sparse training data.
- The successful application of the PDTL model to predicting the spatiotemporal temperature distribution in heterogeneous streambeds demonstrates its advantages and indicates its prospects for estimating surface water–groundwater interaction fluxes in heterogeneous riparian zones.
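The workflow summarized above (pre-train a DNN on data generated by an analytical model, then adapt it to sparse observations by retraining only the shallow layers, which the conclusions identify as most sensitive to hydraulic conductivity) can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation (their Python code is archived on Zenodo). A conduction-only damped diurnal temperature wave stands in for the two-dimensional analytical solution, and a change in damping depth stands in for streambed heterogeneity. "Shallow" is taken to mean the hidden layer closest to the input. The helper names, network size, learning rate, and epoch counts are arbitrary choices.

```python
import copy
import math
import random


def analytical_temperature(z, t, damping_depth):
    """Hypothetical stand-in for the analytical model: a diurnal temperature
    wave damped and lagged with depth z (conduction only, normalized units)."""
    return math.exp(-z / damping_depth) * math.sin(2.0 * math.pi * t - z / damping_depth)


def init_params(rng, sizes):
    """One (weights, biases) pair per fully connected layer."""
    return [([[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)]
              for _ in range(n_out)], [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]


def forward(params, x):
    """Shallow tanh layer -> deep tanh layer -> linear output neuron."""
    (w1, b1), (w2, b2), (w3, b3) = params
    h1 = [math.tanh(sum(w * v for w, v in zip(row, x)) + b) for row, b in zip(w1, b1)]
    h2 = [math.tanh(sum(w * v for w, v in zip(row, h1)) + b) for row, b in zip(w2, b2)]
    return h1, h2, sum(w * v for w, v in zip(w3[0], h2)) + b3[0]


def model_mse(params, data):
    return sum((forward(params, x)[2] - y) ** 2 for x, y in data) / len(data)


def train(params, data, epochs, lr, trainable):
    """Full-batch gradient descent on the MSE. Only the layer indices listed in
    `trainable` are updated; every other layer stays frozen."""
    n = len(data)
    for _ in range(epochs):
        grads = [([[0.0] * len(row) for row in w], [0.0] * len(b)) for w, b in params]
        w2, w3 = params[1][0], params[2][0]
        for x, y in data:
            h1, h2, pred = forward(params, x)
            d3 = 2.0 * (pred - y) / n  # dLoss/dOutput for this sample
            d2 = [d3 * w3[0][j] * (1.0 - h2[j] ** 2) for j in range(len(h2))]
            d1 = [sum(d2[j] * w2[j][k] for j in range(len(d2))) * (1.0 - h1[k] ** 2)
                  for k in range(len(h1))]
            # Accumulate gradients as (layer index, deltas, inputs to that layer)
            for layer, deltas, inputs in ((2, [d3], h2), (1, d2, h1), (0, d1, x)):
                gw, gb = grads[layer]
                for r, d in enumerate(deltas):
                    for c, v in enumerate(inputs):
                        gw[r][c] += d * v
                    gb[r] += d
        for layer in trainable:
            (w, b), (gw, gb) = params[layer], grads[layer]
            for r in range(len(w)):
                for c in range(len(w[r])):
                    w[r][c] -= lr * gw[r][c]
                b[r] -= lr * gb[r]
    return params


rng = random.Random(0)
grid = [i / 7 for i in range(8)]
# Source task: dense synthetic data from the analytical stand-in
source = [((z, t), analytical_temperature(z, t, 0.5)) for z in grid for t in grid]
# Target task: 12 noisy "observations" from a medium with a different damping depth
obs_points = [(rng.random(), rng.random()) for _ in range(12)]
target = [((z, t), analytical_temperature(z, t, 0.35) + rng.gauss(0.0, 0.02))
          for z, t in obs_points]

model = init_params(rng, [2, 8, 8, 1])
mse_source_init = model_mse(model, source)
train(model, source, epochs=400, lr=0.02, trainable=[0, 1, 2])  # pre-training
mse_source_pretrained = model_mse(model, source)

frozen_before = copy.deepcopy(model[1:])  # deep hidden layer + output layer
mse_target_before = model_mse(model, target)
train(model, target, epochs=200, lr=0.02, trainable=[0])  # fine-tune shallow layer only
mse_target_after = model_mse(model, target)
print(f"target MSE before/after fine-tuning: {mse_target_before:.4f} / {mse_target_after:.4f}")
```

Freezing the deeper layers keeps the representation learned from the analytical data, so the few target observations only have to adjust the small set of shallow-layer weights. In a framework such as PyTorch, the same effect is usually obtained by excluding the frozen parameters from the optimizer.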

The Python codes of the PDTL and DNN models are made available for download from a public repository at https://doi.org/10.5281/zenodo.17209290.

The supplement related to this article is available online at https://doi.org/10.5194/hess-29-5251-2025-supplement.

Methodology, software, visualization, writing (original draft): AJ. Methodology, validation, writing (review and editing): WS. Conceptualization, validation, writing (review and editing): JD. Conceptualization, validation, writing (review and editing): RZ. Validation, writing (review and editing): HZ. Writing (review and editing): YH. Conceptualization, writing (review and editing), funding acquisition, project administration: QW. Writing (review and editing): XG.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims made in the text, published maps, institutional affiliations, or any other geographical representation in this paper. While Copernicus Publications makes every effort to include appropriate place names, the final responsibility lies with the authors.

The authors thank the editor and the three anonymous reviewers for their constructive comments that helped us improve the quality of the article.

This research has been supported by the National Natural Science Foundation of China (grant nos. 42222704 and 42502228), the Guangxi Science and Technology Major Program (grant no. AB25069394), the Joint Funds of the National Natural Science Foundation of China (grant no. U24A20596), the Natural Science Foundation of Hubei Province (grant no. 2025AFB012), the Postdoctoral Fellowship Program of China Postdoctoral Science Foundation (grant no. GZB20250729), the China Postdoctoral Science Foundation (grant no. 2025M773160), and the China Postdoctoral Science Foundation – Hubei Joint Support Program (grant no. 2025T027HB).

This paper was edited by Heng Dai and reviewed by three anonymous referees.

Arcomano, T., Szunyogh, I., Wikner, A., Pathak, J., Hunt, B. R., and Ott, E.: A hybrid approach to atmospheric modeling that combines machine learning with a physics-based numerical model, J. Adv. Model. Earth Sy., 14, e2021MS002712, https://doi.org/10.1029/2021MS002712, 2022.

Bandai, T. and Ghezzehei, T. A.: Physics-informed neural networks with monotonicity constraints for Richardson-Richards equation: Estimation of constitutive relationships and soil water flux density from volumetric water content measurements, Water Resour. Res., 57, e2020WR027642, https://doi.org/10.1029/2020WR027642, 2021.

Barclay, J. R., Topp, S. N., Koenig, L. E., Sleckman, M. J., and Appling, A. P.: Train, inform, borrow, or combine? Approaches to process-guided deep learning for groundwater-influenced stream temperature prediction, Water Resour. Res., 59, e2023WR035327, https://doi.org/10.1029/2023WR035327, 2023.

Brunner, P., Therrien, R., Renard, P., Simmons, C. T., and Franssen, H.-J. H.: Advances in understanding river-groundwater interactions, Rev. Geophys., 55, 818–854, https://doi.org/10.1002/2017RG000556, 2017.

Bukaveckas, P. A.: Effects of channel restoration on water velocity, transient storage, and nutrient uptake in a channelized stream, Environ. Sci. Technol., 41, 1570–1576, https://doi.org/10.1021/es061618x, 2007.

Callaham, J. L., Koch, J. V., Brunton, B. W., Kutz, J. N., and Brunton, S. L.: Learning dominant physical processes with data-driven balance models, Nat. Commun., 12, 1016, https://doi.org/10.1038/s41467-021-21331-z, 2021.

Cao, H., Xie, X., Shi, J., Jiang, G., and Wang, Y.: Siamese network-based transfer learning model to predict geogenic contaminated groundwaters, Environ. Sci. Technol., 56, 11071–11079, https://doi.org/10.1021/acs.est.1c08682, 2022.

Chen, K., Zhan, H., and Wang, Q.: An innovative solution of diurnal heat transport in streambeds with arbitrary initial condition and implications to the estimation of water flux and thermal diffusivity under transient condition, J. Hydrol., 567, 361–369, https://doi.org/10.1016/j.jhydrol.2018.10.008, 2018.

Chen, K., Chen, X., Song, X., Briggs, M. A., Jiang, P., Shuai, P., Hammond, G., Zhan, H., and Zachara, J. M.: Using ensemble data assimilation to estimate transient hydrologic exchange flow under highly dynamic flow conditions, Water Resour. Res., 58, e2021WR030735, https://doi.org/10.1029/2021WR030735, 2022.

Chen, Z., Xu, H., Jiang, P., Yu, S., Lin, G., Bychkov, I., Hmelnov, A., Ruzhnikov, G., Zhu, N., and Liu, Z.: A transfer learning-based LSTM strategy for imputing large-scale consecutive missing data and its application in a water quality prediction system, J. Hydrol., 602, 126573, https://doi.org/10.1016/j.jhydrol.2021.126573, 2021.

Cho, K. and Kim, Y.: Improving streamflow prediction in the WRF-Hydro model with LSTM networks, J. Hydrol., 605, 127297, https://doi.org/10.1016/j.jhydrol.2021.127297, 2022.

Cui, G. and Zhu, J.: Prediction of unsaturated flow and water backfill during infiltration in layered soils, J. Hydrol., 557, 509–521, https://doi.org/10.1016/j.jhydrol.2017.12.050, 2018.

Elliott, A. H. and Brooks, N. H.: Transfer of nonsorbing solutes to a streambed with bed forms: Laboratory experiments, Water Resour. Res., 33, 137–151, https://doi.org/10.1029/96WR02783, 1997.

Feigl, M., Lebiedzinski, K., Herrnegger, M., and Schulz, K.: Machine-learning methods for stream water temperature prediction, Hydrol. Earth Syst. Sci., 25, 2951–2977, https://doi.org/10.5194/hess-25-2951-2021, 2021.

Fleckenstein, J. H., Krause, S., Hannah, D. M., and Boano, F.: Groundwater-surface water interactions: New methods and models to improve understanding of processes and dynamics, Adv. Water Resour., 33, 1291–1295, https://doi.org/10.1016/j.advwatres.2010.09.011, 2010.

Guo, H., Zhuang, X., Alajlan, N., and Rabczuk, T.: Physics-informed deep learning for melting heat transfer analysis with model-based transfer learning, Comput. Math. Appl., 143, 303–317, https://doi.org/10.1016/j.camwa.2023.05.014, 2023.

Halloran, L. J. S., Rau, G. C., and Andersen, M. S.: Heat as a tracer to quantify processes and properties in the vadose zone: A review, Earth-Sci. Rev., 159, 358–373, https://doi.org/10.1016/j.earscirev.2016.06.009, 2016.

He, Q. and Tartakovsky, A. M.: Physics-informed neural network method for forward and backward advection-dispersion equations, Water Resour. Res., 57, e2020WR029479, https://doi.org/10.1029/2020WR029479, 2021.

Heavilin, J. E. and Neilson, B. T.: An analytical solution to main channel heat transport with surface heat flux, Adv. Water Resour., 47, 67–75, https://doi.org/10.1016/j.advwatres.2012.06.006, 2012.

Hu, J., Tang, J., and Lin, Y.: A novel wind power probabilistic forecasting approach based on joint quantile regression and multi-objective optimization, Renewable Energy, 149, 141–164, https://doi.org/10.1016/j.renene.2019.11.143, 2020.

Jiang, S. and Durlofsky, L. J.: Use of multifidelity training data and transfer learning for efficient construction of subsurface flow surrogate models, J. Comput. Phys., 474, 111800, https://doi.org/10.1016/j.jcp.2022.111800, 2023.

Jiang, S., Zheng, Y., and Solomatine, D.: Improving AI system awareness of geoscience knowledge: Symbiotic integration of physical approaches and deep learning, Geophys. Res. Lett., 47, e2020GL088229, https://doi.org/10.1029/2020GL088229, 2020.

Jin, A., Wang, Q., Zhan, H., and Zhou, R.: Comparative performance assessment of physical-based and data-driven machine-learning models for simulating streamflow: A case study in three catchments across the US, J. Hydrol. Eng., 29, 05024004, https://doi.org/10.1061/JHYEFF.HEENG-6118, 2024.

Jin, A.: Python codes for “Improving heat transfer predictions in heterogeneous riparian zones using transfer learning techniques”, Zenodo [code], https://doi.org/10.5281/zenodo.17209290, 2025.

Kalbus, E., Reinstorf, F., and Schirmer, M.: Measuring methods for groundwater – surface water interactions: a review, Hydrol. Earth Syst. Sci., 10, 873–887, https://doi.org/10.5194/hess-10-873-2006, 2006.

Kamrava, S., Sahimi, M., and Tahmasebi, P.: Simulating fluid flow in complex porous materials by integrating the governing equations with deep-layered machines, npj Comput. Mater., 7, 127, https://doi.org/10.1038/s41524-021-00598-2, 2021.

Karan, S., Engesgaard, P., and Rasmussen, J.: Dynamic streambed fluxes during rainfall–runoff events, Water Resour. Res., 50, 2293–2311, https://doi.org/10.1002/2013WR014155, 2014.

Karpatne, A., Atluri, G., Faghmous, J. H., Steinbach, M., Banerjee, A., Ganguly, A., Shekhar, S., Samatova, N., and Kumar, V.: Theory-guided data science: A new paradigm for scientific discovery from data, IEEE T. Knowl. Data En., 29, 2318–2331, https://doi.org/10.1109/TKDE.2017.2720168, 2017.

Keery, J., Binley, A., Crook, N., and Smith, J. W. N.: Temporal and spatial variability of groundwater-surface water fluxes: Development and application of an analytical method using temperature time series, J. Hydrol., 336, 1–16, https://doi.org/10.1016/j.jhydrol.2006.12.003, 2007.

Kim, T., Yang, T., Gao, S., Zhang, L., Ding, Z., Wen, X., Gourley, J. J., and Hong, Y.: Can artificial intelligence and data-driven machine learning models match or even replace process-driven hydrologic models for streamflow simulation?: A case study of four watersheds with different hydro-climatic regions across the CONUS, J. Hydrol., 598, 126423, https://doi.org/10.1016/j.jhydrol.2021.126423, 2021.

Raissi, M., Perdikaris, P., and Karniadakis, G. E.: Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations, J. Comput. Phys., 378, 686–707, https://doi.org/10.1016/j.jcp.2018.10.045, 2019.

Raissi, M., Yazdani, A., and Karniadakis, G. E.: Hidden fluid mechanics: Learning velocity and pressure fields from flow visualizations, Science, 367, 1026–1030, https://doi.org/10.1126/science.aaw4741, 2020.

Read, J. S., Jia, X., Willard, J., Appling, A. P., Zwart, J. A., Oliver, S. K., Karpatne, A., Hansen, G. J. A., Hanson, P. C., Watkins, W., Steinbach, M., and Kumar, V.: Process-guided deep learning predictions of lake water temperature, Water Resour. Res., 55, 9173–9190, https://doi.org/10.1029/2019WR024922, 2019.

Ren, J., Wang, X., Shen, Z., Zhao, J., Yang, J., Ye, M., Zhou, Y., and Wang, Z.: Heat tracer test in a riparian zone: Laboratory experiments and numerical modelling, J. Hydrol., 563, 560–575, https://doi.org/10.1016/j.jhydrol.2018.06.030, 2018.

Ren, J., Zhang, W., Yang, J., and Zhou, Y.: Using water temperature series and hydraulic heads to quantify hyporheic exchange in the riparian zone, Hydrogeol. J., 27, 1419–1437, https://doi.org/10.1007/s10040-019-01934-z, 2019.

Ren, J., Zhuang, T., Wang, D., and Dai, J.: Water flow and heat transport in the hyporheic zone of island riparian: A field experiment and numerical simulation, J. Coastal Res., 39, 848–861, https://doi.org/10.2112/JCOASTRES-D-22-00120.1, 2023.

Schmidt, C., Martienssen, M., and Kalbus, E.: Influence of water flux and redox conditions on chlorobenzene concentrations in a contaminated streambed, Hydrol. Process., 25, 234–245, https://doi.org/10.1002/hyp.7839, 2011.

Shi, W., Zhan, H., Wang, Q., and Xie, X.: A two-dimensional closed-form analytical solution for heat transport with nonvertical flow in riparian zones, Water Resour. Res., 59, e2022WR034059, https://doi.org/10.1029/2022WR034059, 2023.

Tartakovsky, A. M., Marrero, C. O., Perdikaris, P., Tartakovsky, G. D., and Barajas-Solano, D.: Physics-informed deep neural networks for learning parameters and constitutive relationships in subsurface flow problems, Water Resour. Res., 56, e2019WR026731, https://doi.org/10.1029/2019WR026731, 2020.

Vandaele, R., Dance, S. L., and Ojha, V.: Deep learning for automated river-level monitoring through river-camera images: an approach based on water segmentation and transfer learning, Hydrol. Earth Syst. Sci., 25, 4435–4453, https://doi.org/10.5194/hess-25-4435-2021, 2021.

Wade, J., Kelleher, C., and Hannah, D. M.: Machine learning unravels controls on river water temperature regime dynamics, J. Hydrol., 623, 129821, https://doi.org/10.1016/j.jhydrol.2023.129821, 2023.

Wang, N., Chang, H., and Zhang, D.: Deep-learning-based inverse modeling approaches: A subsurface flow example, J. Geophys. Res.-Sol. Ea., 126, e2020JB020549, https://doi.org/10.1029/2020JB020549, 2021.

Wang, Y., Wang, W., Ma, Z., Zhao, M., Li, W., Hou, X., Li, J., Ye, F., and Ma, W.: A deep learning approach based on physical constraints for predicting soil moisture in unsaturated zones, Water Resour. Res., 59, e2023WR035194, https://doi.org/10.1029/2023WR035194, 2023.

Willard, J. D., Read, J. S., Appling, A. P., Oliver, S. K., Jia, X., and Kumar, V.: Predicting water temperature dynamics of unmonitored lakes with meta-transfer learning, Water Resour. Res., 57, e2021WR029579, https://doi.org/10.1029/2021WR029579, 2021.

Xie, W., Kimura, M., Takaki, K., Asada, Y., Iida, T., and Jia, X.: Interpretable framework of physics-guided neural network with attention mechanism: Simulating paddy field water temperature variations, Water Resour. Res., 58, e2021WR030493, https://doi.org/10.1029/2021WR030493, 2022.

Xiong, R., Zheng, Y., Chen, N., Tian, Q., Liu, W., Han, F., Jiang, S., Lu, M., and Zheng, Y.: Predicting dynamic riverine nitrogen export in unmonitored watersheds: Leveraging insights of AI from data-rich regions, Environ. Sci. Technol., 56, 10530–10542, https://doi.org/10.1021/acs.est.2c02232, 2022.

Yeung, Y.-H., Barajas-Solano, D. A., and Tartakovsky, A. M.: Physics-informed machine learning method for large-scale data assimilation problems, Water Resour. Res., 58, e2021WR031023, https://doi.org/10.1029/2021WR031023, 2022.

Zhang, J., Liang, X., Zeng, L., Chen, X., Ma, E., Zhou, Y., and Zhang, Y.-K.: Deep transfer learning for groundwater flow in heterogeneous aquifers using a simple analytical model, J. Hydrol., 626, 130293, https://doi.org/10.1016/j.jhydrol.2023.130293, 2023.

Zhao, C., Yang, L., and Hao, S.: Physics-informed learning of governing equations from scarce data, Nat. Commun., 12, 6136, https://doi.org/10.1038/s41467-021-26434-1, 2021.

Zhao, W. L., Gentine, P., Reichstein, M., Zhang, Y., Zhou, S., Wen, Y., Lin, C., Li, X., and Qiu, G. Y.: Physics-constrained machine learning of evapotranspiration, Geophys. Res. Lett., 46, 14496–14507, https://doi.org/10.1029/2019GL085291, 2019.

Zhou, R. and Zhang, Y.: On the role of the architecture for spring discharge prediction with deep learning approaches, Hydrol. Process., 36, e14737, https://doi.org/10.1002/hyp.14737, 2022.

Zhou, R. and Zhang, Y.: Linear and nonlinear ensemble deep learning models for karst spring discharge forecasting, J. Hydrol., 627, 130394, https://doi.org/10.1016/j.jhydrol.2023.130394, 2023.

Zhou, R., Zhang, Y., Wang, Q., Jin, A., and Shi, W.: A hybrid self-adaptive DWT-WaveNet-LSTM deep learning architecture for karst spring forecasting, J. Hydrol., 634, 131128, https://doi.org/10.1016/j.jhydrol.2024.131128, 2024.

Zuo, G., Luo, J., Wang, N., Lian, Y., and He, X.: Two-stage variational mode decomposition and support vector regression for streamflow forecasting, Hydrol. Earth Syst. Sci., 24, 5491–5518, https://doi.org/10.5194/hess-24-5491-2020, 2020.