the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Quantifying new water fractions and transit time distributions using ensemble hydrograph separation: theory and benchmark tests

Decades of hydrograph separation studies have estimated the proportions of recent precipitation in streamflow using end-member mixing of chemical or isotopic tracers. Here I propose an ensemble approach to hydrograph separation that uses regressions between tracer fluctuations in precipitation and discharge to estimate the average fraction of new water (e.g., same-day or same-week precipitation) in streamflow across an ensemble of time steps. The points comprising this ensemble can be selected to isolate conditions of particular interest, making it possible to study how the new water fraction varies as a function of catchment and storm characteristics. Even when new water fractions are highly variable over time, one can show mathematically (and confirm with benchmark tests) that ensemble hydrograph separation will accurately estimate their average. Because ensemble hydrograph separation is based on correlations between tracer fluctuations rather than on tracer mass balances, it does not require that the end-member signatures are constant over time, or that all the end-members are sampled or even known, and it is relatively unaffected by evaporative isotopic fractionation.

Ensemble hydrograph separation can also be extended to a multiple regression that estimates the average (or “marginal”) transit time distribution (TTD) directly from observational data. This approach can estimate both “backward” transit time distributions (the fraction of streamflow that originated as rainfall at different lag times) and “forward” transit time distributions (the fraction of rainfall that will become future streamflow at different lag times), with and without volume-weighting, up to a user-determined maximum time lag. The approach makes no assumption about the shapes of the transit time distributions, nor does it assume that they are time-invariant, and it does not require continuous time series of tracer measurements. Benchmark tests with a nonlinear, nonstationary catchment model confirm that ensemble hydrograph separation reliably quantifies both new water fractions and transit time distributions across widely varying catchment behaviors, using either daily or weekly tracer concentrations as input. Numerical experiments with the benchmark model also illustrate how ensemble hydrograph separation can be used to quantify the effects of rainfall intensity, flow regime, and antecedent wetness on new water fractions and transit time distributions.

Please read the corrigendum first before continuing.

-

Notice on corrigendum

The requested paper has a corresponding corrigendum published. Please read the corrigendum first before downloading the article.

-

Article

(7794 KB)

- Corrigendum

-

Supplement

(180 KB)

-

The requested paper has a corresponding corrigendum published. Please read the corrigendum first before downloading the article.

- Article

(7794 KB) - Full-text XML

- Corrigendum

-

Supplement

(180 KB) - BibTeX

- EndNote

For nearly 50 years, chemical and isotopic tracers have been used to quantify the relative contributions of different water sources to streamflow following precipitation events (Pinder and Jones, 1969; Hubert et al., 1969); see also reviews by Buttle (1994) and Klaus and McDonnell (2013), and references therein. As reviewed by Klaus and McDonnell (2013), chemical and isotopic hydrograph separation studies have led to many important insights into runoff generation. Foremost among these has been the realization that even at stormflow peaks, stream discharge is often composed primarily of “old” catchment storage rather than “new” recent precipitation (Sklash et al., 1976; Sklash, 1990; Neal and Rosier, 1990; Buttle, 1994). The previous dominant paradigm, based on little more than intuition, had held that because streamflow responds promptly to rainfall, the storm hydrograph must consist primarily of precipitation that reaches the channel quickly. Isotope hydrograph separations showed that this intuition is often wrong, because the isotopic signatures of stormflow often resemble baseflow or groundwater rather than recent precipitation. These observations have not only overthrown the previous dominant paradigm, but also launched decades of research aimed at unraveling the paradox of how catchments store water for weeks or months, but release it within minutes following the onset of rainfall (Kirchner, 2003).

The foundations of conventional two-component hydrograph separation are straightforward. If one assumes that streamflow is a mixture of two end-members of fixed composition, which I will call for simplicity “new” and “old” water, then at any time j the mass balance for the water itself is

and the mass balance for a conservative tracer is

where Q denotes water flux and C denotes the concentration of a passive chemical tracer or the δ value of 18O or 2H. One can straightforwardly solve Eqs. (1) and (2) to express the fraction of new water in streamflow at any time j as

In typical applications, the new water is recent precipitation and the tracer signature of the old water is obtained from pre-event baseflow, which is generally assumed to originate from long-term groundwater storage.

The assumptions underlying conventional hydrograph separation can be summarized as follows:

-

Streamflow is a mixture formed entirely from the sampled end-members; contributions from other possible streamflow sources (such as vadose zone water or surface storage) are negligible.

-

The samples of the end-members are representative (e.g., the sampled precipitation accurately reflects all precipitation, and the sampled baseflow reflects all pre-event water).

-

The tracer signatures of the end-members are constant through time, or their variations can be taken into account.

-

The tracer signatures of the end-members are significantly different from one another.

As reviewed by Rodhe (1987), Sklash (1990), Buttle (1994), and Klaus and McDonnell (2013), each of these assumptions can be problematic in practice:

-

Hydrograph separation studies often lead to implausible (including negative) inferred contributions of new water, and such anomalous results are sometimes attributed to contributions from un-sampled end-members (e.g., von Freyberg et al., 2017). In such cases, assumption no. 1 is clearly not met.

-

The isotopic composition of precipitation can vary considerably within an event, both spatially and temporally, even in small catchments (e.g., McDonnell et al., 1990; McGuire et al., 2005; Fischer et al., 2017; von Freyberg et al., 2017). Likewise, the isotopic signature of the baseflow or groundwater end-member has been shown to vary in space and time during snowmelt and rainfall events (e.g., Hooper and Shoemaker, 1986; Rodhe, 1987; Bishop, 1991; McDonnell et al., 1991). In these cases, assumptions no. 2 and 3 are not met. Various schemes have been proposed to address this spatial and temporal variability by weighting the isotopic compositions of individual samples, but the validity of these schemes typically rests on strong assumptions about the nature of the runoff generation process and the heterogeneity to be averaged over.

-

When the difference between Cnew and Cold is not large compared to their uncertainties, Eq. (3) becomes unstable and the resulting hydrograph separations become unreliable. This problem can be detected using Gaussian error propagation (Genereux, 1998), but Bansah and Ali (2017) report that less than 20 % of the hydrograph separation studies they reviewed have used it.

One can agree with Buttle (1994) that “despite frequent violations of some of its underlying assumptions, the isotopic hydrograph separation approach has proven to be sufficiently robust to be applied to the study of runoff generation in an increasing number of basins,” at least as a characterization of the community's widespread acceptance of the technique. Nonetheless, there is clearly room for new and different ways to quantify stormflow generation. In addition, weekly or even daily isotope measurements are now becoming available for many catchments, sometimes spanning periods of many years, and despite their many uses (particularly for calibrating hydrological models) there is an obvious need for new ways to extract hydrological insights from such time series.

Here I propose a new method for using isotopes and other conservative tracers to quantify the origins of streamflow. This method is based on statistical correlations among tracer fluctuations in streamflow and one or more candidate water sources, rather than mass balances. As such, it exploits the temporal variability in candidate end-members, rather than requiring them to be constant. It also does not require strict mass balance and thus is relatively insensitive to the presence of unmeasured end-members. Because this method quantifies the average proportions of source waters in streamflow across an ensemble of events or time steps, it does not answer the same question that traditional hydrograph separation does (namely, how fractions of new and old water change over time during individual storm events). Instead, it can answer new and different questions, such as how the average fractions of new and old water vary with stream discharge or precipitation intensity, antecedent moisture, etc. The proposed method is designed to provide insights into stormflow generation from regularly sampled time series, even if those time series have gaps and even if they are sampled at frequencies much lower than the storm response timescale of the catchment.

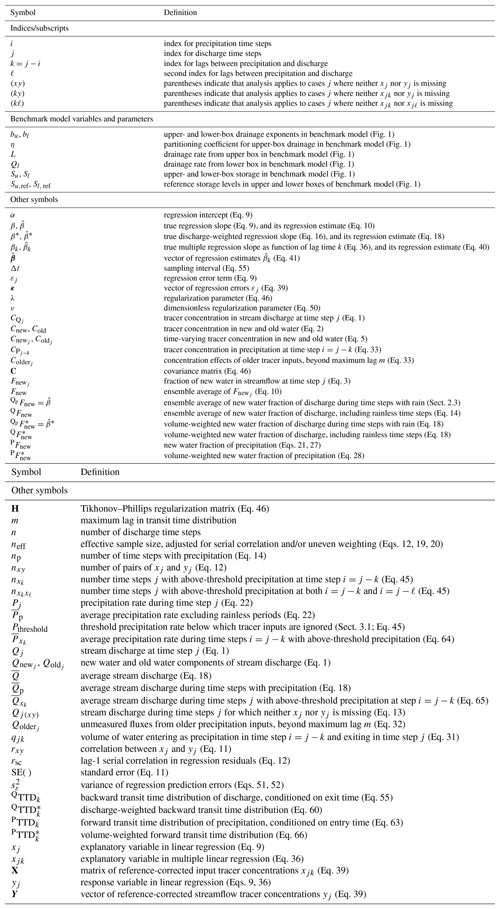

The purpose of this paper is to describe the method, document its mathematical foundations, and test it against a benchmark model, in which the method's results can be verified by age tracking. Applications to real-world catchments will follow in future papers. Because the proposed method is new and thus must be fully documented, several parts of the presentation (most notably Sect. 4.2–4.4 and Appendix B) necessarily contain strong doses of math. The math can be skipped, or lightly skimmed, by those who only need a general sense of the analysis. A table of symbols is provided at the end of the text.

Here I propose a new type of hydrograph separation based on correlations between tracer fluctuations in streamflow and in one or more end-members. This new approach to hydrograph separation does not have the same goal as conventional hydrograph separation. It does not estimate the contributions of end-members to streamflow for each time step (as in Eq. 3). Instead, it estimates the average end-member contributions to streamflow over an ensemble of time steps – hence its name, ensemble hydrograph separation. The ensemble of time steps may be chosen to reflect different catchment conditions and thus used to map out how those catchment conditions influence end-member contributions to streamflow.

2.1 Basic equations

I will first illustrate this approach with a simple example of a time-varying mixing model. Let us assume that we have measured tracer concentrations in streamflow, and in at least one contributing end-member, over an ensemble of time intervals j. The simplest possible mass balance for the water that makes up streamflow would be

where Qnew represents the water flux in streamflow Q that originates from recent precipitation (or, potentially, any other end-member in which tracers can be measured) during time interval j. All other contributions to streamflow are lumped together as Qold. Conservative mixing implies that

where CQ and Cnew are the tracer concentrations in the stream and the new water, and Cold is the tracer signature of all other sources that contribute to streamflow. Combining Eqs. (4) and (5), we directly obtain

where is the fractional contribution of Qnew to streamflow Q. Equation (6) can be rewritten as

which in turn could be rearranged as a conventional mixing model (Eq. 3), with the important difference that the new and old water concentrations are time-varying rather than constant. If we represent the old water composition using the streamwater concentration during the previous time step, Eq. (7) becomes

The lagged concentration serves as a reference level for measuring fluctuations in precipitation and streamflow tracer concentrations and the correlations between them. Thus, it is not necessary that consists entirely of old water as defined in conventional hydrograph separations (i.e., groundwater or baseflow water). It is only necessary that contains no new water (that is, no precipitation that fell during time step j), and this condition is automatically met because is measured during the previous time step. The net effect of is to factor out the legacy effects of previous tracer inputs and to filter out long-term variations in CQ that could otherwise lead to spurious correlations with Cnew.

The ensemble hydrograph separation approach is based on the observation that Eq. (8) is almost equivalent to the conventional linear regression equation,

where the intercept α and the error term εj can be viewed as subsuming any bias or random error introduced by measurement noise, evapoconcentration effects, and so forth. The analogy between Eqs. (9) and (8) suggests that it may be possible to estimate the average value of from the regression slope of a scatterplot of the streamflow concentration against the new water concentration , both expressed relative to the lagged streamflow concentration .

However, astute readers will notice an important difference between Eqs. (8) and (9): in Eq. (9), the regression slope β is a constant, whereas in Eq. (8) varies from one time step to the next. It is not obvious how an estimate of the (constant) slope β will be related to the (non-constant) or whether this relationship could be affected by the other variables in Eq. (8). The answer to this question can be derived analytically and tested using numerical experiments (see Appendix A). As explained in Appendix A, the regression slope in a scatterplot of versus (Fig. A1d) will closely approximate the average value of (averaged over the ensemble of time steps j), under rather general conditions:

-

The slope of the relationship between and , times the mean of , should be small compared to the average Fnew. This will usually be true for conservative tracers, for two reasons. First, because all streamflow is ultimately derived from new water, mass conservation implies that the mean of should usually be small. Second, unless there is a correlation between storm size and tracer concentration (not just between storm size and tracer variance), the slope of the relationship between and should also be small. Thus the product of these two small terms should be small.

-

Points with large leverage in the scatterplot (i.e., with values far above and below the mean) should not be systematically associated with either high or low values of . Such a systematic association is unlikely unless large storms (which are likely to generate large new water fractions) are also associated with both very high and very low tracer concentrations.

-

As expected for typical sampling and measurement errors, the error term εj should not be strongly correlated with .

Thus the analysis in Appendix A shows that a reasonable estimate of the ensemble average of Fnew should, under typical conditions, be obtainable from the regression slope of a plot of versus (i.e., Eq. 9; Fig. A1d).

The least-squares solution of Eq. (9) can be expressed in several equivalent ways. For consistency with the analysis that will be developed in Sect. 4 below, I will use the following formulation, which is mathematically equivalent to those more commonly seen:

where is the least-squares estimate of β, and Fnew is the average of the over the ensemble of points j. Values of yj that lack a corresponding xj, or vice versa (due to sampling gaps, for example, or lack of precipitation), are omitted.

2.2 Uncertainties

The uncertainty in Fnew, expressed as a standard error, can be written as

where sx and sy are the standard deviations of x and y, rxy is the correlation between them, and neff is the effective sample size, which can be adjusted to account for serial correlation in the residuals (Bayley and Hammersley, 1946; Brooks and Carruthers, 1953; Matalas and Langbein, 1962):

where nxy is the number of pairs of xj and yj, and rsc is the lag-1 serial correlation in the regression residuals . For large nxy, Eq. (12) can be approximated as (Mitchell et al., 1966)

where for all positive rsc, Eq. (13) is conservative (it underestimates neff from Eq. 12), and for rsc=0.5 and nxy>50, for example, Eqs. (12) and (13) differ by less than 3 %. If the scatterplot of versus contains outliers, a robust fitting technique such as iteratively reweighted least squares (IRLS) may yield more reliable estimates of Fnew than ordinary least-squares regression. However, the analyses presented here are based on outlier-free synthetic data generated from a benchmark model (see Sect. 3), so in this paper I have used conventional least squares (Eqs. 10–11) instead.

2.3 New water fraction for time steps with precipitation

The meaning of the new water fraction Fnew depends on how the new water and streamwater are sampled. For example, if the new water concentrations Cnew are measured in daily bulk precipitation samples and the stream water concentrations CQ are measured in instantaneous grab samples taken at the end of each 24 h precipitation sampling period, then Fnew will estimate the average fraction of streamflow that is composed of precipitation from the preceding 24 h. If the sampling interval is weekly instead of daily, then Fnew will estimate the average fraction of streamflow that consists of precipitation from the preceding week. This will generally be larger than the Fnew calculated from daily sampling, for the obvious reason that on average more precipitation will have fallen during the previous week than during the previous 24 h, so this precipitation will comprise a larger fraction of streamflow. Also, if the weekly streamflow concentrations are measured in integrated composite samples rather than instantaneous grab samples, then Fnew will estimate the fraction of same-week precipitation in average weekly streamflow rather than in the instantaneous end-of-week streamflow. The general rule is: Fnew should generally estimate whatever new water has been sampled as Cnew, expressed as a fraction of whatever streamflow has been sampled as CQ.

In all of these cases, from Eq. (10) estimates the average fraction of new water in streamflow during time steps with precipitation, because time steps without precipitation lack a new water tracer concentration and thus must be left out from the regression in Eq. (9). Using Qp to denote discharge during periods with precipitation, we can represent this event new water fraction as .

2.4 New water fraction for all time steps

Periods without precipitation will inherently lack same-day (or same-week) precipitation in streamflow. Thus we can calculate the average fraction of new water in streamflow during all time steps, including those without precipitation, as

where QFnew is the new water fraction of all discharge, is the new water fraction of discharge during time steps with precipitation (as estimated by the regression slope , from Eq. 10), and np∕n is the fraction of time steps that have precipitation. The ratio np∕n in Eq. (14) accounts for the fact that during time steps without rain, the new water contribution to streamflow is inherently zero. The same ratio is also used to estimate the uncertainty in QFnew:

2.5 Volume-weighted new water fractions

The regression derived through Eqs. (4)–(9) gives each time interval j equal weight. As a result, from Eq. (10) can be interpreted as estimating the time-weighted average new water fraction. Alternatively, one can estimate the volume-weighted new water fraction,

where and are the volume-weighted means of x and y (averaged over all j for which xj and yj are not missing),

and the notation j∈(xy) indicates sums taken over all j for which xj and yj are not missing. Equations (16)–(17) yield the slope coefficient for linear regressions like Eq. (9), but with each point weighted by the discharge Qj. We can denote the weighted regression slope as , the volume-weighted new water fraction of time intervals with precipitation, where the asterisk indicates volume-weighting.

If, instead, one wants to estimate the new water fraction in all discharge (during periods with and without precipitation), following the approach in Sect. 2.4 one simply rescales this regression slope by the sum of discharge during time steps with precipitation, divided by total discharge:

where is the volume-weighted new water fraction of all discharge, is the fitted regression slope from Eq. (16), is the average discharge for time steps with precipitation, is the average discharge for all time steps (including during rainless periods), and np∕n is the fraction of time steps with rain.

Because the volume-weighting will typically be uneven, the effective sample size will typically be smaller than n; for example, in the extreme case that one sample had nearly all the weight and the other samples had nearly none, the effective sample size would be roughly 1 instead of nxy. Thus, uncertainty estimates for these volume-weighted new water fractions should take account of the unevenness of the weighting. One can account for uneven weighting by calculating the effective sample size, following Kish (1995), as

where the notation Qj(xy) indicates discharge at time steps j for which pairs of xj and yj exist. Equation (19) evaluates to nxy (as it should) in the case of evenly weighted samples and declines toward 1 (as it should) if a single sample has much greater weight than the others. To obtain an estimate of the effective sample size that accounts for both serial correlation and uneven weighting, one can multiply the expressions in Eqs. (19) and (12) or (13). Combining these approaches, one can estimate the standard error of as

where is the fitted regression slope from Eq. (16).

2.6 New water fraction of precipitation

One can also express the flux of new water as a fraction of precipitation rather than discharge. Recently, von Freyberg et al. (2018) have noted, in the context of conventional hydrograph separation, that expressing event water as a proportion of precipitation rather than discharge may lead to different insights into catchment storm response. Analogously, within the ensemble hydrograph separation framework we can estimate the new water fraction of precipitation, denoted PFnew, as

where is the new water fraction of discharge during time steps with precipitation (as estimated by the regression slope , from Eq. 10), and and are the average discharge and precipitation during these time steps. An alternative strategy is to recast Eq. (8) by multiplying both sides by Qj∕Pj, such that the Fnew on the right-hand side now expresses new water as a fraction of precipitation,

This yields a linear regression similar to Eq. (9), but with yj rescaled,

where the regression slope , which can be calculated from Eq. (10) with the new values yj, should approximate the average new water fraction of precipitation PFnew.

The approaches represented by Eqs. (21) and (22)–(23) are not equivalent. Equation (21) is based on the ad hoc assumption – which is verified by the benchmark tests in Sect. 3.3–3.5 – that the average of (new water in streamflow, as a fraction of precipitation) should approximate the average (new water in streamflow, as a fraction of discharge), rescaled by the ratio of average discharge to average precipitation . This is only an approximation, of course; it relies on the approximation that appears in the middle of the following chain of expressions:

where the “p” subscripts on the angled brackets indicate averages taken only over time intervals with precipitation. Whether this is a good approximation will depend on how Pj, Qj, and are distributed, and how they are correlated with one another. By contrast, the approach outlined in Eqs. (22)–(23) is based on the exact substitution of for , which requires no approximations. The same substitution also leads to two other algebraically equivalent formulations of Eq. (22),

and

But although Eqs. (22), (25), and (26) are algebraically equivalent, their statistical behavior is different when they are used as regression equations to estimate the average value of PFnew. The regression estimate of PFnew depends on the distributions of Pj, Qj, and and their correlations with each other, and benchmark testing shows that Eq. (22) yields reasonably accurate estimates of PFnew, but Eqs. (25) and (26) do not. One can also note that the approach outlined in Eq. (21) – the other approach that is successful in benchmark tests – represents an ad hoc time averaging of Pj and Qj in Eq. (22), because it is formally equivalent to

The precise interpretation of PFnew depends on how streamflow is sampled. If the streamflow tracer concentrations come from integrated composite samples over each day or week, then PFnew can be interpreted as the fraction of precipitation that becomes same-day or same-week streamflow. If the streamflow tracer concentrations instead come from instantaneous grab samples (as is more typical), then PFnew can be interpreted as the rate of new water discharge at that time (typically the end of the precipitation sampling interval), as a fraction of the average rate of precipitation. Adapting terminology from the literature of transit time distributions (TTDs), we can call PFnew the “forward” new water fraction because it represents the fraction of precipitation that will exit as streamflow soon (during the same time step), and call and QFnew “backward” new water fractions because they represent the fraction of streamflow that entered the catchment a short time ago. Although the backward new water fraction of discharge comes in two forms ( or QFnew), depending on whether one includes or excludes rainless periods, the forward new water fraction PFnew can only be defined for time steps with precipitation (otherwise PFnew represents the ratio between zero new water and zero precipitation and thus is undefined).

Readers should keep in mind that although PFnew represents the fraction of precipitation that becomes same-day (or same-week) streamflow, different fractions of precipitation may leave the catchment the same day (or week) by other pathways, most notably by evapotranspiration. One could also estimate PFnew for water that leaves the catchment by evapotranspiration if one had tracer time series for evapotranspiration fluxes, but at present such time series are not available. Thus, to echo the principle outlined in Sect. 2.3 above, the new water fraction of precipitation does not represent the forward new water fraction for all possible pathways, but only whatever pathway has been sampled.

2.7 Volume-weighted new water fraction of precipitation

The new water fraction of precipitation as estimated by Eq. (21) is a time-weighted average, in which each day with precipitation counts equally. One may also want to estimate the volume-weighted new water fraction of precipitation, which we can denote as , in keeping with the naming conventions used above. We can estimate at least two different ways. The first method involves recognizing that we are seeking the ratio between the total volume of new water – that is, same-day precipitation reaching streamflow – and the total volume of precipitation. This will equal the volume-weighted new water fraction of discharge (total new water divided by total discharge, which has already been derived in Sect. 2.5 above), rescaled by the ratio of total discharge to total precipitation:

where and are the average rates of discharge and precipitation (averaged over all time steps), is the average discharge on days with rain, and np∕n is the fraction of time steps with rain. An alternative strategy, which yields nearly equivalent results in benchmark tests, precipitation-weights the regression for PFnew (Eq. 22), yielding

where and are the precipitation-weighted means of x and y (averaged over all j for which xj and yj are not missing),

and where the regression slope approximates the precipitation-weighted average forward new water fraction .

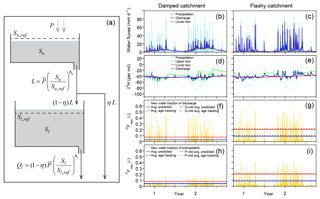

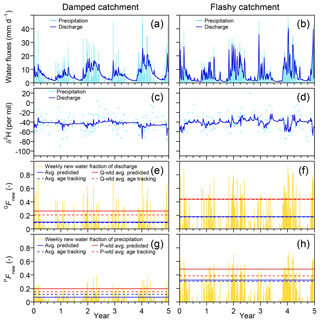

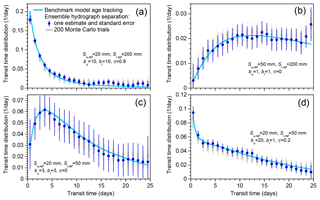

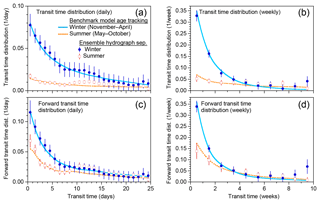

Figure 1Schematic diagram of the benchmark model (a), with 2-year excerpts from illustrative simulations of its behavior (b–i). Model parameters for simulations of damped catchment response (b, d, f, h) are mm, mm, bu=10, bl=3, and η=0.3. For simulations of flashy catchment response (c, e, g, i), all but one of the parameters are the same; only η is changed to 0.8 and a different random realization of precipitation isotopes is used. The same daily precipitation time series (Smith River, Mediterranean climate) is used in both cases. The isotopic composition of streamflow exhibits complex dynamics over multiple timescales (blue line in d, e), as dominance shifts between the upper and lower boxes (green and orange lines, respectively, in d, e). Like the discharge and its isotopic composition, the fraction of discharge comprised of same-day precipitation (the new water fraction of discharge, QFnew, f, g) exhibits complex nonstationary dynamics. Nonetheless, its long-term average (dashed blue line) is well predicted by ensemble hydrograph separation (solid blue line); the same is true of the discharge-weighted average (dashed and solid red lines). The fraction of precipitation appearing in same-day discharge (the forward new water fraction, PFnew, h, i) is somewhat less variable, but both its average and precipitation-weighted average are also well predicted by ensemble hydrograph separation (solid and dashed blue and red lines). In several cases the dashed and solid lines cannot be distinguished because they overlap.

3.1 Benchmark model

To test the methods outlined in Sect. 2 above, I use synthetic data generated by a simple two-box lumped-parameter catchment model. This model is documented in greater detail in Kirchner (2016a) and will be described only briefly here. As shown in Fig. 1a, drainage L from the upper box is a power function of the storage Su within the box; a fraction η of this drainage flows directly to streamflow, and the complementary fraction 1−η recharges the lower box, which drains to streamflow at a rate Ql that is a power function of its storage Sl. The model's behavior is determined by five parameters: the equilibrium storage levels Su, ref and Sl, ref in the upper and lower boxes, their drainage exponents bu and bl, and the drainage partitioning coefficient η. For simplicity, evapotranspiration is not explicitly simulated; instead, the precipitation inputs can be considered to be effective precipitation, net of evapotranspiration losses. Discharge from both boxes is assumed to be non-age selective, meaning that discharge is taken proportionally from each part of the age distribution. Tracer concentrations and mean ages are tracked under the assumption that the boxes are each well mixed but also distinct from one another, so their tracer concentrations and water ages will differ. Water ages and tracer concentrations are also tracked in daily age bins up to an age of 70 days, and mean water ages are tracked in both the upper and lower boxes.

The model operates at a daily time step, with the storage evolution of the lower box calculated by a weighted combination of the partly implicit trapezoidal method (for greater accuracy) and the fully implicit backward Euler method (for guaranteed stability). Unlike in Kirchner (2016a), here the storage evolution of the upper box is calculated by forward Euler integration at 50 sub-daily time steps of 0.02 days (roughly 30 min) each. At this time step, forward Euler integration is stable across the entire parameter ranges used in this paper and is more accurate than daily time steps of trapezoidal or backward Euler integration (which are still adequate for the lower box, where storage volumes change more slowly). Following Kirchner (2016a), the model is driven with three different real-world daily rainfall time series, representing a range of climatic regimes: a humid maritime climate with frequent rainfall and moderate seasonality (Plynlimon, Wales; Köppen climate zone Cfb), a Mediterranean climate marked by wet winters and very dry summers (Smith River, California, USA; Köppen climate zone Csb), and a humid temperate climate with very little seasonal variation in average rainfall (Broad River, Georgia, USA; Köppen climate zone Cfa). Synthetic daily precipitation tracer (deuterium) concentrations are generated randomly from a normal distribution with a standard deviation of 20 ‰ and a lag-1 serial correlation of 0.5, superimposed on a seasonal cycle with an amplitude of 10 ‰. The model is initialized at the equilibrium storage levels Su, ref and Sl, ref, with age distributions and tracer concentrations corresponding to steady-state equilibrium values at the mean input fluxes of water and tracer. The model is then run for a 1-year spin-up period; the results reported here are from 5-year simulations following this spin-up period.

For the simulations shown here, the drainage exponents bu and bl are randomly chosen from uniform distributions of logarithms spanning the range of 1–20, and the partitioning coefficient η is randomly chosen from a uniform distribution ranging from 0.1 to 0.9. The reference storage levels Su,ref and Sl, ref are randomly chosen from a uniform distribution of logarithms spanning the ranges of 50–200 mm and 200–2000 mm, respectively. These parameter distributions encompass a wide range of possible behaviors, including both strong and damped response to rainfall inputs.

I illustrate the behavior of the model using two particular parameter sets, one that gives damped response to precipitation ( mm, mm, bu=10, bl=3, and η=0.3) and one that gives a more rapid response (the same parameters, except η=0.8). These parameter values are not preferable to others in any particular way; they simply generate strongly contrasting streamflow and tracer responses that look plausible as examples of small catchment behavior. They can be interpreted as the behavior of two contrasting model catchments, which for simplicity (but with some linguistic imprecision) I will call the “damped catchment” and the “flashy catchment”, as shorthand for “model catchment with parameters giving more damped response” and “model catchment with parameters giving more flashy response”.

The model also simulates the sampling process and its associated errors. I assume that tracer concentrations cannot be measured when precipitation rates are below a threshold of Pthreshold=1 mm day−1, such that tracer samples below this threshold will be missing. I further assume that 5 % of all other precipitation tracer measurements, and 5 % of all streamflow tracer measurements, will be lost at random times due to sampling or analysis failures. I have also added Gaussian random errors (with a standard deviation of 1 ‰) to all tracer measurements.

3.2 Benchmark model behavior

Panels b–i of Fig. 1 show 2 years of simulated daily behavior driven by the Smith River daily precipitation record applied to the damped and flashy catchment parameter sets. The simulated stream discharge responds promptly to rainfall inputs, and unsurprisingly the discharge response is larger in the flashy catchment (Fig. 1b, c). The streamflow isotopic response is strongly damped in both catchments, with isotope ratios between events returning to a relatively stable baseline value composed mostly of discharge from the lower box (Fig. 1d, e). Like the stream discharge and the isotope tracer time series, the instantaneous new water fractions (determined by age tracking within the model) also exhibit complex nonstationary dynamics (Fig. 1f–i). Despite the complexity of the modeled time-series behavior, ensemble hydrograph separation (Eqs. 14, 18, 21, and 28) accurately predicts the averages of these new water fractions, both unweighted and time-weighted, as can be seen by comparing the dashed and solid lines (which sometimes overlap) in Fig. 1f–i.

It should be emphasized that the ensemble hydrograph separation and the benchmark model are completely independent of one another. The ensemble hydrograph separation does not know (or assume) anything about the internal workings of the benchmark model; it knows only the input and output water fluxes and their isotope signatures. This is crucial for it to work in the real world, where any particular assumptions about the processes driving runoff could potentially be violated. Likewise, the benchmark model is not designed to conform to the assumptions underlying the ensemble hydrograph separation method. It would be relatively trivial to model a tracer time series assuming that new water constituted a fixed fraction of discharge, and then demonstrate that this fraction can be retrieved from the tracer behavior. What Fig. 1 demonstrates is much less obvious, and more important: that even when the new water fraction is highly dynamic and nonstationary, an appropriate analysis of tracer behavior can accurately estimate its mean.

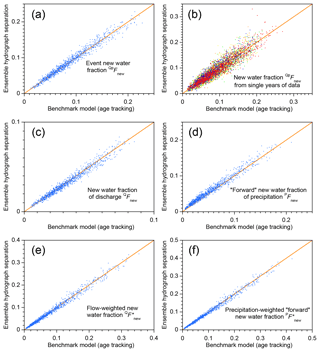

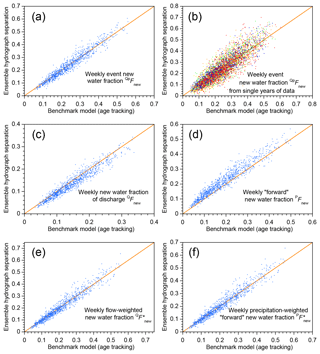

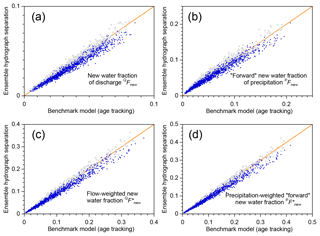

3.3 Benchmark tests: random parameter sets

This result holds not just for the two parameter sets shown in Fig. 1, but throughout the parameter ranges that are tested in the benchmark model. The scatterplots shown in Fig. 2 show new water fractions estimated by ensemble hydrograph separation, compared to the true average new water fractions determined by age tracking in the benchmark model, for 1000 random parameter sets spanning the parameter ranges described in Sect. 3.1. Figure 2 shows that ensemble hydrograph separation yields reasonably accurate estimates of average event new water fractions (Fig. 2a, b), new water fractions of discharge (Fig. 2c) and precipitation (Fig. 2d), and volume-weighted new water fractions (Fig. 2e, f). Estimates derived from single years of data (Fig. 2b) understandably exhibit greater scatter than those derived from 5 years of data (Fig. 2a), but in all of the plots shown in Fig. 2 there is no evidence of significant bias (the data clouds cluster around the 1:1 lines). The scatter of the points around the 1:1 line generally agrees with the standard errors estimated from Eqs. (11), (15), and (20), suggesting that these uncertainty estimates are also reliable.

Figure 2New water fractions predicted from tracer dynamics using ensemble hydrograph separation, compared to averages of time-varying new water fractions determined from age tracking in the benchmark model. Diagonal lines show perfect agreement. Each scatterplot shows 1000 points, each of which represents an individual catchment, with its own individual random set of model parameters (i.e., catchment characteristics), randomly generated precipitation tracer time series, and random set of measurement errors and missing values (see Sect. 3.1). The daily precipitation amounts are the same (Smith River time series; Mediterranean climate) in each case. The event new water fraction (a, b) is the average fraction of new (same-day) water in streamflow during time steps with precipitation, as described in Sect. 2.3. Panel (a) shows event new water fractions estimated from 5 years of simulated tracer data; panel (b) shows the same quantity estimated from single years (each year is denoted by a different color). Averaging over the 5 years reduces both the range and the scatter, compared to the single-year estimates. The new water fraction of discharge (c) is the fraction of same-day precipitation in streamflow, averaged over all time steps including rainless periods (Eq. 14, Sect. 2.4); its flow-weighted counterpart (e) is calculated using Eqs. (16)–(18) of Sect. 2.5. The forward new water fraction (the fraction of precipitation that becomes same-day streamflow; d) is calculated using Eq. (21), and its precipitation-weighted counterpart (f) is calculated using Eq. (28). In all cases there is little evidence of bias, and the scatter around the 1:1 line is relatively small.

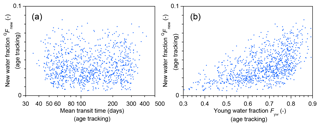

Mean transit times have often been estimated in the catchment hydrology literature, often under the assumption that they should also be correlated with other timescales of catchment transport and mixing as well. This naturally leads to the question, in the context of the present study, of whether there is a systematic relationship between mean transit times and new water fractions, such that they could potentially be predicted from one another. The benchmark model allows a direct test of this conjecture, because it tracks mean water ages as well as new water fractions. Figure 3a shows that, across the 1000 random parameter sets from Fig. 2, the relationship between new water fractions and mean transit times is a nearly perfect shotgun blast: mean transit times vary from about 40 to 400 days and new water fractions vary from nearly zero to nearly 0.1, with almost no correlation between them. Both of these quantities are estimated from age tracking in the benchmark model, so their lack of any systematic relationship does not arise from difficulties in estimating either of them from tracer data. It instead arises because the upper tails of transit time distributions (reflecting the amounts of streamflow with very old ages) exert strong influence on mean transit times, but have no effect on new water fractions (reflecting same-day streamflow).

I have recently proposed the “young water fraction”, the fraction of streamflow younger than about 2.3 months, as a more robust metric of water age than the mean transit time (Kirchner, 2016b). Figure 3b shows that, like the mean transit time, the young water fraction is also a poor predictor of the new water fraction, beyond the obvious constraint that new water (≤1 day old) must be a small fraction of young water (≤69 days old). The new water fraction will only be correlated with the young water fraction or mean transit time if the shape of the underlying transit time distribution is held constant, which is not the case for the 1000 random parameter sets considered here and is not likely to be true in real-world catchments either.

Figure 3Average new water fractions (same-day precipitation in streamflow) for the 1000 simulated catchments (i.e., 1000 model parameter sets) shown in Fig. 2, compared to the catchment mean transit time and the young water fraction Fyw (the fraction of streamflow younger than 2.3 months). All values plotted here are determined from age tracking within the benchmark model, and thus are true values, without any errors associated with estimating these quantities from tracer data. Neither mean transit time nor the young water fraction can reliably predict the fraction of new water in streamflow.

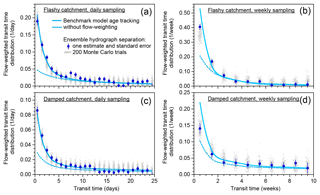

3.4 Benchmark tests: weekly tracer sampling

Many long-term water isotope time series have been sampled at weekly intervals. Can new water fractions be estimated reliably from such sparsely sampled records? To find out, I aggregated the benchmark model's daily time series to weekly intervals, volume-weighting the isotopic composition of precipitation to simulate the effects of weekly bulk precipitation sampling, and subsampling streamflow isotopes every seventh day to simulate weekly grab sampling. I then performed ensemble hydrograph separation on the aggregated weekly data, using the methods presented in Sect. 2.

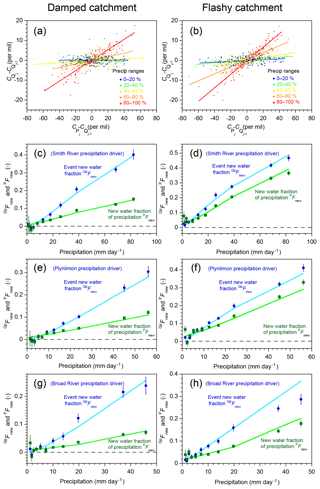

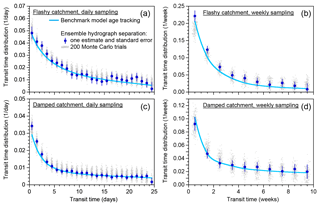

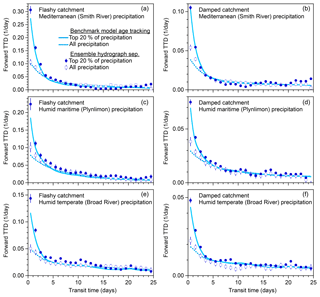

Figure 4Illustrative simulations of weekly water fluxes, deuterium concentrations, and new water fractions. The benchmark model, precipitation forcing, and parameter values are identical to those in Fig. 1. Although the isotope tracer concentrations and new water fractions exhibit complex nonstationary dynamics, ensemble hydrograph separation yields reasonable estimates of the average backward and forward weekly new water fractions, as shown in (e, f) and (g, h), respectively. Panels (a) and (b) show weekly average rates of precipitation and discharge. Panels (c) and (d) show the weekly volume-weighted isotopic composition of precipitation (mimicking what would be collected in a weekly rain sample) and the instantaneous composition of discharge at the end of each week (mimicking what would be collected in a weekly grab sample). Panels (e) and (f) show the fraction of discharge that is composed of same-week precipitation (the weekly new water fraction; yellow lines), as determined from model age tracking, and its long-term average (dashed blue line), compared to the new water fraction predicted by ensemble hydrograph separation (solid blue line) from the weekly samples shown in (b). Panels (g) and (h) show the fraction of precipitation that becomes same-week discharge (the weekly new water fraction of precipitation, or forward new water fraction, yellow lines), as determined from model age tracking, and its long-term average (dashed blue line), compared to the new water fraction predicted by ensemble hydrograph separation (solid blue line). Discharge-weighted and precipitation-weighted average new water fractions, and their predicted values, are shown by red solid and dashed lines.

Figure 5New water fractions estimated from weekly tracer dynamics using ensemble hydrograph separation, compared to averages of time-varying new water fractions determined from age tracking in the benchmark model. Plots are similar to those in Fig. 2, except here they are derived from simulated weekly sampling of tracer concentrations in precipitation and streamflow. Diagonal lines show perfect agreement. Each scatterplot shows 1000 points, each representing an individual random set of parameters, a randomly generated precipitation tracer time series, and a random set of measurement errors and missing values (see Sect. 3.1). The daily precipitation amounts are the same (Smith River time series) in each case. The event new water fraction (a, b) is the average fraction of new (same-day) water in streamflow during time steps with precipitation, as described in Sect. 2.3. Panel (a) shows event new water fractions estimated from 5 years of simulated weekly tracer data; panel (b) shows the same quantity estimated from single years of simulated weekly tracer data (each year is denoted by a different color). Averaging over the 5 years reduces scatter compared to the individual-year estimates. The new water fraction of discharge (c) is the fraction of same-day precipitation in streamflow, averaged over all time steps including rainless periods (Eq. 14, Sect. 2.4); its flow-weighted counterpart (e) is calculated using Eqs. (16)–(18) of Sect. 2.5. The forward new water fraction (the fraction of precipitation that becomes same-day streamflow; d) is calculated using Eq. (21), and its precipitation-weighted counterpart (f) is calculated using Eq. (28). There is only slight visual evidence of bias, and the scatter around the 1:1 line is small compared to the range spanned by the new water fractions.

Figure 4 shows the behavior of the benchmark model at weekly resolution for both the damped and flashy catchments. At the weekly timescale, the benchmark model exhibits complex nonstationary dynamics in discharge (panels a, b), water isotopes (panels c, d), and new water fractions (panels e, h). Nonetheless – and even though the weekly sampling timescale is much longer than the timescales of hydrologic response in the system – ensemble hydrograph separation yields reasonable estimates for the mean new water fractions of both precipitation and discharge (both unweighted and flow-weighted), as one can see by comparing the dashed and solid lines in Fig. 4e–h.

A comparison of Figs. 1 and 4 shows that the isotopic signature of precipitation is less variable among the weekly samples than among the daily samples, reflecting the fact that the weekly bulk samples of precipitation will inherently average over the sub-weekly variability in daily rainfall. By contrast, the weekly grab samples of streamflow lose all information about what is happening on shorter timescales. The new water fractions calculated from the weekly data are distinctly higher than those calculated from the daily data, owing to the fact that the definition of new water depends on the sampling frequency: the proportion of water ≤7 days old (new under weekly sampling) can never be less than the proportion ≤1 day old (new under daily sampling).

Figure 5 shows scatterplots comparing new water fractions estimated by ensemble hydrograph separation and those determined by age tracking in the benchmark model, analogous to Fig. 2 but for weekly instead of daily sampling. The weekly new water fractions are larger than the daily ones, for the reasons described above, and exhibit more scatter because they are based on fewer data points than their daily counterparts are. A small overestimation bias is visually evident in Fig. 2d and an even smaller underestimation bias is evident in Fig. 2c. These reservations notwithstanding, Fig. 5 shows that ensemble hydrograph separation can reliably predict new water fractions of both discharge and precipitation, with and without volume-weighting, based on weekly tracer samples.

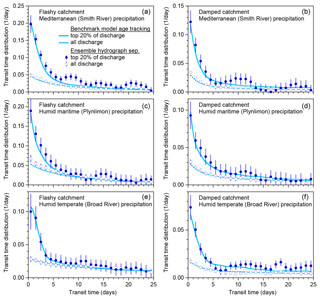

3.5 Variations in new water fractions with discharge, precipitation, and seasonality

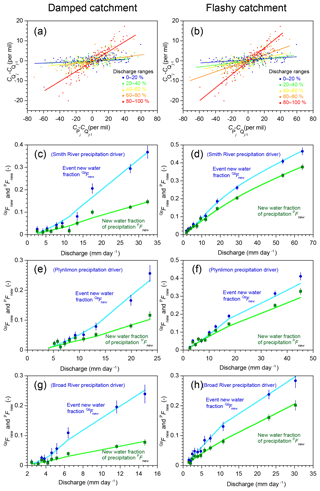

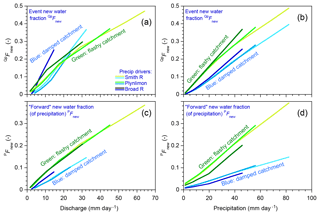

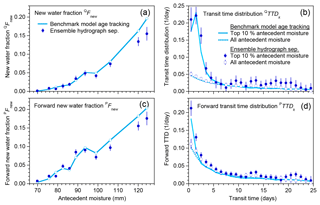

Ensemble hydrograph separation does not require continuous data as input, so it can be used to estimate Fnew values for (potentially discontinuous) subsets of a time series that reflect conditions of particular interest. For example, if we split the time series shown in Fig. 1 into several discharge ranges, we can see that at higher flows, tracer fluctuations in the stream are more strongly correlated with tracer fluctuations in precipitation (Fig. 6a, b). Each of the regression slopes in Fig. 6a, b defines the event new water fraction for the corresponding discharge range. Repeating this analysis for each 10 % interval of the discharge distribution (0th–10th percentile, 10th–20th percentile, etc.), plus the 95th–100th percentile, yields the profiles of as functions of discharge, as shown by the blue dots in Fig. 6c–h. The green squares show the corresponding forward new water fractions PFnew for comparison. The light blue and light green lines show the corresponding true new water fractions determined by age tracking in the benchmark model.

Figure 6Variations in new water fractions across ranges of discharge. (a, b) Relationship between tracer concentrations in precipitation and streamflow in the benchmark model run shown in Fig. 1, stratified by percentiles of the frequency distribution of discharge, for damped and rapid response parameter sets. In these coordinates, the slopes of the regression lines through the ensembles of points estimate their average event new water fractions (Eq. 10; Sect. 2.3). (c–h) Variation in new water fractions across discharge bins in the benchmark model. Dark blue and green symbols show estimates of the event new water fraction of discharge ( and the forward new water fraction (fraction of precipitation appearing in same-day streamflow, PFnew, Eq. 21) for each decile of the daily discharge distribution (the leftmost 10 points) and the uppermost 5 % (the rightmost point). Error bars show standard errors, where these are larger than the plotting symbols. Light blue and light green lines show the corresponding true new water fractions measured by age tracking in the benchmark model. The three rows (c–d, e–f, and g–h) show catchment response to three different precipitation climatologies (Smith River, Plynlimon, and Broad River), for both the damped response parameter set (c, e, g) and the flashy response parameter set (d, f, h). The new water fractions and PFnew vary strongly with discharge. Ensemble hydrograph separation accurately estimates both QpFnew and PFnew across the full range of discharge for all three forcings and both parameter sets.

Figure 7Variations in new water fractions across ranges of precipitation. (a, b) Relationship between tracer concentrations in precipitation and streamflow in the benchmark model run shown in Fig. 1, stratified by percentiles of the frequency distribution of precipitation, for damped and rapid response parameter sets. In these coordinates, the slope of the regression line through each ensemble of points estimates its average event new water fraction QpFnew (Eq. 10; Sect. 2.3). (c–h) Variation in new water fractions across precipitation bins in the benchmark model. Dark blue and green symbols show estimates of the event new water fraction of discharge (QpFnew) and the forward new water fraction (PFnew, the fraction of precipitation appearing in same-day streamflow; Eq. 21). Average QpFnew and PFnew values are plotted for each decile of the daily precipitation distribution (the leftmost 10 points) and the uppermost 5 % (the rightmost point), excluding precipitation amounts less than 1 mm day−1 (see text). Error bars show standard errors, where these are larger than the plotting symbols. Light blue and light green lines show the corresponding true new water fractions measured by age tracking in the benchmark model. The three rows (c–d, e–f, and g–h) show catchment response to three different precipitation climatologies (Smith River, Plynlimon, and Broad River), for both the damped response parameter set (c, e, g) and the flashy response parameter set (d, f, h). The new water fractions QpFnew and PFnew vary strongly with daily precipitation. Ensemble hydrograph separation accurately estimates both and PFnew across the full range of precipitation for all three forcings and both parameter sets.

If, instead, we split the time series shown in Fig. 1 into subsets reflecting ranges of precipitation rates rather than discharge, we obtain Fig. 7. Figure 7 is a counterpart to Fig. 6, but with and PFnew plotted as functions of rainfall rates rather than discharge. The two figures exhibit broadly similar behavior. Unsurprisingly, new water fractions are higher at higher discharges and rainfall rates, because under these conditions a higher fraction of discharge comes from the upper box, which has younger water. Forward new water fractions are typically smaller than event new water fractions, because during storms the rainfall rate is higher than the streamflow rate, so the ratio between same-day streamflow and the total rainfall rate (PFnew) will necessarily be smaller than the ratio between same-day streamflow and the total streamflow rate (). Exceptions to this rule arise when rainfall rates are lower than discharge rates, such as during periods of light rainfall while streamflow is still undergoing recession from previous heavy rain. Thus the green and blue curves cross over one another at the left-hand edges of Fig. 7c–h, whereas in Fig. 6c–h they do not.

Three conclusions can be drawn from Figs. 6 and 7. First, in these model catchments, new water fractions vary dramatically between low flows and high flows, and between low and high precipitation rates, with the event new water fraction and the forward new water fraction PFnew diverging from one another more at higher flows and higher rainfall forcing. Second, different catchment parameters (different columns in Fig. 6) and different precipitation forcings (different rows in Fig. 6) yield different patterns in the relationships between the new water fractions and PFnew on the one hand and precipitation and discharge on the other. And third, these patterns are accurately quantified by ensemble hydrograph separation, which matches the age-tracking results (shown by the solid lines) within the estimated standard errors in most cases.

Thus the patterns describing how new water fractions change with precipitation and discharge may be useful as signatures of catchment transport behavior and can be estimated directly from tracer time series using ensemble hydrograph separation. These observations raise the question of whether any of these signatures of behavior, as inferred from the patterns in these plots (if not the individual numerical values), might imply something useful about the characteristics of the catchments themselves, ideally in a way that is not substantially confounded by precipitation climatology. A comprehensive answer is not possible within the scope of this paper, since it focuses mostly on just two parameter sets and three precipitation records. But as a first approach, one can try superimposing the results in Figs. 6 and 7 on consistent axes (note that the axes in these figures' various panels differ from one another in order to show the full range of behavior). Doing so yields Fig. 8, which overlays the age-tracking results from Figs. 6c–h and 7c–h in its left- and right-hand panels, respectively. In Fig. 8, catchments with the damped and flashy parameter sets are denoted by green and blue curves, respectively, with different levels of brightness corresponding to the three different precipitation climatologies. The key question is: are there patterns in or PFnew that clearly distinguish the flashy catchment from the damped catchment, regardless of the precipitation forcing? Figure 8a shows an example where this is not the case; instead, the two catchments' behaviors largely overlap in a tangle of blue and green lines. In the other three panels, however (and particularly for the trends in PFnew as a function of precipitation rates, as shown in Fig. 8d), the blue and green curves are relatively distinct from one another, but the different climatologies largely overlap for each catchment. This result suggests that these traces may be useful as diagnostic signatures of catchment characteristics, which are relatively insensitive to precipitation climatology. However, Fig. 8 can only be considered a preliminary indication of what might be possible, rather than a definitive demonstration.

Figure 8Effects of precipitation climatology and catchment properties on discharge dependence and precipitation dependence of new water fractions. The lines plotted here superimpose the model age-tracking results (solid lines) from Figs. 6 and 7. Panels (a) and (d) show how event new water fractions (QpFnew, Sect. 2.3) and forward new water fractions (PFnew, Sect. 2.6) vary as functions of discharge and precipitation, respectively. Green and blue lines show benchmark model behavior under the flashy and damped parameter sets, with three levels of brightness corresponding to the three different precipitation climatologies: Mediterranean climate (Smith River, lightest colors), humid maritime climate (Plynlimon, intermediate colors), and humid temperate climate (Broad River, darkest colors). When event new water fractions are plotted as functions of discharge (a), different catchments and precipitation climatologies overlap. By contrast, in the other three panels (and particularly in d, which shows forward new water fractions as functions of precipitation), the lines for the flashy catchment and the damped catchment are clearly distinct from one another, regardless of precipitation climatology. This suggests that these patterns may be diagnostic of the internal workings of the catchment, but relatively insensitive to the particular rainfall forcing.

The behavior summarized in Figs. 6–8 shows that, in general, new water fractions are functions of both catchment characteristics and precipitation climatology. Moreover, new water fractions will depend on the sequence of precipitation events, not just on their frequency distribution, because they will depend on antecedent wetness. Thus although the ensemble hydrograph separation approach does not require continuous data, and thus can be applied to time series with data gaps, any inferred new water fractions will obviously represent only the particular time intervals that are included in the analysis.

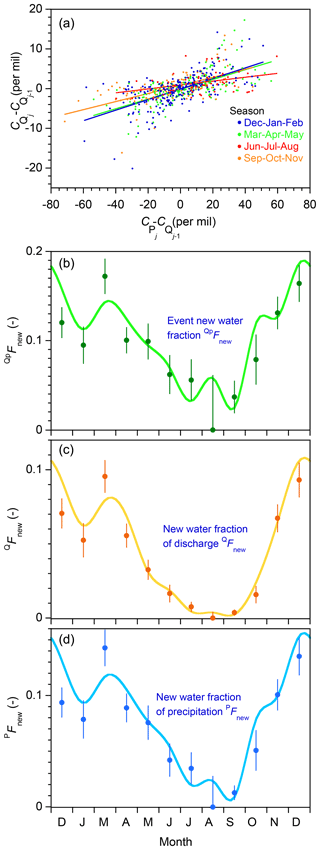

Figure 9Seasonality in new water fractions under Mediterranean climate precipitation forcing. (a) Relationship between tracer concentrations in precipitation and streamflow in the flashy benchmark model run shown in Fig. 1, stratified by season. Each season's event new water fraction can be estimated from the slope of the regression line fitted to the corresponding set of points. (b, c, d) Average event new water fractions (, new water fractions of discharge (QFnew), and forward new water fractions of precipitation (PFnew) calculated from ensembles of all points within each month, across the 5 years of benchmark model simulations. Error bars show standard errors, where these are larger than the plotting symbols. Curves are drawn through true monthly average new water fractions, as determined by age tracking in the benchmark model. Ensemble hydrograph separation reproduces this seasonal pattern in new water fractions reasonably well. The uncertainty estimates also realistically predict the average deviation of the ensemble hydrograph separation estimates from the true age-tracking determinations. Values shown here are generated by the benchmark model with the flashy catchment parameter set and Smith River (Mediterranean climate) precipitation forcing. The new water fractions would exhibit less pronounced seasonality if the rainfall forcing were less strongly seasonal or the catchment response were less flashy.

One implication of the forgoing considerations is that seasonal differences in storm size and frequency should also be reflected in seasonal variations in new water fractions. Figure 9a shows a scatterplot of tracer fluctuations in streamflow and precipitation, color-coded by season, for the flashy catchment simulation shown in Fig. 1. The regression lines (whose slopes define the event new water fractions for the corresponding seasons) show that tracer concentrations in streamflow and precipitation are more tightly coupled in winter and spring than in summer and autumn. Panels b–d of Fig. 9 demonstrate large variations in the event new water fraction , the new water fraction of discharge QFnew, and the forward new water fraction of precipitation PFnew from month to month, with a broad seasonal trend towards larger new water fractions in winter and spring. The month-to-month variations in the age-tracking results (the smooth curves) are usually quantified by the ensemble hydrograph separation estimates (the solid dots) within their calculated uncertainties (as shown by the error bars). Thus Fig. 9 suggests that ensemble hydrograph separation can be used to quantify how catchment transport behavior is shaped by seasonal patterns in precipitation forcing.

3.6 Effects of evaporative fractionation

Any analysis based on water isotopes must deal with the potential effects of isotopic fractionation due to evaporation (e.g., Laudon et al., 2002; Taylor et al., 2002; Sprenger et al., 2017; Benettin et al., 2018). A detailed treatment of evaporative fractionation would necessarily be site-specific and thus beyond the scope of this paper. Nonetheless, it is possible to make a simple first estimate of how much evaporative fractionation could affect new water fractions estimated from ensemble hydrograph separation. The benchmark model does not explicitly simulate evapotranspiration and its effects on the catchment mass balance, but the issue to be addressed here is different: how much could evaporative fractionation alter the isotope values measured in streamflow, and how could this affect the resulting estimates of new water fractions?

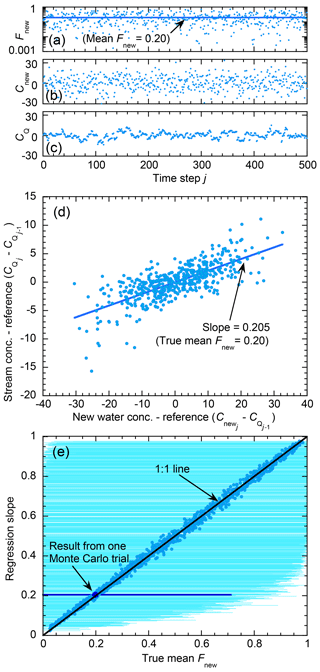

To explore this question, I first adjusted the isotope values of infiltration entering the model in Fig. 1 to mimic the effects of seasonally varying evaporative fractionation. I assumed that evaporative fractionation was a sinusoidal function of the time of year, ranging from zero in midwinter to 20 ‰ in midsummer. Thus I assumed that evaporative fractionation effectively doubled the seasonal isotopic cycle in the water entering the model catchment (but not in the sampled rainfall itself, since any fractionation that occurs before the rainfall is sampled will not distort the ensemble hydrograph separation). I then calculated new water fractions based on the time series of sampled precipitation tracer concentrations and of streamflow tracer concentrations (altered by the lagged and mixed effects of evaporative fractionation), and compared these to the true new water fractions calculated by age tracking within the model.

The results are shown in Fig. 10, which compares 1000 Monte Carlo trials with evaporative fractionation (the blue dots) and another 1000 Monte Carlo trials without evaporative fractionation (the gray dots). One can see that, in these simulations, evaporative fractionation leads to a slight tendency to underestimate new water fractions. Nonetheless, the blue and gray dots largely overlap, and both generally follow the 1:1 lines. These results are reassuring, because the modeled fractionation effects were designed to be a worst-case scenario, in the following sense. Because ensemble hydrograph separation is based on patterns of fluctuations in precipitation and streamflow tracers, any fractionation process that created a constant offset between inputs and outputs would introduce no bias. For the same reason, any fractionation process that was uncorrelated to the input isotopic signature would also introduce no bias; thus, for example, the modeled seasonal fractionation cycle would have had no effect if there were no seasonal pattern in the precipitation isotopes themselves. But because the seasonal fractionation cycle is correlated with the seasonal pattern in the precipitation isotopes, it can potentially bias the resulting estimates of new water fractions. The fact that these biases are small, as shown in Fig. 10, suggests that ensemble hydrograph separation should yield realistic estimates of new water fractions, even with substantial confounding by evaporative fractionation.

Figure 10Effects of seasonally varying evaporative fractionation on new water fractions estimated by ensemble hydrograph separation. Points show new water fractions predicted from tracer fluctuations in precipitation and streamflow (on the vertical axis), compared to averages of time-varying new water fractions determined by age tracking in the benchmark model (on the horizontal axis). Blue points show 1000 model runs in which precipitation undergoes seasonally varying evaporative fractionation ranging from zero in winter to 20 ‰ in summer. Gray background points show 1000 model runs without evaporative fractionation (analogous to Fig. 2). Each model run has a different random set of model parameters, measurement errors, and missing values, but the precipitation driver (Smith River daily precipitation) is the same in all cases. The blue data clouds closely follow the 1:1 line, indicating that ensemble hydrograph separation can reliably estimate new water fractions even in the presence of substantial evaporative fractionation.

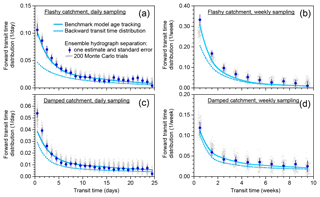

A natural extension of the approach outlined in Sect. 2 would be to quantify the contributions of precipitation to streamflow over a range of lag times: to quantify, in other words, the catchment transit time distribution. In principle this should be straightforward, although in practice several challenges must be overcome. Below, I describe these issues and outline techniques for addressing them. Readers who are not interested in the methodological details can proceed directly from Sect. 4.1 to 4.5, skipping over Sect. 4.2–4.4.

4.1 Definitions

I assume that catchment inputs and outputs are sampled at the same fixed time interval Δt and define the time that a parcel of water enters the catchment (via rainfall, snowmelt, etc.) as ti and the time that it exits via streamflow as tj. The lag interval between precipitation and streamflow is indexed as . Pi is the rate that precipitation or snowmelt (net of evaporative losses) enters the catchment at time ti, and Qj is the rate of discharge that exits the catchment at time tj. and are the tracer concentrations in precipitation and streamflow, respectively. The water flux that enters as precipitation at time ti and leaves as streamflow k time steps later (at time is represented as qj+k. The sum of qjk over all lag times k (corresponding to all previous entry times ) is the total discharge Qj. Each of the qjk will be a fraction of the total precipitation falling at time and a (typically different) fraction of the total discharge at time tj. The fraction of discharge exiting at time tj that entered k time steps earlier is qjk∕Qj, and the distribution of qjk∕Qj over lag time k yields the transit time distribution conditioned on the exit time tj (also called the “backward” transit time distribution). The fraction of precipitation entering at time ti that subsequently leaves as streamflow k time steps later is , and the distribution of over lag time k yields the transit time distribution conditioned on the entry time ti (also called the “forward” transit time distribution).

In practice, precipitation fluxes are typically measured as averages over discrete time intervals, and tracer concentrations in precipitation are likewise volume-averaged over discrete intervals (such as a day or a week) during which the sample accumulates in the precipitation collector. By contrast, discharge fluxes are typically measured instantaneously, and discharge tracer concentrations are typically measured in instantaneous grab samples. In most of what follows, I will assume that Pi and are averages over the interval , and Qj and are instantaneous values at t=tj. However, in a few catchment studies, discharge concentrations have instead been measured in time-integrated samples. The analysis presented below is the same, whether the discharge tracer concentrations are instantaneous at t=tj or are integrated over each time interval . The interpretation is slightly different, however, because the average lag time corresponding to a given lag interval k will depend on how precipitation and streamflow are sampled. Usually, streamwater samples are collected more or less instantaneously (grab sampling), and precipitation samples are integrated over the time interval that the sampler is open. A typical daily sampling scheme, for example, might involve collecting a precipitation sample at noon (which integrates precipitation that fell over the previous 24 h) and also collecting a grab sample of streamflow at noon. In this case, the average lag time between a raindrop falling as precipitation and being sampled in the same day's streamflow (i.e., k=0) would be 12 h, assuming that, on average, the probability of rainfall is independent of the time of day. Thus in this conventional sampling scheme, the average lag time will be (k+0.5)Δt, where Δt is the sampling interval. If, instead, the stream samples were daily composites, then (for example) the same-day raindrops appearing in the first hour's subsample of streamflow would have an average lag time of 30 min, the second hour's would be 60 min, and so forth, and therefore the daily average lag time would be 6 h. Thus if stream samples are time-integrated composites, the average lag time will be (k+0.25)Δt.

I now outline the fundamentals of the ensemble hydrograph separation approach to estimating transit time distributions. Conservation of water mass requires that the discharge at time step j equals the contributions from all lag times k (corresponding to all previous entry times ):

Because tracing contributions to streamflow from all previous time steps would be impractical, it will be necessary to truncate the summation in Eq. (31) at some maximum lag, which I will denote as m, and to combine the unmeasured older contributions in a water flux :

Conservation of tracer mass requires that the tracer fluxes add up similarly, again with a catch-all flux :

Dividing Eq. (33) by Qj and rearranging terms directly yields

which readers will recognize as the multi-lag counterpart of Eq. (7).

Analogous to the approach in Sect. 2, here I account for the concentration of older inputs using the streamflow concentration at lag m+1, just beyond the longest lag m, with the goal of filtering out long-term patterns that could otherwise distort the correlations between and . Thus serves as a reference level for measuring fluctuations in precipitation and streamflow tracer concentrations, analogous to in Eq. (8). Adding a bias term α and an error term εj yields

which almost looks like a conventional multiple linear regression equation,

where

with the difference that the coefficients βk in Eq. (36) are constant over all exit times j and differ only as a function of the lag time k, whereas the qjk∕Qj terms in Eq. (35) can differ among both lag times k and exit times j. Nonetheless, by analogy with the mathematical arguments in Appendix A and those at the end of Appendix B, one can expect that βk will closely approximate the average of the time-varying contributions qjk∕Qj to streamflow over the ensemble of exit times j (please note that this is not the same as assuming that the transit time distribution is time-invariant). Substituting βk as an ensemble estimate of qjk∕Qj, one obtains the ensemble hydrograph separation equation for estimating transit time distributions,

When appropriately rescaled as described in Sect. 4.5–4.7 below, the coefficients βk in Eq. (38) – or more precisely, their regression estimates – can be used to estimate the time-averaged (also sometimes called “marginal”) transit time distribution.

4.2 Solution method

Using Y to represent the vector of reference-corrected streamflow tracer concentrations and X to represent the matrix of reference-corrected input tracer concentrations , we can rewrite Eq. (38) in the array form of a multiple regression equation:

where Xk is the kth column vector of X, and ε is the vector of the errors εj. The least-squares solution for multiple regressions like Eq. (39) can be expressed in matrix form as

where the regression coefficients are the least-squares estimators of the true (but unknowable) coefficients βk. Equation (40) is the multidimensional counterpart to Eq. (10). The first term on the right-hand side of Eq. (40) is the inverse of the matrix of the covariances of the Xk at each lag with each other lag, and the second term is a vector of the covariances between Y and the Xk at each lag. Equation (40) is equivalent to the more widely known “normal equation” for solving multiple regressions,

if one first normalizes Y and each of the Xk by subtracting their respective means; doing so has no effect on the estimates of the regression coefficients . (The elements of the square matrix XTX are the covariances between the Xk's at each pair of lags, multiplied by the number of samples; likewise the elements of the column matrix XTY are the covariances between each of the Xk's and Y, multiplied by the number of samples.)

Astute readers will immediately notice a fundamental problem with applying Eqs. (40) or (41) in practice, namely that they require precipitation tracer concentrations for all time steps j=1 … n and lags k=0 … m. In every practical case, many precipitation tracer concentrations will be missing, for two reasons. Some tracer concentrations will be missing due to sampling or measurement failures, and many more will be inherently missing because precipitation tracer concentrations cannot exist for time steps without precipitation. As we will see shortly, missing measurements that arise for these two different reasons must be handled in two different ways. But regardless of its origins, each missing tracer concentration at time step i will create a diagonal line of missing values xj, k in the matrix X, causing a missing value in the first column (k=0) at j=i, and another in the second column (k=1) at , and so on up to the last column (k=m) at .

So-called “missing data problems” arise frequently in the statistical literature, and several approaches have been proposed for handling them (Little, 1992). One approach, termed “listwise deletion” or “complete-case analysis”, involves discarding all cases (meaning all rows j in the matrix X) in which any variables are missing and analyzing only the remaining (complete) cases. In our situation, this would mean analyzing only exit times tj that are preceded by unbroken series of rainy periods, up to the maximum lag m for which we want to estimate the coefficients . Such ensembles of points would be mathematically convenient, but they would also be very strongly biased in a hydrological sense, because they would represent periods of unusually consistent rainfall (and thus unusually wet catchment conditions). Furthermore, if the maximum lag m is sufficiently long, records with continuous rainfall over all m+1 lags (k=0 … m) will become impossible to find. For these reasons, complete-case analysis is not a feasible approach to our problem.

A second class of approaches to the missing data problem involves imputing values to the missing data (Little, 1992). In our case, however, many of the missing data are not simply unmeasured, but cannot exist at all (because rainless days have no rainfall concentrations), so it is not obvious how to impute the missing values.

A third approach, termed “pairwise deletion” or “available-case analysis”, first proposed by Glasser (1964), entails evaluating each of the covariances in Eq. (40) using any cases for which the necessary pairs of observations exist. Thus the covariances in Eq. (40) are replaced by

and

where the notation (kℓ) indicates terms that are evaluated over all cases j for which both xjk and xjℓ exist (e.g., is the mean of the column vector Xk for rows j where neither xjk nor xjℓ is missing, and n(kℓ) is the number of such cases), and (ky) indicates terms that are evaluated over all cases j for which xjk and yj exist.