the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Machine learning in stream and river water temperature modeling: a review and metrics for evaluation

Claudia Rebecca Corona

Terri Sue Hogue

As climate change continues to affect stream and river (henceforth stream) systems worldwide, stream water temperature (SWT) is an increasingly important indicator of distribution patterns and mortality rates among fish, amphibians, and macroinvertebrates. Technological advances tracing back to the mid-20th century have improved our ability to measure SWT at varying spatial and temporal resolutions for the fundamental goal of better understanding stream function and ensuring ecosystem health. Despite significant advances, there continue to be numerous stream reaches, stream segments, and entire catchments that are difficult to access for a myriad of reasons, including but not limited to physical limitations. Moreover, there are noted access issues, financial constraints, and temporal and spatial inconsistencies or failures with in situ instrumentation. Over the last few decades and in response to these limitations, statistical methods and physically based computer models have been steadily employed to examine SWT dynamics and controls. Most recently, the use of artificial intelligence, specifically machine learning (ML) algorithms, has garnered significant attention and utility in hydrologic sciences, specifically as a novel tool to learn undiscovered patterns from complex data and try to fill data streams and knowledge gaps. Our review found that in the recent 5 years (2020–2024), more studies using ML for SWT were published than in the previous 20 years (2000–2019), totaling 57. The aim of this work is threefold: first, to provide a concise review of the use of ML algorithms in SWT modeling and prediction; second, to review ML performance evaluation metrics as they pertain to SWT modeling and prediction to find the commonly used metrics and suggest guidelines for easier comparison of ML performance across SWT studies; and, third, to examine how ML use in SWT modeling has enhanced our understanding of spatial and temporal patterns of SWT and examine where progress is still needed.

- Article

(2609 KB) - Full-text XML

-

Supplement

(502 KB) - BibTeX

- EndNote

Water temperature in a stream or river plays a vital role in nature and society, regulating dissolved oxygen concentrations (Poole and Berman, 2001), biochemical oxygen demand rates, and chemical toxicities (Cairns et al., 1975; Patra et al., 2015). Additionally, SWT is an important indicator of cumulative anthropogenic impacts on lotic environments (Risley et al., 2010). Observations of SWT changes over time can reveal the effects of streamflow regulation, riparian alteration (Johnson and Jones, 2000), and large-scale climate change (Barbarossa et al., 2021) on local ecosystems. From an ecological standpoint, SWT strongly influences (Ward, 1998) the health, survival, and distribution of freshwater fish (Ulaski et al., 2023; Wild et al., 2023), amphibians (Rogers et al., 2020), and macroinvertebrates (Wallace and Webster, 1996). As climate change progresses, SWT will be an increasingly critical proxy for ecosystem health and function both locally and nationally.

1.1 SWT modeling in the 21st century

Technological advances since the turn of the 20th century have improved our ability to measure SWT in an affordable and dependable manner at varying spatial and temporal resolutions (Benyahya et al., 2007; Dugdale et al., 2017). Despite significant advances in the last 100 years, many stream reaches, stream segments, and entire catchments remain difficult to access for a myriad of reasons (Ouellet et al., 2020), including but not limited to the following: physical limitations, i.e., streams may be in private property, remote, or dangerous-to-access areas; financial constraints, i.e., access may be limited by monetary resources or lack thereof; and temporal limitations such as uncertainties and inconsistencies in the continuity of measurements or unforeseen equipment loss or failure (Webb et al., 2015; Isaak et al., 2017). In response to these limitations, statistical methods and physically based computer models have been steadily employed over the last few decades to support the advancement of scientific understanding of stream form and function as well as subsequent implications for water management (Cluis, 1972; Caissie et al., 1998; DeWeber and Wagner, 2014; Isaak et al., 2017).

Aided by the continued development of computers and the internet, physical and statistical computer models have gained prominence outside of academia and are more commonly being used by stakeholders and local groups to address a myriad of hydrology challenges (Maheu et al., 2016; Liu et al., 2018; Tao et al., 2020; Rogers et al., 2020). At the same time, the problem-solving success of machine learning (ML), which falls under the umbrella of artificial intelligence, has become increasingly popular in hydrologic sciences in the last few years (DeWeber and Wagner, 2014; Xu and Liang, 2021). Artificial intelligence (AI) describes technologies that can incorporate and assess inputs from an environment, learn optimal patterns, and implement actions to meet stated objectives or performance metrics (Xu and Liang, 2021; Varadharajan et al., 2022). As a subset of AI, the goal of ML algorithms and models is to learn patterns from complex data (Friedberg, 1958). A global call to better predict and prepare for near- and far-future hydrologic conditions has led researchers in the last few decades to use ML algorithms to model hydrologic processes at various temporal and spatial scales (Poff et al., 1996; Solomatine et al., 2008; Cole et al., 2014; Khosravi et al., 2023). For example, a type of ML called artificial neural networks (ANNs) have been used since the 1990s in many subfields of hydrology, such as streamflow predictions (Karunanithi et al., 1994; Poff et al., 1996), rainfall-runoff modeling (Hsu et al., 1995; Shamseldin, 1997), subsurface flow and transport (Morshed and Kaluarachchi, 1998), and flood forecasting (Thirumalaiah and Deo, 1998). For SWT modeling, however, the use of ML algorithms such as ANNs has only recently garnered interest (Zhu and Piotrowski, 2020).

1.2 Study objective

The current work includes an extensive literature review of studies that used ML algorithms and models for river and SWT modeling, hindcasting, and forecasting. The intent of this review is twofold: (1) to introduce ML for hydrologists who have modeling experience and are interested in pursuing ML use for their SWT studies and (2) to provide a broad overview of machine learning applications in SWT. For ML experts, we think that this review could also prove useful as a reference for how ML has been applied in the field of SWT modeling and where improvement is needed. Overall, this article aims to serve as a bridge between hydrologists and machine learning experts. Our review includes papers cited by Zhu and Piotrowski (2020), who previously conducted a study of ANNs used in SWT modeling; however, we provide a comprehensive examination of peer-reviewed journals that use any type of artificial intelligence or ML algorithm to model or evaluate river or SWT. This review's first objective is to provide a concise review of ML algorithm use in SWT modeling. Secondly, our goal is to examine the ML performance evaluation metrics used in SWT modeling and find the most-used metrics and suggest guidelines for clearer comparison of ML performance. The third objective is to discuss the community's use of ML to address physical system understanding in SWT modeling. Overall, this review aims to serve as a critical assessment of the state of SWT understanding given the increasing popularity of ML use in SWT modeling.

2.1 SWT statistical (also stochastic or empirical) models

In the 1960s, considerable interest grew in the prediction of SWT, particularly in the western United States (US) due to increased awareness of environmental quality issues (Ward, 1963; Edinger et al., 1968; Brown, 1969). The creation of large dams, daily release of heated industrial effluents, growing agricultural waste discharge, and forest clear-cutting could influence downstream SWT. However, the extent of such influence remained poorly understood and difficult to test at large spatial and temporal scales (Brown, 1969). From the 1960s to the 1970s, understanding of the relationship between SWT and ambient air temperature (AT) was solidified, and scientists began to increasingly use statistical methods to examine the air–water relationships in stream environments (Morse, 1970; Cluis, 1972). Statistical (also stochastic or empirical) models are governed by empirical relations between SWT and their predictors, which require fewer input data. An example of such progress took place in Canada, where researchers created an autoregressive model to calculate mean daily SWT fluctuations using 6 months of data from the summer and winter months of 1969 (Cluis, 1972). Cluis (1972) further said that their model was transferrable to other streams of comparable size. The use of statistical methods in SWT modeling became increasingly common in the latter half of the 20th century due in large part to minimal data requirements (Benyahya et al., 2007). For example, scientists in Europe used limited data and statistics to examine the influence of atmospheric and topographic factors on the temperature of a small upland stream (Smith and Lavis, 1975). In Australia, scientists interested in finding limits for reaches of streams downstream from thermal discharges found a simple method that could predict SWT based solely on site altitude and AT or upstream SWT (Walker and Lawson, 1977). In Canada, SWT was predicted using a stochastic approach, which included the use of Fourier series, multiple regression analysis, Markov processes, and a Box–Jenkins time series model (Caissie et al., 1998). In the 21st century, statistical methods continue to be a prominent tool used for SWT modeling and prediction (Ahmadi-Nedushan et al., 2007; Chang and Psaris, 2013; Segura et al., 2015; Detenbeck et al., 2016; Siegel and Volk, 2019; Ulaski et al., 2023; Fuller et al., 2023). We refer the reader to Benyahya et al. (2007) for a comprehensive review of SWT statistical models and approaches.

2.2 SWT physically based (also process-based, deterministic, mechanistic) models

While statistical methods can be straightforward to use and require minimal in situ data for first analysis (Benyahya et al., 2007), limitations and uncertainty with regards to SWT predictions are possible, specifically when trying to understand the controls of the energy transfer mechanisms responsible for trends (Dugdale et al., 2017). To address these shortcomings and with the introduction of personal computers in the late 1960s (Dawdy and Thompson, 1967), researchers developed computer models and software programs that tried to address the more fundamental hydrology questions founded in physics and natural processes (Theurer et al., 1984; Bartholow, 1989). One example of such progress was an SWT prediction one-dimensional computer model that used a simplified energy conservation equation to predict SWT for the upper reaches of the Columbia River in the Pacific Northwest of the US during July 1966 (Morse, 1970). These models are described as being physically based or process-based (alternatively called “deterministic” or “mechanistic” models).

Due to the continued lack of sufficient in situ observations and resources with which to undertake field studies in SWT science (Dugdale et al., 2017), physically based models became increasingly used. From the end of the 20th century through the present, they are considered one of the best available options in generating predictions of SWT, particularly at a localized scale (Dugdale et al., 2017). Physically based models became useful enough that government agencies introduced their own models to encourage uniformity. In the 1980s, the US Geological Survey (USGS) introduced a physically based model that simulated SWT called SNTemp (Theurer et al., 1984; Bartholow, 1989). A few years later, the US Environmental Protection Agency (EPA) introduced SHADE-HSPF for similar purposes (Chen et al., 1998a, b). Where available, academic scientists coupled field measurements with physically based numerical models. For example, scientists in Minnesota created a numerical model, called MNSTREM, based on a finite-difference solution of the nonlinear equation to predict SWT at 1 h increments for the Clearwater River (Sinokrot and Stefan, 1993). Similarly, academic scientists in Canada introduced CEQUEAU, a water balance type of model which incorporated vegetation and soil characteristics to solve for SWT (St-Hilaire et al., 2000). Physically based models became commercially available in the 2000s, one example being the MIKE suite of models, which were created to solve the heat and advection–dispersion equation to simulate both surface and subsurface water dynamics, created by the DHI consulting group (Jaber and Shukla, 2012; Loinaz et al., 2013). In addition to the models mentioned, over a dozen more physically based models were created and used between 1990 and 2017 (Dugdale et al., 2017). For a more detailed review of physically based SWT models, we refer the reader to Dugdale et al. (2017).

2.3 Artificial intelligence models in SWT modeling

Initial discussion of artificial intelligence can be traced back to 1943, when McCulloch and Pitts presented a computer model that functioned like neural networks of the brain (McCulloch and Pitts, 1943). In 1958, R.M. Friedberg published A Learning Machine: Part 1 in IBM's Journal of Research and Development, one of the first to describe the concept of “machine learning”. Friedberg hypothesized that machines could be taught how to learn such that they developed the capability to improve their own performance to the point of completing tasks or meeting objectives (Friedberg, 1958). Sixty years later, ML has grown as a field of study in academia and as an area of great interest in society, the latter due in large part to the popularity of large language models (a type of machine learning that we will not discuss here), such as ChatGPT (OpenAI, Inc., 2025), Copilot (Microsoft, Inc., 2025), and Gemini (Google, 2025).

In the last decade, computing advances in AI have started to offer several advantages for using machine learning (ML) in hydrology that are comparable to physically based models (Cole et al., 2014; Rehana and Rajesh, 2023). In contrast to traditional physically based models, the code underlying ML models is generally open-source and publicly available, allowing for near-real-time accessible advances and user feedback, whereas the source code for some physically based models may be inaccessible to the public due to being privately managed (MIKE suite of models), or the model software may be publicly available but could take years to publish updates (USGS MODFLOW, Simunek's HYDRUS). One advantage that has made ML increasingly appealing includes its ability to learn directly from the data (i.e., data-driven), which can be useful when the underlying physics are not fully understood or are considered too complex to model accurately.

Additionally, ML models are more efficient in making predictions compared to the time-intensive solvers of physically based models. ML models can also handle the challenge of scalability, which means managing large datasets and seamlessly deploying across various computer platforms and applications (Rehana and Rajesh, 2023). Air2stream, a hybrid statistical–physically based SWT model (Toffolon and Piccolroaz, 2015; Piccolroaz et al., 2016), initially outperformed earlier ML models such as Gaussian process regression (Zhu et al., 2019a). However, in the last few years, Air2stream has had its performance matched and even exceeded by recent neural network models (Feigl et al., 2021; Rehana and Rajesh, 2023)

Finally, with computer processing power improving and the emergent field of quantum computing, there is a strong belief that using ML and by extension AI in science applications will drive innovation to the point where natural patterns and insights not currently apparent in physical modeling will be uncovered (Varadharajan et al., 2022). Thus, while physically based models are considered invaluable for their interpretability and grounding in established physics, ML models have the potential for growth in various fields of hydrology, where they can be used to first complement and eventually lead as powerful tools for prediction, optimization, and understanding in increasingly complex and data-rich environments.

For this review, we differentiate between traditional ML and newer ML, where the former includes approaches that have been used for decades in hydrologic modeling, i.e., cluster analysis, support vector machine, and shallow neural networks. We define newer ML as that introduced in hydrologic modeling in recent years, such as the deep learning long short-term memory NNs, extreme learning machine, and ML hybridizations. The following sections provide an overview of ML types and learning techniques. Finally, we assume that readers have a very basic understanding of the differences between machine learning types such as supervised, semi-supervised, and unsupervised learning and refer the reader to Xu and Liang (2021) for a nice overview.

2.3.1 Traditional ML algorithms

K-nearest neighbors

K-nearest neighbors (K-nn) is a versatile supervised ML algorithm (Fix and Hodges, 1952; Cover and Hart, 1967) used to solve nonparametric classification and regression problems. The K-nn algorithm uses proximity between data points to make classifications or evaluations about the grouping of any given data point. K-nn gained popularity in the 2000s due to its simplicity in implementation and understanding, making it readily accessible to hydrologic researchers and practitioners. For example, St.-Hilaire et al. (2011) used various K-nn model configurations to model SWT for the Moisie River in northern Quebec, Canada, finding that the best K-nn model required prior-day SWT data and day of year (DOY), an indicator of seasonality. Advantages of K-nn include its non-assumptions of the underlying distribution of the data, allowing it to handle nonlinear complexities without requiring a solid model structure as is the case for some physical models (St-Hilaire et al., 2011). There are some disadvantages of K-nn: it is computationally intensive and may require extensive cross-validation; performance can be affected by irrelevant and/or redundant features; and due to its high memory and computational needs it is impractical for large-scale applications, i.e., scalability issues (Acito, 2023). For example, Heddam et al. (2022b) compared K-nn with other ML algorithms, finding that K-nn was outperformed by other MLs such as least-squares support vector machine and neural networks. The use of K-nn may still be reasonable for simple, local cases but we suggest other MLs for more complex or larger use cases.

Cluster analysis and variants

Cluster analysis is a category of unsupervised ML methods used to create groups from an unlabeled dataset. Clustering methods use distance functions such as Euclidean distance, Manhattan distance, Minkowski distance, cosine similarity, and others to group data into clusters (Irani et al., 2016). The analysis separates data into groups of maximum similarity, while also trying to minimize the similarity from group to group (Xu and Liang, 2021). In SWT modeling, studies have used cluster analysis to try a reduction of a dataset prior to assessment (Voza and Vuković, 2018) and/or to find spatiotemporal patterns in a dataset (Krishnaraj and Deka, 2020). Another popular clustering technique is discriminant analysis, which tries to find parameters that are most significant for temporal differentiation between rendered periods (Voza and Vuković, 2018). K-means, a type of unsupervised ML, is a clustering algorithm that finds k number of centroids in the dataset and distributes each respective data value to the nearest cluster while keeping the smallest number of centroids possible (Krishnaraj and Deka, 2020). Krishnaraj and Deka (2020) used K-means to organize spatial grouping for water quality monitoring stations for dry and wet regions along the Ganges River basin in India to identify whether pollution patterns could be discerned.

While cluster analysis and discriminant analysis are generally used to reduce datasets, another technique, the principal component analysis (PCA) (or factor) test, is applied to assess dominant factors in datasets. Mathematically, principal component analysis (PCA) is a statistical unsupervised ML technique that uses an orthogonal transformation (a linear transformation that preserves lengths of vectors and angles) to convert a set of variables from correlated to uncorrelated (Krishnaraj and Deka, 2020). Using PCA, Krishnaraj and Deka (2020) found that certain water quality parameters (dissolved oxygen, sulfate, electrical conductivity) were more dominant in the dry season compared to the wet season (total dissolved solids, sodium, potassium, sodium, chlorine, chemical oxygen demand), data which could be used to cater the monitoring program to the important parameters. SWT was not a dominant parameter, likely in part because the SWT values of large downstream rivers like the Ganges are generally less variable due to their larger volume and stronger thermal buffer.

Support vector machine and regression

Support vector machine (SVM) is a supervised learning technique used for classification, regression, and outlier detection. The aim of SVM is to find a hyperplane (or the decision surface) in an N-dimensional space (N is the number of features) that best separates labeled categories, or support vectors (Cortes and Vapnik, 1995). One of the advantages of SVM is that it seeks to minimize the upper bound of the generalization error instead of the training error (Cortes and Vapnik, 1995). A big disadvantage is that it does not perform well with large datasets due to the likelihood of greater noise, which would cause support vectors to overlap, making classification difficult. For a more detailed explanation of SVM, we refer the reader to Cortes and Vapnik (1995) and Xu and Liang (2021). In the last few decades, SVM has been coupled with other ML models to find the best-performing models for short-term water quality predictions (Lu and Ma, 2020) and daily SWT modeling (Heddam et al., 2022b). For example, Heddam (2022b) used least-squares SVM (LSSVM), a variant of SVM which takes a linear approach (instead of quadratic-like SVM), to reach a solution (Suykens and Vandewalle, 1999).

A version of SVM used for regression tasks is support vector regression (SVR). SVR attempts to minimize the objective function (composed of loss greater than a specified threshold) and a regularization term (Rehana, 2019; Hani et al., 2023). For further detail on SVR, we refer the reader to Rehana (2019) and Hani et al. (2023). Using historical data, SVR has been compared with other ML models that evaluate SWT variability due to climate change (Rehana, 2019), finding temperature increases less pronounced in the SVR model. Jiang et al. (2022) compared SVR to other ML models to forecast SWT in cascade-reservoir-influenced rivers. For the cascade-reservoir-operation-influenced study, SVR was outperformed by random forest (RF) and gradient boosting (Jiang et al., 2022). Focusing on 78 catchments in the mid-Atlantic and Pacific Northwest hydrologic regions of the US, researchers used SVR and an ML algorithm called XGBoost to predict monthly SWT (Weierbach et al., 2022), finding that SVR significantly outperformed traditional statistical approaches such as multi-linear regression (MLR) but did not outperform XGBoost. In addition, the SVR models had the highest accuracy for SWT across different catchments (Weierbach et al., 2022). In Quebec, Canada, a comparison of four ML models that estimated hourly SWT showed that SVR outperformed by RF (Hani et al., 2023).

A lesser-known form of SVM is its extended form, called relevance vector machine (RVM). RVM is a form of supervised learning that uses a Bayesian framework to solve classification and regression problems (Tipping, 2001). Locally weighted polynomial regression (LWPR) is a form of supervised ML (Moore et al., 1997) used for learning continuous nonlinear mappings from real-valued (i.e., functions whose values are real numbers) inputs and real-valued outputs. LWPR works by adapting the model locally to the respective data points, assigning different weights to different data points based on data point proximity to the target (Moore et al., 1997). This type of regression is best employed when the variance around the regression line is not constant, thereby suggesting heteroscedasticity.

Gaussian process regression and generalized additive models

Gaussian process regression (GPR) is a type of nonparametric supervised learning method used to solve regression problems. As a Bayesian approach, GPR assumes a probability distribution over all functions that fit the data. GPR is specified by a mean function and covariance kernel function which reflect prior knowledge of the trend and level of smoothness of the target function (Xu and Liang, 2021). One of GPR's advantages is the model's ability to calculate empirical confidence intervals, allowing the user to consider refitting predictions to areas of interest in the function space (Grbić et al., 2013). For more details on GPR, we refer the reader to Xu and Liang (2021). Grbić et al. (2013) used GPR for SWT modeling of the river Drava, Croatia, where model no. 1 estimated the seasonal component of SWT fluctuations and model no. 2 estimated the shorter-term component (Grbić et al., 2013). A separate study for the river Drava used three variations of GPR to model SWT, finding that GPR was outperformed by the physically based, stochastically calibrated model, Air2stream (Zhu et al., 2019). More recently, Majerska et al. (2024) used GPR to simulate SWT for a non-glaciated arctic catchment, Fuglebekken (Spitsbergen, Svalbard). Using GPR and another model, the authors identified a diurnal warming trend of 0.5–3.5 °C per decade through the summer season, implying a warming thermal regime in the Fuglebekken catchment (Majerska et al., 2024).

Generalized additive models (GAMs) are a type of semi-parametric, nonlinear model with a wide range of flexibility, allowing the model to analyze data without assuming relations between inputs and outputs (Hastie and Tibshirani, 1987). Where GPR uses a probabilistic approach, GAM uses smoothing functions (i.e., splines) to model the relationship between a predictor variable and response variable. GAMs have been used to model SWT for the Sainte-Marguerite River in eastern Canada (Laanaya et al., 2017; Souaissi et al., 2023; Hani et al., 2023). Hani et al. (2023) used GAMs to identify potential thermal refuge areas for Atlantic salmon in two tributary confluences using sub-hourly observations.

Decision trees and classification and regression trees

Decision trees (DTs) are a nonparametric, supervised learning technique. DTs can make predictions or decisions based on a set of input features and are likely to be more accurate where the problem can be solved in a hierarchical sequence of decisions (Breiman, 2001). Classification and regression trees (CARTs) are a specific type of algorithm that builds decision trees, where the internal node in the tree splits the data into two branches (sub-nodes) based on the specified decision rule (Loh, 2008). While CART can quickly find relationships between data, it is prone to overfitting and can be statistical unstable, where a small perturbation in the training data could negatively affect the output of the tree (Hastie et al., 2001; Xu and Liang, 2021). For a detailed explanation of DT and CART, we refer the reader to Hastie et al. (2001), Loh (2008), and Xu and Liang (2021). An SWT modeling study comparing the output of three model versions of DT, GPR, and feed-forward neural networks for multiple sites found that DTs could perform similarly to GPR and feed-forward neural networks when detailed statistics of air temperature, day of year, and discharge were included (Zhu et al., 2019a). However, when comparing daily SWT results from DTs with gradient boosting (GB) or random forest (RF), DTs generally underperform (Anmala and Turuganti, 2021; Jiang et al., 2022). Recent studies have compared CART with other ML algorithms to model water quality parameters (including SWT), finding that CART underperformed due to overfitting compared to RF (Souaissi et al., 2023) and extreme learning machine (ELM) (Heddam et al., 2022a). To combat the problem of overfitting that can occur using decision trees, the idea of using multiple trees by bootstrap aggregation (i.e., bagging) has gained interest.

Random forests and XGBoost

RF and XGBoost have been used to predict daily SWT prediction for Austrian catchments,with results showing minor differences in model performance, with a median RMSE difference of 0.08 °C between tested ML models (Feigl et al., 2021). Using RF and XGBoost along with four other ML models, Jiang et al. (2022) estimated daily SWT below dams in China, finding day of year, streamflow flux, and AT to be most influential in the prediction of SWT (Jiang et al., 2022). Weierbach et al. (2022) used XGBoost and SVR to predict SWT at monthly timescales for the Pacific Northwest region of the US, showing that an ensemble XGBoost outperformed all modeling configurations for spatiotemporal predictions in unmonitored basins, with AT identified as the primary driver of monthly SWT. Zanoni et al. (2022) used RF and a deep learning model to develop regional models of SWT and other water quality parameters, with RF performance comparatively less effective at detecting nonlinear relationships than the deep learning model, though both models identified AT as most influential (Zanoni et al., 2022).

Souaissi et al. (2023) tested the performance of RF and XGBoost with nonparametric models for the regional estimation of maximum SWT at ungaged locations in Switzerland, finding no significant differences between the ML performance and the nonparametric model performances, which was attributed to the lack of a large dataset. Hani et al. (2023) used four supervised ML models – MARS, GAM, SVM, and RF – to model potential thermal refuge area (PTRA) at an hourly time step for two tributary confluences of the Sainte-Marguerite River in Canada. RF had the highest accuracy at both locations in terms of hourly PTRA estimates and modeling SWT (Hani et al., 2023). Wade et al. (2023) conducted a CONUS-scale study using 410 USGS sites with 4 years of daily SWT and discharge to examine maximum SWT. They used RF to estimate max SWT and thermal sensitivity (Wade et al., 2023), finding that AT was the most influential control followed by other properties (watershed characteristics, hydrology, anthropogenic impact).

2.3.2 Traditional artificial neural networks (ANNs)

An artificial neural network (ANN) is a type of ML algorithm inspired by biological neural networks in the brain (McCulloch and Pitts, 1943; Hinton, 1992). ANNs learn from data provided and improve on their own to progressively extricate higher-level trends or relationships within the given dataset (Hinton, 1992). Currently, ANNs are capable of data classification, pattern recognition, and regression analysis. Considered robust, ANNs can undergo supervised, unsupervised, semi-supervised, and reinforcement learning. The first study that utilized ANNs specifically for SWT modeling was published around the year 2000. The work was done by researchers interested in hindcasting SWT for a river in Canada for a 41-year period dating back to 1953 (Foreman et al., 2001). Since 2000, various types of ANNs have been increasingly used to model SWT at various sites at hourly, daily, and monthly time steps. For more detail on traditional ANNs, with descriptions of ANN variants and back-propagation alternatives, we refer the reader to Appendix A.

2.3.3 Newer and recent ML algorithms

We define newer and recent ML algorithms as those introduced or reintroduced in the last decade for SWT modeling. These ML algorithms include deep (i.e., increased layers) ANNs such as recurrent neural networks (RNNs), convolutional neural networks (CNNs), extreme learning machine (ELM), ML hybridizations, and subsets.

A “deep” neural network (DNN) has three or more hidden layers, MLPNNs being one such example. The purpose of added layers is to serve as optimizations for greater accuracy. Due to their complex nature, DNNs need extensive time spent solely on training the network on the input data (Abdi et al., 2021). Convolutional neural networks (CNNs) are FFNNs used to recognize objects and patterns in visual data (LeCun et al., 1989, 2004). CNNs have convolutional layers, which hold one or more filters that calculate a local weighted sum as they analyze the input data. A CNN filter is a matrix (rows and columns) of randomized number values that convolves (i.e., moves) through the pixels of an image, taking the dot product of the matrix of values in the filter and the pixel values of the image. The dot product is used as input for the next convolutional layer. To ensure adequate performance, CNNs must be trained with examples of correct output in the form of labeled training data and should be calibrated (i.e., adjusting filters, implement loss functions) to optimize performance (Krizhevsky et al., 2012). For more detail on CNN, we refer the reader to LeCun et al. (2004), Krizhevsky et al. (2012), and Xu and Liang (2021). A disadvantage of CNNs is that they are not ideal for interpreting temporal or sequential information or data that require learning from past data to predict future output. For interpreting temporal information or sequential data, recurrent neural networks are preferred.

Unlike FFNNs, recurrent neural networks (RNNs) work in a chain-link nature that allows them to loop (i.e., keep) previously handled data for use in a present task to make better predictions (Hochreiter and Schmidhuber, 1997). The RNN architecture is better equipped (and preferred) to handle temporal (i.e., time series) or sequential (i.e., a video is a sequence of images) data due to their ability to learn from their past (Bengio et al., 1994). The Elman neural network (ELM-NN) is a type of RNN where the hidden layer (bi-directionally connected to the input layer and output layer) stores contextual information of the input that it sends back to the input layer with sequential time steps (Elman, 1990).

However, one of the issues that persists in RNNs is that there is a limit to how far back RNNs can access past data to make better predictions. This is described as the problem of long-term dependencies, also known as the vanishing gradient problem. The vanishing gradient problem is due to back-propagated gradients that can grow or shrink at each time step, increasing instability until the gradients “explode” or “vanish” (Bengio et al., 1994; Hochreiter and Schmidhuber, 1997). Hochreiter and Schmidhuber (1997) introduced the long short-term memory (LSTM) model, a type of RNN explicitly designed to overcome the vanishing gradient problem. The LSTM architecture includes three gates (input, forget, and output gates) that control the flow of information in and out of the cell state, allowing the ANN to store and access data over longer time periods. In the last few decades, LSTMs have improved and variations introduced (Gers and Schmidhuber, 2000; Cho et al., 2014; Yao et al., 2015), and many have been cross-compared, with findings showing similar performance across LSTMs (Greff et al., 2016). In the last few years, LSTMs and their variations have been revisited and employed in hydrologic studies to examine possible relationships in time series data (Shi et al., 2015; Shen, 2018; Kratzert et al., 2018, 2019). For example, Sadler et al. (2022) used an LSTM model to multi-task, i.e., predict two related variables – streamflow and SWT. Their argument for forcing an LSTM to multi-task is that if two variables are driven by the same underlying physical processes, a multi-tasking LSTM could more holistically represent shared hydrologic processes and thus better predict the variable of interest. Their LSTM model consisted of added components: specifically, two parallel, connected output layers that represented streamflow output and SWT output (Sadler et al., 2022). Overall, using the multi-tasking LSTM improved accuracy for half the sites, but for those sites with marked improvement, more calibration was needed to reach improvement (Sadler et al., 2022).

Another type of NN is the graph neural network (GNN), which is used for representation learning (unsupervised learning of feature patterns) of graphed data (Bengio et al., 2013), where a “graph” denotes the links between a collection of nodes. At each graph node or link, information in the form of scalars or embeddings can be stored, making them very flexible data structures. Example of graphs that we interact with regularly are images, where each pixel is a node and is linked to adjacent pixels. A stream network is also an example of a graph, albeit a directed graph, which is a graph in which the links (also called “edges”) have direction. Two examples of recent GNNs are recurrent graph convolution networks (RGCNs) and temporal convolution graph models (TCGMs). The RGCN utilizes LSTM network architecture (i.e., use of forget, input, output gates) for temporal recognition (Topp et al., 2023). In contrast to RGCN, TCGM uses 1D convolutions (i.e., input a three-dimensional object and output a three-dimensional object), pooling, and channel-wise normalization to capture low-, intermediate-, and high-level temporal information in a hierarchical manner (Lea et al., 2016). An example that utilizes this approach is Graph WaveNet (Wu et al., 2019), which has been used in spatial-temporal modeling of SWT (Topp et al., 2023). According to Topp et al. (2023), the temporal convolutional structure of Graph WaveNet is more stable in the gradient-based optimization process in contrast to the possible gradient explosion problem that the LSTM in the RGCN could experience.

While present studies continue to use ML models as standalones to evaluate SWT predictions, other studies have coupled modern ML with non-ML models to examine whether such combinations improve model performance (Graf et al., 2019; Qiu et al., 2020; Rehana and Rajesh, 2023). For example, Graf et al. (2019) coupled four discrete wavelet transform (WT) techniques with MLPNN to predict SWT for eight stations on the Warta River in Poland. For reference, WT is widely applied for the analysis and denoising of information (signals) and images both over time and on a domain scale (frequency). The unique characteristic of a wavelet neural network (WNN) is the use of the WT as the activation function in the hidden layer of the NN (Qiu et al., 2020). Zhu et al. (2019) coupled WT with MLPNN and ANFIS to evaluate daily SWT at two stations on the river Drava in Croatia and separately compared the WT-ML coupling with MLR. The study found that the combination of WT and ML improved performance compared to the standalone models (Zhu et al., 2019d). A recent ML approach called differentiable modeling incorporates physics into ML modeling frameworks, where the basic model structure and parameters of a process-based model are inserted into an ANN to estimate parameters or replace existing process descriptions (Rahmani et al., 2023). Rahmani et al. (2023) examined model components that could improve an LSTM model's ability to better match model predictions to field observations. From their study, Rahmani et al. (2023) found that adding a separate shallow subsurface flow component to the LSTM model and a recency-weighted averaging of past air temperature for calculating source SWT resulted in improved predictions (Rahmani et al., 2023).

Attention-based transformers are a more novel type of deep learning that has led to advancements in natural language processing, in the form of ChatGPT, Microsoft's CoPilot, Google's Gemini, and others. Due to their exponential success in the last few years, attention-based transformer models have been used in geological science fields such as oceanography for sea surface temperature prediction (Shi et al., 2015), hydrology for streamflow and runoff prediction (Ghobadi and Kang, 2022; Wei, 2023), and remote sensing for streambed land use change classification (Bansal and Tripathi, 2024). As a relatively new AI tool, attention-based transformers have yet to be used for SWT (to our knowledge), but their applications in other geological science fields suggest it is only a matter of time before their use is observed in SWT modeling.

2.4 SWT predictions using ML

2.4.1 Identifying model complexity

The strong success of ML use in SWT modeling warrants a brief and broad overview on identifying model complexity to minimize overfitting and underfitting of models. When a model is too complex, i.e., has too many features or parameters relative to the number of observations, or is forced to overextend its capabilities, i.e., to make predictions with insufficient training data, the model runs the risk of overfitting (Srivastava et al., 2014). An overfitted model fits the training data “too well”, capturing noise and details that provide high accuracy on a training dataset, only to perform poorly once the model encounters “unseen” data in testing and validation (Xu and Liang, 2021). Scenarios where overfitting may be temporarily acceptable are (1) model development is at preliminary stages, such as a “proof of life” concept; (2) when the objective is to identify heavily relied on features by the model, i.e., feature importance; or (3) in highly controlled modeling environments where the expected data will be consistently similar to the training dataset. The latter is more likely in industrial applications and unlikely in the changing nature of hydrology.

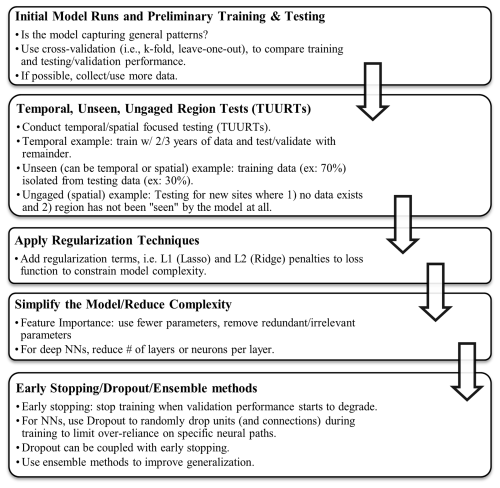

In contrast, underfitting occurs when a model is too simple to capture any patterns in the data, which can also lead to unsatisfactory performance in training, testing, and validation. Underfitting can occur with inadequate model features and poor model complexity or when regularization techniques (e.g., L1 or L2 regularization) are over-used, making the model too rigid and unable to respond to changes in the data. Given the propensity of ML models to effectively learn the training data, underfitting is less of an issue in ML, whereas overfitting can be widespread. In Fig. 1, we present an example workflow that researchers can use to transition away from overfitting and towards model generalizability. In the five-step outline (Fig. 1), we suggest the need for “temporal, unseen, ungaged region tests” (TUURTs) in SWT ML modeling. The idea behind TUURTs has been applied for decades in SWT process-based (Dugdale et al., 2017) and statistically based models (Benyahya et al., 2007; Gallice et al., 2015) to improve SWT model robustness. In TUURTs, testing for unseen cases means testing only within the developmental dataset, whereas testing for “ungaged” cases means testing for new sites that have no data and have not been previously seen by the model at all. Some statistically based models, such as DynWat (Wanders et al., 2019) and the Pacific Northwest (PNW) SWT model (Siegel et al., 2023), have tested for ungaged regions and unseen data. In the last few years, ML-SWT studies have begun applying TUURTs (Hani et al., 2023; Rahmani et al., 2020, 2021, 2023; Souaissi et al., 2023; Topp et al., 2023; Philippus et al., 2024a) but more ML-SWT studies need to apply these tests to improve user confidence in extrapolation capability. We further encourage researchers to shift towards more generalizable models, which are in theory more capable of performing well across diverse scenarios and datasets and stand to become increasingly important with the unpredictability of climate extremes.

2.4.2 Model inputs for ML-SWT

Using air temperature (AT) to better understand SWT has been considered since the 1960s, when Ward (1963) and Edinger et al. (1968) discussed the influence of air temperature on SWT. Since then, various input variables have been tested (see Table S1); however, the model inputs of AT and SWT continue to be the most used in ML modeling studies. For example, studies have used AT from time periods outside of the known SWT record to improve ML model performance (Sahoo et al., 2009; Piotrowski et al., 2015; Graf et al., 2019). In addition to AT and SWT, flow discharge has been used to attempt to constrain SWT (Foreman et al., 2001; Tao et al., 2020; St-Hilaire et al., 2011; Grbić et al., 2013; Piotrowski et al., 2015; Graf et al., 2019; Qiu et al., 2020). Other model inputs include precipitation (Cole et al., 2014; Jeong et al., 2016; Rozos, 2023), wind direction and speed (Hong and Bhamidimarri, 2012; Cole et al., 2014; Jeong et al., 2016; Kwak et al., 2016; Temizyurek and Dadaser-Celik, 2018; Abdi et al., 2021; Jiang et al., 2022), barometric pressure (Cole et al., 2014), landform attributes (Risley et al., 2003; DeWeber and Wagner, 2014; Topp et al., 2023; Souaissi et al., 2023), and many more (see Table S1).

In the last few years, including the day of year (DOY) as an input (Qiu et al., 2020; Heddam et al., 2022a; Drainas et al., 2023; Rahmani et al., 2023) and humidity (Cole et al., 2014; Hong and Bhamidimarri, 2012; Kwak et al., 2016; Temizyurek and Dadaser-Celik, 2018; Abdi et al., 2021) has also been shown to better capture the seasonal patterns of SWT (Qiu et al., 2020; Philippus et al., 2024a). With improved access to remote sensing data, there has also been a notable increase in satellite product inputs such as estimates of sky cover (Cole et al., 2014), solar radiation (Kwak et al., 2016; Topp et al., 2023; Majerska et al., 2024), sunshine per day (Drainas et al., 2023), and potential evapotranspiration or ET (Rozos, 2023; Topp et al., 2023). However, more research is needed to better understand the influence of newer model inputs on SWT (Zhu and Piotrowski, 2020).

Recently, SWT studies focused on the CONUS scale have chosen to use as many model inputs as available, with Wade et al. (2023) using a point-scale CONUS ML study using over 20 variables, while Rahmani et al. (2023) created an LSTM model and considered over 30 variables to simulate SWT. Despite the use of diverse data, the models in these studies performed only satisfactorily and were deemed not generalizable, leaving much room for improvement in CONUS-scale modeling of SWT. With the compilation of larger and larger datasets, feature importance in ML, which is the process of using techniques to assign a score to model input features based on how good the features are at predicting a target variable, can be an efficient way to improve data comprehension, model performance, and model interpretability, the latter of which can dually serve as a transparency marker of which features are driving predictions. Methods for measuring feature importance include using correlation criteria (Pearson's r, Spearman's ρ), permutation feature importance (shuffling feature values, measuring decrease in model performance), and linear regression feature importance (larger absolute values indicate greater importance); if using CART/RF/gradient boosting, entropy impurity measurements can be insightful (Venkateswarlu and Anmala, 2023).

For example, one technique that can be used to improve ML model parameter selection is the Least Absolute Shrinkage and Selection Operator (LASSO), a regression technique used for feature selection (Tibshirani, 1996). Research utilizing ML models for SWT frequency analysis at ungaged basins used the LASSO method to select explanatory variables for two ML models (Souaissi et al., 2023). The LASSO method consists of a shrinkage process where the method penalizes coefficients of regression variables by minimizing them to zero (Tibshirani, 1996). The number of coefficients set to zero depends on the adjustment parameter, which controls the severity of the penalty. Thus, the method can perform both feature selection and parameter estimation, an advantage when examining large datasets (Xu and Liang, 2021).

2.4.3 Local: single rivers, site-specific (≤100 km2)

SWT predictions using ML have extended from the local scale to nearly continental scales over the last 24 years. One of the first studies to use a neural network to estimate SWT using an MLPNN was done by Sivri et al. (2007), who predicted monthly SWT for Firtina Creek in Türkiye, a novel approach at the time. While the MLPNN model R2∼0.78 was not very good, the proof of concept was a success (Sivri et al., 2007). Chenard and Caissie (2008) used eight ANNs to calculate daily and max SWT for Catamaran Brook, a small drainage basin tributary to the Miramichi River in New Brunswick, Canada, for the years 1992 to 1999. Their ANN models performed best in late summer and autumn and performed comparatively to stochastic models for the same watershed (Chenard and Caissie, 2008). In 2009, Sahoo et al. (2009) compared an ANN, multiple regression analysis, and dynamic nonlinear chaotic algorithms (Islam and Sivakumar, 2002) to estimate SWT in the Lake Tahoe watershed area in along the California–Nevada border within the US. Their ANN models included available solar radiation and air temperature, with results showing a variation of the BPNN as having the best performance (Sahoo et al., 2009).

Hadzima-Nyarko et al. (2014) used a linear regression model, a stochastic model, and variations of two NNs – MLP (six variations) and RBF (two variations) – to compute and compare SWT predictions for four stations on the river Drava, along the Croatia–Hungary border in southern central Europe. While their ANN models performed better than the linear regression and stochastic models, a comparison of their NN models found that one of their six MLPNN variations barely outperformed the RBFNN, with a difference in RMSE of 0.0126 °C, within the margin of error. The authors stated that apart from the current mean AT, the daily mean AT of the prior 2 d and classification of the day of the year (DOY) were significant controls of the daily SWT (Hadzima-Nyarko et al., 2014). Rabi et al. (2015) conducted a study using the same gage stations on the river Drava using only AT as a predictor and restricted the use of NNs to only MLP, finding that the MLPNN outperformed the linear regression approaches (Rabi et al., 2015).

Cole et al. (2014) tested a suite of models including an FFNN to predict SWT downstream of two reservoirs in the upper Delaware River, in Delaware, US. During training, the FFNN was outperformed by an Auto Regressive Integrated Moving Average (ARIMA) model and performed similarly to the physically based Heat Flux Model (HFM) (Cole et al., 2014). During testing, the FFNN, ARIMA, and HFM models performed similarly, with HFM being slightly more accurate due to its advantage as a physically based model with data availability and calibration potential (Cole et al., 2014). The authors suggest that the under- or overpredictions of the models may have been from unaddressed groundwater inputs or unaccounted for nonlinear relationships (Cole et al., 2014). Hebert et al. (2014) focused on the Catamaran Brook area (like Chenard and Caissie, 2008) and included the Little Southwest Miramichi River in New Brunswick, Canada, to conduct ANN model predictions of hourly SWT. The study considered spring through autumn with hourly data from 1998 to 2007, finding that the ANN models performed similarly to or better than deterministic and stochastic models for both areas (Hebert et al., 2014).

Piotrowski et al. (2015) examined data from two streams, one mountainous and one lowland, in a moderately cold climate of eastern Poland to model SWT using MLPNN, PUNN, ANFIS, and WNN. The ANN models were independently calibrated to find the best fits, with results showing that MLPNN and PUNN slightly outperformed ANFIS and WNN (Piotrowski et al., 2015). The study also found current AT and information on the mean, maximum, and minimum AT from 1–2 d prior to be important for improving model accuracy (Piotrowski et al., 2015). Temizyurek and Dadaser-Celik (2018) used an ANN with observations of AT, relative humidity, prior month SWT, and wind speed to predict monthly SWT at four gages on the Kızılırmak River in Türkiye. Best results were obtained from using the sigmoidal (S-shape) activation function and the scaled conjugate gradient algorithm (Møller, 1993), though the average RMSE (∼2.3 °C) for the NN used was higher (worse) than the average calculated from this literature review where RMSE ∼1.4 °C.

Zhu et al. (2019a, c, d) conducted several studies that used NNs to examine SWT on the river Drava, Croatia (Zhu et al., 2019a, c, d). They also examined SWT of three rivers in Switzerland and three rivers in the US (Zhu et al., 2019a, b, c). Across the studies, the MLPNN models had better performance compared to ANFIS (Zhu and Heddam, 2019), GPR (Zhu et al., 2019a), or MLR (Zhu et al., 2019b). Qiu et al. (2020) used variations of NNs (MLP/BPNN, RBFNN, WNN, GRNN, ELMNN) to examine SWT at two stations on the Yangtze River, China, finding that the MLP/BPNN outperformed all other models when the particle swarm algorithm (PSO) was used for optimization (Qiu et al., 2020). Stream discharge and DOY were also shown to improve model accuracy. Piotrowski et al. (2020) used various MLPNN shallow (one hidden layer) structures to test the use of an approach called dropout in SWT modeling using data from six stations in Poland, Switzerland, and the US. The dropout approach can be applied to deep ANNs due to its efficiency in preventing overfitting and low computation requirements (Piotrowski et al., 2020). The study found that use of dropout and drop-connect significantly improved performance of the worst training cases. For more information on the use of dropout with shallow ANNs, we refer the reader to Piotrowski et al. (2020).

Graf and Aghelpour (2021) compared stochastic and ANN (ANFIS, RBF, GMDH) SWT models for four gages on the Warta River in Poland, finding that all models performed similarly well (R2>97.6 %). Results showed that the stochastic and ML models performed similarly, while the stochastic models had fewer prediction errors for extreme SWT (Graf and Aghelpour, 2021). Rajesh and Rehana (2021) used several ML models (ridge regression, K-nn, RF, SVR) to predict SWT at daily, monthly, and seasonal scales for a tropical river system in India. The authors found that the monthly SWT prediction performed better than the daily or seasonal (Rajesh and Rehana, 2021). Of the ML models, the SVR was the most robust, though a data assimilation algorithm notably improved predictions (Rajesh and Rehana, 2021). Jiang et al. (2022) examined SWT under the effects of the Jinsha River cascaded reservoirs using six ML models (i.e., adaptive boosting – AB, decision tree – DT, random forest – RF, support vector regression – SVR, gradient boosting – GB, and multilayer perceptron neural network – MLPNN). The study found that day of year (DOY) was most influential in each model for SWT prediction, followed by streamflow and AT (Jiang et al., 2022). With knowledge of the influential parameters, ML model variations were tested, finding that gradient boosting and random forest provided the most accurate estimation for the training dataset and the test dataset (Jiang et al., 2022). Abdi et al. (2021) used linear regression and a deep (multi-layered) neural network (DNN) to predict hourly SWT for the Los Angeles River, finding that the DNN outperformed the linear regressions. They suggested that using a variety of ML models to predict SWT could add robustness to a study but state that training ANNs is more time-consuming than training linear regression models for minimal improved accuracy (Abdi et al., 2021).

Khosravi et al. (2023) used an exploratory data analysis (EDA) technique, a type of feature engineering that prepares the dataset for best performance with an LSTM to identify SWT predictors (discharge, water level, AT, etc.) up to 1 week in advance for a monitoring station on the central Delaware River. The authors noted that though the LSTM performed satisfactorily, future studies should compare LSTMs with CNNs or other model types and that generalizability is limited to the specific location and dataset (Khosravi et al., 2023). Majerska et al. (2024) used GPR to simulate SWT for the years 2005–2022 for the Arctic catchment Fuglebekken in Svalbard, Norway. The unique opportunity to study SWT of an unglaciated High Arctic stream regime showed an alarming warming throughout the summer where SWT increased as much as 6 °C, highlighting a strong sensitivity of the Arctic system to ongoing climate change (Majerska et al., 2024).

2.4.4 Regional, continental scale (≥100 km2)

DeWeber and Wagner (2014) conducted one of the first regional ANN ensemble studies, focusing on thousands of individual streams reaches across the eastern US. They used an ensemble of 100 ANNs to estimate daily SWT with varying predictors for the 1980–2009 period, finding that daily AT, prior 7 d mean AT, and catchment area were the most important predictors (DeWeber and Wagner, 2014). In Serbia, Voza and Vuković (2018) conducted cluster analysis, PCA, and discriminant analysis for the Morava River Basin using data from 14 river stations to identify monitoring periods for sampling. With discriminant parameters identified, an MLPNN was used to predict changes in the values of the discriminant factors (see Fig. 1 of Voza and Vuković, 2018) and identify controls on the monitoring periods, finding that seasonality and geophysical characteristics were most influential (Voza and Vuković, 2018).

Rahmani et al. (2020) used 4 years of SWT data for 118 sites across the CONUS to test three LSTM models that simulated SWT, finding that the LSTM trained with streamflow observations was the most accurate, which was unsurprising. Of interest to the reader would be the inner mechanisms of the LSTM, but the study did not explicitly state what physical laws were followed by the LSTM. Instead, the authors hypothesized that the LSTM could assume internal representations of physical quantities (i.e., water depth, snowmelt, net heat flux, baseflow temperature, SWT). The authors further stated that the LSTM was dependent on a good historical data record and would not generalize well to ungaged basins. A follow-up study by Rahmani et al. (2021) used 6 years of SWT data and relevant meteorological parameters for 455 sites across the CONUS (minus California and Florida) to test LSTM models for data-scarce, dammed, and semi-ungaged basins (discharge used as input). The follow-up study showed improved performance, but the models remained limited in capturing the influence of latent contributions such as baseflow and subsurface storage. Feigl et al. (2021) tested the performance of six ML models – stepwise linear regression, RF, XGBoost, FFNNs, and two RNNs (LSTM and GRU) – using data from 10 gages in the Austria–Germany–Switzerland region to estimate daily SWT. From the comparison, FFNNs and XGBoost were the best-performing in 8 of 10 catchments (Feigl et al., 2021). For modeling SWT in large catchments (>96 000 km2 ∼ Danube catchment size), the RNNs performed best due to their long-term dependencies (Feigl et al., 2021). Zanoni et al. (2022) used RF, DNN, and a linear regression to predict daily SWT in the Warta River basin and compared the results with those of stochastic models. Their results found that the DNN was the most effective in capturing nonlinear relationships between drivers (i.e., SWT) and water quality parameters (Zanoni et al., 2022). On parameter influence, the analysis also found that DOY was an adequate surrogate for AT input in modeling SWT, experiencing only a slight performance reduction.

Heddam et al. (2022b) used six ML models – K-nn, LSSVM, GRNN, CCNN, RVM, and LWPR – to evaluate SWT for several of Poland's larger rivers. For each ML, three variations were created: one calibrating with only AT as input, another calibrating with AT and DOY, and a third decomposing AT using the variational mode decomposition (VMD) (Heddam et al., 2022b). For more on VMD, we refer the reader to Heddam et al. (2022a). The study found that the VMD parameters improved RMSE and MAE performance metrics for some models, but neither GRNN nor K-nn showed improvement. Heddam et al. (2022a) examined how use of the Bat algorithm optimized the extreme learning machine (Bat-ELM) neural network and how that in turn affected modeling of SWT in the Orda River in Poland. Results from the Bat-ELM were compared with MLPNN, CART, and multiple linear regression (MLR), finding the Bat-ELM outperformed MLPNN, CART, and MLR (Heddam, Kim, et al., 2022). Focusing on a region of Germany, Drainas et al. (2023) trained and tested various ANNs with different inputs for 16 small (≤1 m3 s−1) headwater streams, finding that the best-performing (lowest RMSE) input combination was stream-specific, suggesting that the optimal input combination cannot be generalized across streams for the region (Drainas et al., 2023). The ANN prediction accuracy of SWT was negatively affected by river length, total catchment area, and stream water level (Drainas et al., 2023). Additionally, ANN accuracy suffered when dealing with open-canopy land use types such as grasslands but improved with semi-natural and forested land cover (Drainas et al., 2023). Recently, He et al. (2024) built an LSTM framework to model water dynamics in stream segments while attempting to capture spatial and temporal dependencies. First, they created a baseline LSTM+GNN, then improved it by using graph masking and adjusting the model based on constraints (He et al., 2024). For the Delaware River Basin, the Fair-Graph model performed slightly better than the baseline with an RMSE of 1.83 vs. 1.78, respectively. For the Houston River network, the Fair-Graph model also performed slightly better than the baseline (NSE of 0.721 vs. 0.580). While the relative performance compared to the baseline was not significantly better, we anticipate that graph masking (an algorithm that incorporates spatial awareness into ANN) will play an increasingly large role in hydrologic modeling (Shen, 2018; He et al., 2024).

2.5 Decision support and climate change scenarios

In 2003, the United States Geological Survey (USGS) used an FFNN to estimate hourly SWT for a summer season in western Oregon (Risley et al., 2003). Their work used the predicted SWT to better constrain future total maximum daily loads (TMDLs) for stream management. Jeong et al. (2016) used an ANN to evaluate SWT for the Soyang River, South Korea. The goal was to couple the ANN predictions with a cyber infrastructure prototype system to deliver automated, real-time predictions using weather forecast data (Jeong et al., 2016).

Liu et al. (2018) used a hydrological model called the Variable Infiltration Capacity (VIC) model to produce estimates of AT and river-section-based variables for the Eel River Basin, Oregon, US, to be used as input data for an ANN. The study considered the AT rise from the RCP8.5 scenario to estimate future (2093–2100) daily streamflow and SWT, finding that SWT was increasingly sensitive to the proportion of baseflow in the summer (Liu et al., 2018). Topp et al. (2023) used the Delaware River Basin in the eastern US to compare two DL models: a recurrent graph convolution network (RGCN) and a temporal convolution graph model (TCGM) called Graph WaveNet. The comparison included scenarios capturing climate shifts representative of long-term projections where warm conditions or drought persisted. Considered spatiotemporally aware, the two process-guided deep learning models performed well (test RMSE of 1.64 and 1.65 °C); however, Graph WaveNet significantly outperformed RGCN in four out of five experiments where test partitions represented diverse types of unobserved environmental conditions.

Further focusing on the Delaware River Basin, Zwart et al. (2023a) used data assimilation and an LSTM to generate 1 and 7 d forecasts of daily maximum SWT for the purpose of aiding reservoir managers in decisions about when to release water to cool streams. Following up on this study was Zwart et al. (2023b), who used an LSTM and an RGCN, both with and without data assimilation, to generate 7 d forecasts of daily maximum SWT for monitored and unmonitored locations in the Delaware River Basin, finding that the RGCN with data assimilation performed best for ungaged locations and at higher SWT, which is important for reservoir operators to be aware of while drafting release schedules.

Rehana and Rajesh (2023) used a standalone LSTM, a WT-LSTM, and a k-nearest neighbor (K-nn) bootstrap resampling algorithm with LSTM to assess climate change impacts on SWT using downscaled projections of AT with RCPs 4.5 and 8.5 for seven polluted river catchments in India. Comparing the coupled models and the physically based Air2stream model, they found the K-nn coupled with LSTM to be the best-performing in terms of effectively predicting SWT at the monthly timescale. Considering the RCP scenarios, the predicted SWT increase for 2071–2100 for the rivers in India ranged from 3.0–4.7 °C.

The second part of this review compiles ML performance evaluation metrics as they pertain to SWT modeling and prediction and considers the commonly used metrics to suggest guidelines for easier comparison of ML performance across SWT studies. We considered journal articles from 2000–2024 that used ML to evaluate, predict, or forecast SWT and examine what model performance metrics were used. Performance metrics can be calculated during model calibration, testing, and (or) validation to compute a single value that denotes the agreeableness between simulated and observed data.

For this literature review, all journals examined used at least one metric to evaluate model performance, with two or more metrics used by >84 % of studies published in or after the year 2019. For review, the quantitative statistics were split into three categories: standard regression, dimensionless, and error index (Moriasi et al., 2007). Standard regression statistics (Pearson's r, R2) are ideal for examining the strength of the linear relationship between model simulations or predictions and the observed or measured data. Dimensionless techniques (NSE, KGE) provide a relative assessment of model performance but due to their interpretational difficulty (Legates and McCabe, 1999) have been less commonly used. In contrast, error indices (RMSE, MAE) quantify the error in terms of the units of the data (i.e., °C) considered.

3.1 Model performance metrics: standard regression

The most basic statistics (slope, y-intercept mean, median, standard deviation) continue to be used in part due to their simplicity and ease of interpretability. These statistics are useful for preliminary examinations, where the assumption is that measured and simulated values are linearly related, and all the variance of error is contained within the predictions or simulations, whilst the observations are free of error. Unfortunately, observations are rarely error-free, and datasets are nonlinear, highlighting a need for using a diverse set of statistics (Helsel and Hirsch, 2002). One such set of statistics commonly used for standard regressions are called the correlation coefficients – Kendall's tau, Spearman's rho, and Pearson's r.

Pearson's r, also known as the correlation coefficient, is used to determine the strength and direction (i.e., positive, negative) of a simple linear relationship (Helsel and Hirsch, 2002). Values of r range from −1 to +1, where r<0 indicates a negative correlation and r>0 indicates a positive correlation (Legates and McCabe, 1999). The square of r is denoted as r2, known as the square of the correlation coefficient, with values of r2 ranging from 0 to 1. The r2 metric is commonly used in simple linear regression to assess the goodness of fit by measuring the fraction of the variance in one variable (i.e., observations) that can be explained by the other variable (i.e., predictors). The metric r2 tends to be confused with R2, the latter of which is a statistical measure that represents the proportion of variance explained by the independent variable(s) in a multiple linear regression model (Helsel and Hirsch, 2002). Part of the confusion may be related to the fact that R2 shares the same range of 0 to 1, with R2=1 indicating that the model can explain all the variance, and vice versa. We note that while both r2 and R2 share similarities in that they measure the proportion of variance, R2 is more commonly used for multiple linear regression context, while r2 is best suited for simple linear regressions. To reduce confusion, we strongly suggest that r, r2, and R2 always be reported together (even if as a supplement to a manuscript) to characterize goodness of fit.

In contrast to the linear regression metrics, Spearman's rank correlation coefficient, rho (ρ), is a nonparametric rank-sum test useful for analyzing non-normally distributed data and nonlinear monotonic relationships (Helsel and Hirsch, 2002). The data are ranked on a range from −1 to +1, where ρ=0 indicates no association and or +1 suggests a perfect monotonic relationship. By ranking the data, Spearman's correlation coefficient quantifies monotonic relationships between two variables (converts nonlinear monotonic relationships to linear relationships), allowing ρ to be robust against outliers (Helsel and Hirsch, 2002).

3.2 Model performance metrics: error indices

The mean absolute error (MAE), mean square error (MSE), and root mean squared error (RMSE) are popular error indices used to assess model performance. The equations for MAE, MSE, and RMSE are as follows.

For the equations, N is the number of samples, Oi is the observed SWT, and Pi is the predicted SWT at time i. The MAE computes the average magnitude of the errors in a set of predicted values to obtain the average absolute difference between the predicted Pi and the observed Oi. In contrast to MAE, the MSE squares the error terms, resulting in the squared average difference between the predicted and observed values. The resultant MSE is not in the same units as the value of interest, making it difficult to interpret. As the square root of the MSE, RMSE provides an error index in the unit of the data (Legates and McCabe, 1999). However, both the MSE and RMSE are more sensitive to outliers and less robust than MAE.

Another error index used in SWT modeling is called the percent bias (PBIAS) index. PBIAS computes the average tendency of model predictions to be greater or smaller than the observations or measurements (Gupta et al., 1999). A PBIAS value of 0 is best, and low-magnitude values (closer to 0) denote stronger model accuracy. Positive PBIAS values suggest model underestimation, while negative PBIAS values suggest model overestimation (Moriasi et al., 2007).

3.3 Model performance metrics: dimensionless

The Nash–Sutcliffe efficiency (NSE, also called NSC, NS, or NASH) is a “goodness-of-fit” criterion that describes the predictive power of a model. Mathematically, the NSE is a normalized statistic that computes the relative magnitude of the variance of the residuals compared to the variance of the measured or observed data (Nash and Sutcliffe, 1970). Visually, the NSE shows how well the observed versus simulated data fit on a 1:1 line.

where is the average value of Oi. To compute the Kling–Gupta efficiency (KGE),

where r is the linear correlation coefficient between predictions and observations. The purpose of the KGE metric is to reach a balance between optimal conditions of modeled and observed quantities being perfectly correlated (i.e., r=1), with the same variance () and minimizing model output bias (). The Kling–Gupta efficiency (KGE) is based on a decomposition of NSE into separate components (correlation, variability bias, and mean bias) and tries to improve on NSE weaknesses (Knoben et al., 2019). Like NSE, KGE = 1 is a perfect fit between model simulations or predictions and observations or measurements. However, NSE and KGE values cannot be directly compared because each metric is influenced by the coefficient of variation of the observed time series (Knoben et al., 2019).

The Willmott index of agreement, d, ranging from 0 to 1, is defined as a standardized measure of model prediction error, where a value of 1 is perfect agreement between measured and predicted values, and a value of 0 indicates no agreement.

The Akaike information criterion (AIC) is a selection method used to compare several models to find the best approximating model for the dataset of interest (Akaike et al., 1973; Banks and Joyner, 2017; Portet, 2020). For details on the mathematical derivation and application of AIC, please see Banks and Joyner (2017), Portet (2020), and Piotrowski et al. (2021). The AIC equation version shown was developed for the least-squares approach (Anderson and Burnham, 2004):

where N is the number of samples, K is the number of model parameters + 1, and MSE is obtained by the model, for the respective dataset, per stream (Piotrowski et al., 2021). The Bayesian information criterion (BIC) was developed for studies where model errors are assumed to follow a Gaussian distribution (Faraway and Chatfield, 1998; Piotrowski et al., 2021). For other versions of BIC, please see Faraway and Chatfield (1998).

Unlike other performance metrics, the AIC and BIC are unique in their ability to penalize the number of parameters used by a model, thus favoring more parsimonious models. For both the AIC and BIC, lower values of the criterion point to a better model (Piotrowski et al., 2021).

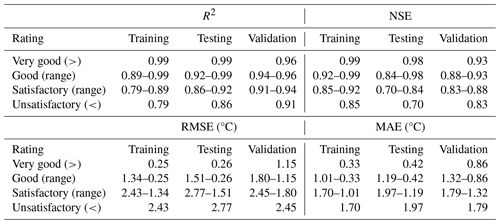

3.4 Performance metrics for most-cited ML statistics

Reviewing ML studies focused on SWT modeling (Table S1, S2), the most-cited performance metrics were RMSE (45 citations), NSE (25), MAE (18), and R2 (17). Having reviewed the literature and in agreement with previous published recommendations (Moriasi et al., 2007), we recommend that a combination of standard regression (i.e., r, r2, R2), dimensionless (i.e., NSE), and error index statistics (i.e., RMSE, MAE, PBIAS) be used for model evaluation and reported together in future publications. As part of our efforts to propose guidelines for easier comparison of ML performance across SWT studies, we identified the range in reported values for these four most-cited metrics and show the spread of values in the training and calibration as well as the testing and validation phases in box plot form.

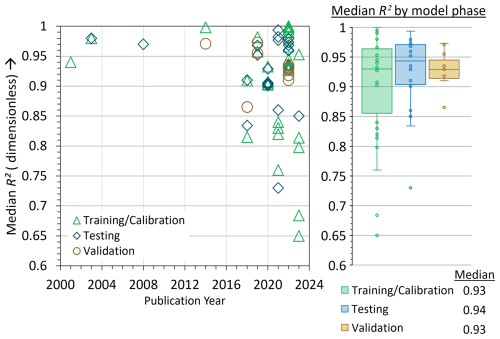

We begin with the standard regression and dimensionless statistics, R2 and NSE, both of which have an optimal value of 1. Figure 2 shows the median R2 per ML model per model phase for the cited publications. For example, Foreman et al. (2001) used an ANN to model SWT in the Fraser Watershed in British Columbia, Canada. Their model estimated 1995–1998 tributary and headwater temperatures and reported a median R2 (Fig. 2) of 0.93 for the training and calibration phase. Over the review period, the R2 range (2001–2024) was 0.65–1.00. We note that for process-based modeling, acceptable R2 values start around R2∼0.50 (Moriasi et al., 2007). In stark contrast, ML models published between 2000–2024 exhibited significantly higher R2 values, with a median of R2∼0.93 across 17 studies (Fig. 2).

Figure 2Median R2 (dimensionless) values from published literature for training and calibration, testing, and validation phases of model evaluation.

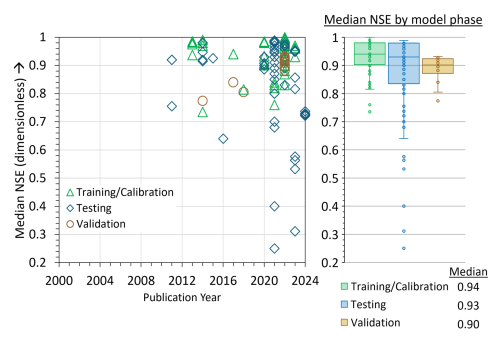

Figure 3Median NSE (dimensionless) values from published literature for training and calibration, testing, and validation phases of model evaluation.

Unlike the R2 metric, NSE was not used as a metric in ML studies of SWT between 2000 and 2010 (Fig. 3). The first ML study to use NSE was St. Hilaire et al. (2011) to analyze SWT in Catamaran Brook, a small catchment in New Brunswick, Canada. Fig. 3 shows that the NSE range reported by studies using ML for SWT was between 0.25–1.00 over the reviewed period (2000–2024). Like R2, NSE published values are high compared to traditional models (Moriasi et al., 2007, 2015), with a median NSE of 0.93 across 25 studies (Fig. 3). Overall, these complementary metrics should always be reported together as they provide a broader evaluation of model performance; i.e., NSE measures a model's predictive skill and error variance, while R2 assesses how well the model explains the variability of the data.

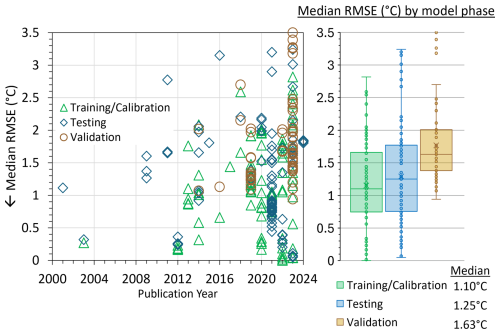

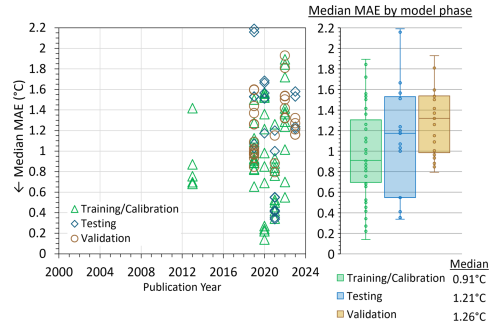

Figure 4 shows the median RMSE (°C) and Fig. 5 shows the median MAE (°C) per ML model per model phase for each publication. RMSE (°C) and MAE (°C) are popular error indices used in model evaluation because the metrics show error in the units of the data of interest (i.e., °C), which helps analysis of the results. RMSE and MAE values equal to 0 are a perfect fit. Over the review period, median RMSE (Fig. 4) ranged from 0.0002–3.50 °C. The median RMSE was 1.35 °C across 45 studies (Fig. 4). Figure 5 shows that between 2000–2012, MAE was not used as a metric in ML studies of SWT. The first ML study to use MAE for SWT modeling was Grbić et al. (2013), where the Gaussian process regression (GPR) ML approach was compared with field observations of SWT from the river Drava in Croatia to assess the feasibility of model development in SWT prediction. In contrast to RMSE, the MAE range (Fig. 5) was 0.14–2.19 °C. The median MAE overall was 1.09 °C across 18 studies (Fig. 5).

Figure 4Median RMSE (°C) values from published literature for training and calibration, testing, and validation phases of model evaluation.

Figure 5Median MAE (°C) values from published literature for training and calibration, testing, and validation phases of model evaluation.

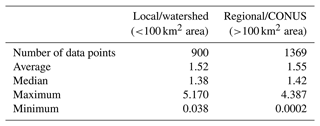

3.5 Spatial scale