the Creative Commons Attribution 4.0 License.

The Great Lakes Runoff Intercomparison Project Phase 4: the Great Lakes (GRIP-GL)

Hongren Shen

Bryan A. Tolson

Étienne Gaborit

Richard Arsenault

James R. Craig

Vincent Fortin

Lauren M. Fry

Martin Gauch

Daniel Klotz

Frederik Kratzert

Nicole O'Brien

Daniel G. Princz

Sinan Rasiya Koya

Tirthankar Roy

Frank Seglenieks

Narayan K. Shrestha

André G. T. Temgoua

Vincent Vionnet

Jonathan W. Waddell

Model intercomparison studies are carried out to test and compare the simulated outputs of various model setups over the same study domain. The Great Lakes region is such a domain of high public interest as it not only represents a challenging region to model with its transboundary location, strong lake effects, and regions of strong human impact but is also one of the most densely populated areas in the USA and Canada. This study brought together a wide range of researchers setting up their models of choice in a highly standardized experimental setup using the same geophysical datasets, forcings, common routing product, and locations of performance evaluation across the 1 × 10⁶ km² study domain. The study comprises 13 models covering a wide range of model types from machine-learning-based, basin-wise, subbasin-based, and gridded models that are either locally or globally calibrated or calibrated for each of the six predefined regions of the watershed. Unlike most hydrologically focused model intercomparisons, this study not only compares models regarding their capability to simulate streamflow (Q) but also evaluates the quality of simulated actual evapotranspiration (AET), surface soil moisture (SSM), and snow water equivalent (SWE). The latter three outputs are compared against gridded reference datasets. The comparisons are performed in two ways – either by aggregating model outputs and the reference to basin level or by regridding all model outputs to the reference grid and comparing the model simulations at each grid cell.

The main results of this study are as follows:

- The comparison of models regarding streamflow reveals the superior quality of the machine-learning-based model across all experiments; even for the most challenging spatiotemporal validation, the machine learning (ML) model outperforms all physically based models.

- While the locally calibrated models lead to good performance in calibration and temporal validation (even outperforming several regionally calibrated models), they lose performance when they are transferred to locations that the model has not been calibrated on. This is likely to be improved with more advanced strategies to transfer these models in space.

- The regionally calibrated models – while losing less performance in spatial and spatiotemporal validation than locally calibrated models – exhibit low performance in highly regulated and urban areas and agricultural regions in the USA.

- Comparisons of additional model outputs (AET, SSM, and SWE) against gridded reference datasets show that aggregating model outputs and the reference dataset to the basin scale can lead to different conclusions than a comparison at the native grid scale. The latter is deemed preferable, especially for variables with large spatial variability such as SWE.

- A multi-objective-based analysis of the model performances across all variables (Q, AET, SSM, and SWE) reveals overall well-performing locally calibrated models (i.e., HYMOD2-lumped) and regionally calibrated models (i.e., MESH-SVS-Raven and GEM-Hydro-Watroute) due to varying reasons. The machine-learning-based model was not included here as it is not set up to simulate AET, SSM, and SWE.

- All basin-aggregated model outputs and observations for the model variables evaluated in this study are available on an interactive website that enables users to visualize results and download the data and model outputs.

Model intercomparison projects are usually massive undertakings, especially when multiple (independent) groups come together to compare a wide range of models over large regions and a large number of locations (Duan et al., 2006; Smith et al., 2012; Best et al., 2015; Kratzert et al., 2019c; Menard et al., 2020; Mai et al., 2021; Tijerina et al., 2021). The aims of such projects are diverse. They might be to identify models that are most appropriate for certain objectives (e.g., simulating high flows or representing soil moisture) or to study the differences in model setups and implementations in detail.

Intercomparison projects are also well suited for introducing new models through the inherent benchmarking with other models (Best et al., 2015; Kratzert et al., 2019c; Rakovec et al., 2019). In particular, the recent successful application of data-driven models in hydrologic applications necessitates standardized experiments – such as model intercomparison studies – to make sure that the models are using the same information and strategies to enable fair comparisons.

Several model intercomparisons have been carried out over subdomains (here called regions) of the Great Lakes in the past (Fry et al., 2014; Gaborit et al., 2017a; Mai et al., 2021) under the umbrella of the Great Lakes Runoff Intercomparison Projects (GRIP). The GRIP projects were envisioned because the Great Lakes region, with its transboundary location, leads to (a) challenges such as finding datasets that are consistent across borders or models that are only set up on either side of the border, (b) challenges in the modeling itself due to, for example, climatic conditions driven by large lake effects and substantial areas of heavy agricultural land use and urban areas, and (c) a high public interest since the Great Lakes watershed is one of the most densely populated areas in Canada (32 % of the population) and the USA (8 % of the population; Michigan Sea Grant, 2022). Another reason for the high public interest is that changes in runoff to the Great Lakes have implications for water levels, which have undergone dramatic changes (from record lows in 2012–2013 to record highs in 2017–2020) in recent decades. The aforementioned GRIP projects were set up over regions of the entire Great Lakes watershed, i.e., Lake Michigan (GRIP-M; Fry et al., 2014), Lake Ontario (GRIP-O; Gaborit et al., 2017a), and Lake Erie (GRIP-E; Mai et al., 2021), and primarily investigated the model performances regarding simulated streamflow even though studies show that additional variables beyond streamflow might be helpful to improve the realism of models (Demirel et al., 2018; Tong et al., 2021).

Previous GRIP studies have pointed out limitations regarding the conclusions that can be drawn. In particular, the last study (GRIP-E by Mai et al., 2021) listed the following challenges in the concluding remarks:

- I. There was only a short period of forcings (5 years) to calibrate and evaluate the models.

- II. There was only a comparison of streamflow but no additional variables like soil moisture.

- III. Even though all models use the same forcings, all other datasets (soil texture, vegetation, basin delineation, etc.) were not standardized.

Hence, model differences might be due to different quality input data but not due to differences in models themselves. The study presented here will address these previous GRIP study limitations. Furthermore, this study demonstrates some unique intercomparison aspects that are not necessarily common practice in large-scale hydrologic model intercomparison studies. Key noteworthy aspects of this intercomparison study are as follows:

- Datasets used. Hydrologic and land surface models need data to be set up (e.g., soil and land cover) and to be forced (e.g., with precipitation). Ideally, the forcing data have a high temporal and spatial resolution, complete coverage of the domain of interest, a long available time period, and no missing data. In this study, models will use common datasets for these forcings and for all geophysical data required to set up the various kinds of models. A recently developed 18-year-long forcing dataset will be employed (Gasset et al., 2021). This addresses limitations (I) and (III) above.

- Variables compared. Models are traditionally only evaluated for one primary variable (e.g., streamflow). In this study, models will be evaluated regarding variables beyond streamflow such as evapotranspiration, soil moisture, and snow water equivalent. This addresses limitation (II) above.

- Method of comparison. A model intercomparison might include various model types, e.g., lumped models vs. semi-distributed or conceptual vs. physically based vs. data driven. To compare these models – especially when not only comparing time series like streamflow – model-agnostic methods are needed (meaning methods that do not favor one type of model over others). We will perform comparisons on different scales and use metrics to account for these issues. This addresses an issue associated with limitation (II).

- Types of models considered. Model intercomparison studies traditionally use conceptual or physically based hydrologic models. The rising popularity and success of data-driven models in hydrologic science (e.g., Herath et al., 2021; Nearing et al., 2021; Feng et al., 2021), however, warrant the inclusion of such models in these studies, provided that the data-driven, machine-learning-based models are given the same information and input data as traditional models to guarantee a fair comparison. A machine-learning-based model was included in this study to demonstrate the advantages and limitations of state-of-the-art machine-learning-based models compared to conceptual and physically based models.

- Communication of results. Large studies with many models and locations at which they are compared lead to vast amounts of model results. During such a study, it is challenging for collaborators to process and compare all results. After the study is completed, it is equally challenging to present all results in detail in a paper such that the data and results are accessible to others. In this study, we shared data through an interactive website, which was first internally accessible during the study and then publicly shared to enhance data sharing, exploring, and communication with a broader audience.

The remainder of the paper is structured as follows. Section 2 presents the materials used and methods applied including a description of datasets, models, metrics, and types of comparative analyses. Section 3 describes and discusses the results of the analyses performed, while Sect. 4 summarizes the conclusions that can be drawn from the experiments performed here. Note that this work is accompanied by an extensive Supplement, primarily providing more details for model setups, and an interactive website (http://www.hydrohub.org/mips_introduction.html, last access: 6 July 2022) (Mai, 2022), for sharing and exploring comparative results.

This section first describes the study domain (Sect. 2.1), datasets used to set up and force models (Sect. 2.2), and the common routing product produced for this study and used by most of the models (Sect. 2.3). A brief description of the 13 participating models can be found in Sect. 2.4. The datasets used to calibrate and validate the models regarding streamflow and the datasets to evaluate the models beyond streamflow are introduced in Sects. 2.5 and 2.6, respectively, followed by Sect. 2.7, which defines the metrics to determine the models' ability to simulate the variables provided in these datasets using two different approaches, depending on which spatial aggregation is applied (Sect. 2.8). A multi-objective analysis described in Sect. 2.9 is performed to augment the previous single objective analyses that evaluated the performance across the four variables independently. The data used (e.g., model inputs) and produced (e.g., model outputs) in this study are made available through an interactive website, for which features are explained in Sect. 2.10.

2.1 Study domain

The domain studied in this work is the St. Lawrence River watershed, including the Great Lakes basin and the Ottawa River basin. The five Great Lakes located within the study domain contain 21 % of the world's surface fresh water and approximately 34 million people in the USA and Canada live in the Great Lakes basin. This is about 8 % of the USA's population and about 32 % of Canada's population (Michigan Sea Grant, 2022). The study domain chosen consists of six major regions, i.e., the five local lake regions (i.e., regions that are partial watersheds and do not include upstream areas draining into upstream lakes) draining into Lake Superior, Lake Huron, Lake Michigan, Lake Erie, and Lake Ontario, and one region that is defined by the Ottawa River watershed. The latter was included as it was important to some collaborators to evaluate how well models performed on this highly managed/human-impacted watershed. Furthermore, the Ottawa River flow has implications for Lake Ontario outflow management.

The study domain (including the Ottawa River watershed) is 915 798 km² in size, of which 630 844 km² (68.9 %) are land, while 284 954 km² (31.1 %) are water bodies. These estimates are derived using the common routing product used in this study; more information about this product can be found in Sect. 2.3. The outline of the study domain is displayed in Fig. 1.

The land cover of land areas (water bodies excluded) is dominated by forest (71 %) and cropland (6.2 %), estimated based on the North American Land Change Monitoring System (NALCMS; NACLMS, 2017). The soil types of land areas (water bodies excluded) are dominated by sandy loam (47.3 %), loam (19.1 %), loamy sand (11.5 %), and silt loam (10.5 %). These estimates are derived using the vertically averaged soil data based on the entire soil column up to 2.3 m from the Global Soil Dataset for Earth System Models (GSDE; Shangguan et al., 2014), and soil classes are based on the United States Department of Agriculture's (USDA) classification. The elevation of the Great Lakes watershed (including the Ottawa River watershed) ranges from 17 to 1095 m. The mean elevation is 270 m, and the median is 247 m. These estimates are derived using the HydroSHEDS 90 m (3 arcsec) digital elevation model (DEM) data (Lehner et al., 2008). These datasets – NALCMS, GSDE, and HydroSHEDS – are the common datasets used by all partners in this study to set up models. More details about the datasets are provided in Sect. 2.2.

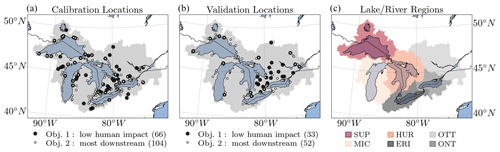

Figure 1. Study domain and streamflow gauging locations. In total, 212 streamflow gauging stations (dots) located in the Great Lakes watershed, including the Ottawa River watershed (gray area in panels a and b), have been used in this study. Note that all selected gauging stations eventually drain to one of the Great Lakes or the Ottawa River, and none are downstream of any of the Great Lakes. Hence, most models do not simulate the areas of the Great Lakes themselves. Panel (a) shows the location of stations used for calibration regarding streamflow, where 66 of them are downstream of a low human impact watershed (objective 1; large black dots), and 104 stations are most downstream, draining into one of the five lakes or the Ottawa River (objective 2; smaller dots with a white center). In total, there are 141 stations used for calibration, as 29 stations are both low human impact and most downstream (large black dots with white center). Panel (b) shows the 71 validation stations, of which 33 are low human impact, 52 are most downstream, and 14 are both low human impact and most downstream. The number of stations is added in parentheses to the labels in the legend. Panel (c) shows the six main regions of the study domain, i.e., the Lake Superior region (SUP), the Lake Michigan region (MIC), the Lake Huron region (HUR), the Lake Erie region (ERI), the Ottawa River region (OTT), and the Lake Ontario watershed (ONT).

2.2 Meteorologic forcings and geophysical datasets

All models contributing to this study used the same set of meteorologic forcings and geophysical datasets to set up and run their models. Additional datasets required to set up specific models had to be reported to the team and made available to all collaborators in order to make sure everyone would have the chance to use that additional information as well. The common datasets used here were determined after the preceding Great Lakes Runoff Intercomparison Project for Lake Erie (GRIP-E; Mai et al., 2021), in which contributors were allowed to use datasets of their preference. The team for the Great Lakes project (Great Lakes Runoff Intercomparison Project Phase 4: the Great Lakes – GRIP-GL) evaluated all datasets used by all models in GRIP-E and decided together which single dataset to commonly use in GRIP-GL. As a consequence, most models had to be set up again because, for all models, at least one dataset used in GRIP-E differed from the one selected for GRIP-GL. The common datasets are briefly described in the following.

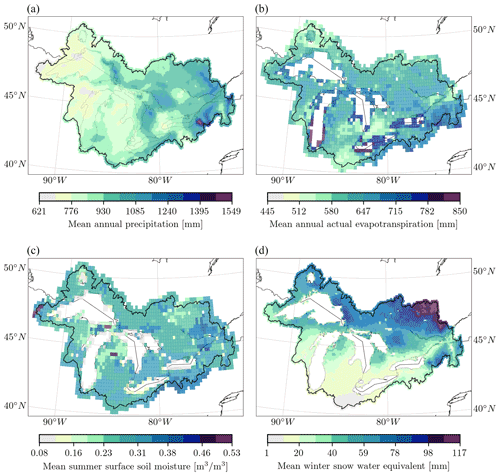

The Regional Deterministic Reanalysis System v2 (RDRS-v2) was employed as meteorologic forcing (Gasset et al., 2021). RDRS-v2 is an hourly and 10 km by 10 km meteorologic forcing dataset covering North America (Fig. 2a). Forcing variables such as precipitation, temperature, humidity, and wind speed, among others, are available from January 2000 until December 2017 through the Canadian Surface Prediction Archive (CaSPAr; Mai et al., 2020b). The dataset has been provided to all the participating groups in two stages. First, only data for the calibration period (January 2000 to December 2010) were shared. Second, after finalizing the calibration of all models, the data were shared for the validation period (January 2011 until December 2017). This allowed for a true blind (temporal) validation of the models. This was possible since the RDRS-v2 dataset was not publicly available when the GRIP-GL project started, and the participating groups (except the project leads) did not have access to the data. The forcings were aggregated according to the needs of each model. For example, lumped models used aggregated basin-wise daily precipitation and minimum and maximum daily temperature instead of the native gridded, hourly inputs.
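The aggregation described for lumped models can be sketched as follows. This is only a minimal illustration, assuming the hourly gridded forcings are already available as NumPy arrays and that the fraction of the basin covered by each grid cell is known; the function name, array layout, and the choice of day boundaries (whole days starting at the first time step) are assumptions and not the preprocessing code used in the study.

```python
import numpy as np

def basin_daily_forcings(precip_hourly, temp_hourly, weights):
    """Aggregate hourly gridded forcings to basin-average daily series.

    precip_hourly, temp_hourly: arrays of shape (n_hours, n_cells), where
    n_hours is a multiple of 24. weights: fraction of the basin covered by
    each grid cell, shape (n_cells,), summing to 1.
    """
    n_hours = precip_hourly.shape[0]
    if n_hours % 24 != 0:
        raise ValueError("expected whole days of hourly data")
    # Basin average per hour: weighted sum over grid cells.
    p_basin = precip_hourly @ weights
    t_basin = temp_hourly @ weights
    # Reshape to (n_days, 24) and reduce over the hours of each day.
    p_daily = p_basin.reshape(-1, 24).sum(axis=1)   # daily total
    t_min = t_basin.reshape(-1, 24).min(axis=1)     # daily minimum
    t_max = t_basin.reshape(-1, 24).max(axis=1)     # daily maximum
    return p_daily, t_min, t_max

# Synthetic example: 2 days of data on 3 grid cells.
rng = np.random.default_rng(0)
precip = rng.random((48, 3))            # mm per hour, made up
temp = rng.normal(5.0, 3.0, (48, 3))    # degrees Celsius, made up
w = np.array([0.5, 0.3, 0.2])
p_daily, t_min, t_max = basin_daily_forcings(precip, temp, w)
```

Averaging in space before taking the daily minimum and maximum is one possible order of operations; a model setup could equally take per-cell extremes first and average afterwards.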

The HydroSHEDS dataset (Lehner et al., 2008) was used as the common digital elevation model (DEM) for the project. It has a 3 arcsec resolution which corresponds to about 90 m at the Equator. Since the upscaled HydroSHEDS DEMs with 15 arcsec (500 m) and 30 arcsec (1 km) are consistent with the best-resolution dataset, the collaborators were allowed to pick the resolution most appropriate for their setup. The DEM was then postprocessed to the specific needs of each model. For example, some lumped models required the average elevation of each watershed as an input. The data were downloaded from the HydroSHEDS website (https://www.hydrosheds.org, last access: 6 July 2022), cropped to the study domain, and provided to the collaborators.
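The correspondence between angular resolution and ground distance can be checked with a short calculation. The sketch below assumes a spherical Earth with an equatorial circumference of about 40 075 km; the latitude of 45° is chosen only for illustration and is not a value taken from the study.

```python
import math

EARTH_CIRCUMFERENCE_M = 40_075_017  # approximate equatorial circumference

def arcsec_to_metres(arcsec, latitude_deg=0.0):
    """East-west ground distance spanned by an angle given in arcseconds."""
    metres_per_arcsec = EARTH_CIRCUMFERENCE_M / (360 * 3600)
    return arcsec * metres_per_arcsec * math.cos(math.radians(latitude_deg))

for res in (3, 15, 30):
    at_equator = arcsec_to_metres(res)
    at_45_north = arcsec_to_metres(res, latitude_deg=45.0)
    print(f"{res} arcsec: {at_equator:.0f} m at the Equator, "
          f"{at_45_north:.0f} m east-west at 45 degrees latitude")
```

The quoted 90 m, 500 m, and 1 km are therefore nominal values; the east-west extent of a cell shrinks with the cosine of the latitude.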

The Global Soil Dataset for Earth System Models (GSDE; Shangguan et al., 2014) was used as the common soil dataset for all models in this study. This dataset, with a 30 arcsec spatial resolution (approximately 1 km at the Equator), contains eight layers of soil up to a depth of 2.3 m. The data were downloaded directly from the website mentioned in the Shangguan et al. (2014) publication and preprocessed for the needs of the collaborators and models. For example, the data were regridded to the grid of the meteorologic RDRS-v2 forcings, as this was the grid for which most distributed models were set up. Some other models required the aggregation of soil properties to specific (fixed) layers different to those distributed. The project leads also converted the soil textures provided to soil classes as this was required by a few models. All these data products were converted to a standardized NetCDF format and shared with the collaborators.
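Aggregating soil properties to fixed model layers can be illustrated with a thickness-weighted average. The sketch below is an assumption about how such a re-layering might be done, not the preprocessing used by the project leads; the layer depths and sand percentages are hypothetical, and a linear average may not be appropriate for every soil property.

```python
import numpy as np

def relayer(values, src_bottoms, dst_bottoms):
    """Thickness-weighted average of layer values onto new layer bounds.

    values: property per source layer. src_bottoms / dst_bottoms: increasing
    depths (m) of the layer bottoms; the first layer starts at 0 m. Target
    layers are assumed to lie within the source soil column.
    """
    values = np.asarray(values, dtype=float)
    src_edges = np.concatenate(([0.0], src_bottoms))
    dst_edges = np.concatenate(([0.0], dst_bottoms))
    out = np.empty(len(dst_bottoms))
    for i in range(len(dst_bottoms)):
        top, bottom = dst_edges[i], dst_edges[i + 1]
        # Overlap (m) of every source layer with this target layer.
        overlap = np.clip(
            np.minimum(src_edges[1:], bottom) - np.maximum(src_edges[:-1], top),
            0.0, None)
        out[i] = np.sum(overlap * values) / np.sum(overlap)
    return out

# Hypothetical example: eight source layers down to 2.3 m, three target layers.
src_bottoms = [0.05, 0.1, 0.2, 0.3, 0.5, 0.8, 1.4, 2.3]
sand_pct = [60, 58, 55, 52, 50, 47, 45, 40]
target = relayer(sand_pct, src_bottoms, [0.1, 0.5, 2.3])
```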

As a common land cover dataset for all models, the North American Land Change Monitoring System (NALCMS) product including 19 land cover classes for North America was used. The dataset has a 30 m by 30 m resolution and is based on Landsat imagery from 2010 for Mexico and Canada and from 2011 for the USA. The data can be downloaded from a website (NACLMS, 2017). The data were downloaded, cropped to the study domain, and saved in a common TIFF file format. Furthermore, model-specific postprocessing of the data included regridding to model grids and aggregation of the land cover classes to the eight classes common in MODIS. Those datasets were saved in standard NetCDF file formats and provided to the collaborators.

Figure 2. Meteorologic forcing data and auxiliary datasets. (a) The meteorologic forcings are provided by the Regional Deterministic Reanalysis System v2 (RDRS-v2; 10 km; hourly; Gasset et al., 2021). The figure shows the mean annual precipitation derived for the 18 years during which the dataset is available and used in this study (2000–2017). The forcing inputs are preprocessed as needed by the models, e.g., aggregated to basin averages or aggregated to daily values. (b) Actual evapotranspiration estimates and (c) surface soil moisture from the Global Land Evaporation Amsterdam Model (GLEAM v3.5b; 25 km; daily; Martens et al., 2017) and (d) snow water equivalent estimates from the ERA5-Land dataset (Muñoz Sabater, 2019; 10 km; daily) have been used to evaluate the calibrated model setups. All datasets have been cropped to the study domain (black line). The actual evapotranspiration and surface soil moisture (panels b and c) are not available over the lake area (missing grid cells). Panels (b–d) show the mean annual estimates for the auxiliary variables. The average soil moisture (panel c) is based on summer time steps only (June to October), while for the snow water equivalent annual mean only values larger than 1 mm of daily snow water equivalent were considered. In both cases, this is what was considered to evaluate model performance regarding these variables.
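The two selection rules mentioned in the caption (summer time steps only for soil moisture, values above 1 mm only for snow water equivalent) can be sketched as simple masked means. The function names and the sample values are hypothetical and only illustrate the filtering.

```python
import numpy as np

def summer_mean(values, months, first=6, last=10):
    """Mean over time steps whose month lies in [first, last] (June-October)."""
    mask = (months >= first) & (months <= last)
    return values[mask].mean()

def mean_above(values, threshold=1.0):
    """Mean over values strictly larger than the threshold (NaN if none)."""
    selected = values[values > threshold]
    return selected.mean() if selected.size else np.nan

months = np.array([1, 4, 6, 8, 10, 12])
ssm = np.array([0.40, 0.35, 0.30, 0.20, 0.25, 0.38])   # made-up soil moisture
swe = np.array([50.0, 5.0, 0.0, 0.0, 0.5, 20.0])       # made-up SWE in mm

ssm_summer = summer_mean(ssm, months)    # uses June, August, October only
swe_snow = mean_above(swe, threshold=1.0)  # ignores 0.0, 0.0, and 0.5 mm
```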

2.3 Routing product

The GRIP-GL routing product is derived from the HydroSHEDS DEM (Lehner et al., 2008), drainage directions, and flow accumulation. All of them are in 3 arcsec (90 m) resolution. The GRIP-GL routing product is generated by BasinMaker (Version 1.0; Han, 2021), which is a set of Python-based geographic information system (GIS) tools for supporting vector-based watershed delineation, discretization, and simplification and incorporating lakes and reservoirs into the network. This toolbox has been successfully applied for producing the Pan-Canadian (Han et al., 2020) and North American routing product (Han et al., 2021b). More detailed information, a user manual, and tutorial examples can be found on the BasinMaker website (Han et al., 2021a).

In this study, we used the HydroSHEDS DEM, flow direction, and flow accumulation at the 3 arcsec resolution for BasinMaker to delineate the watersheds and discretize them into subbasins. The HydroLAKES database, which provides a digital map of lakes globally (Messager et al., 2016), is used in the watershed delineation as well. The Terra and Aqua combined Moderate Resolution Imaging Spectroradiometer (MODIS) Land Cover Type (MCD12Q1) Version 6 data (USGS, 2019) is used in BasinMaker to estimate the Manning's n coefficient for the floodplains. The global river channel bankfull width database developed by Andreadis et al. (2013) is used to calculate geometry characteristics of river cross sections.

This routing product is compatible with other hydrological models (i.e., runoff-generating models at any resolution, either gridded or vector based) when the Raven hydrologic modeling framework (Craig et al., 2020) is used as the routing module. The routing product contains adequate information for hydrologic routing simulation, including the routing network topology, attributes of watersheds, lakes, and rivers, and initial estimates for routing parameters (e.g., Manning's coefficient). The key to routing arbitrary-resolution spatiotemporal runoff fluxes through Raven is the derivation of grid weights to remap the fluxes of runoff onto the routing network discretization. A workshop on how to couple the models participating in this study with the Raven framework in routing-only mode was held in October 2020. After that workshop, all modeling teams except one decided to use the common routing product and route their model-generated runoff through the Raven modeling framework. The GEM-Hydro-Watroute model (see the list of models in Sect. 2.4 and Table 1) was the only model to use its native routing scheme. Preliminary calibrated versions of, for example, the subbasin-based Soil and Water Assessment Tool (SWAT) were tested with and without Raven-based routing across 13 individually calibrated basins. These tests confirmed that SWAT showed notably improved streamflow predictions when its water fluxes were routed with Raven-based routing rather than with its native lake and channel routing approaches (Shrestha et al., 2021).
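The idea behind the grid weights can be illustrated with a weighted average. This sketch does not reproduce Raven's input format or API; it only assumes that each weight is the fraction of a subbasin's area covered by a given grid cell, and all names and numbers are hypothetical.

```python
import numpy as np

def remap_runoff(runoff_grid, weights):
    """Map gridded runoff onto subbasins of the routing network.

    runoff_grid: (n_times, n_cells) runoff depth per grid cell (mm).
    weights: (n_subbasins, n_cells); weights[s, c] is the fraction of
    subbasin s covered by grid cell c, so each row sums to 1.
    """
    if not np.allclose(weights.sum(axis=1), 1.0):
        raise ValueError("each subbasin's weights must sum to 1")
    return runoff_grid @ weights.T   # shape (n_times, n_subbasins)

# Two subbasins overlapping three grid cells, two time steps.
weights = np.array([[0.7, 0.3, 0.0],
                    [0.0, 0.4, 0.6]])
runoff = np.array([[1.0, 2.0, 3.0],
                   [0.0, 0.0, 5.0]])
subbasin_runoff = remap_runoff(runoff, weights)
```

Because the weights depend only on geometry, they need to be derived once per combination of model grid and routing network and can then be reused for every time step.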

The common routing product includes the explicit representation of 573 lakes (214 located in calibration basins and 359 in validation basins). Small lakes with a lake area less than 5 km² were not included to achieve a balance between lake representation and computational burden. BasinMaker requires a threshold of flow accumulation to identify streams and watersheds. We used a flow accumulation value of 5000 for this parameter. Generally, the smaller this value, the more subwatersheds and tributaries will be identified.
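The effect of the flow accumulation threshold can be sketched on a toy grid. This is not BasinMaker code; it assumes that flow accumulation is expressed as a count of upstream cells and that a cell is treated as a stream once the count reaches the threshold, and the grid values are made up.

```python
import numpy as np

def stream_mask(flow_acc, threshold=5000):
    """Cells whose upstream cell count reaches the threshold count as streams."""
    return flow_acc >= threshold

# Made-up flow accumulation grid (number of upstream cells).
flow_acc = np.array([[ 10,  200,  6000],
                     [150, 5200, 12000],
                     [  5, 1900, 30000]])

# Lowering the threshold marks more cells as streams.
counts = {thr: int(stream_mask(flow_acc, thr).sum()) for thr in (5000, 1000, 100)}
```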

The routing product was prepared at three equivalent spatial scales. First, the routing networks for each of the 212 gauged basins (141 calibration basins and 71 validation basins) were individually delineated. The gauged basins were discretized into 4357 subbasins (2187 for calibration basins and 2170 for validation basins) with an average size of about 220 km² (221 km² for calibration basins and 215 km² for validation basins). The 573 lake subbasins are further discretized into one land and one lake hydrological response unit (HRU) per lake subbasin. Second, for models that were regionally calibrated, for ease of simulating entire regions at once, the above gauge-based routing networks were aggregated into six regional routing networks. Importantly, at all 212 gauge locations, the regional routing networks have upstream routing networks that are equivalent to the individual routing networks for each gauge. Third, the global setup for the entire Great Lakes region was prepared but not used by any of the collaborators. More details about the streamflow gauge stations are given in Sect. 2.5.

2.4 Participating models

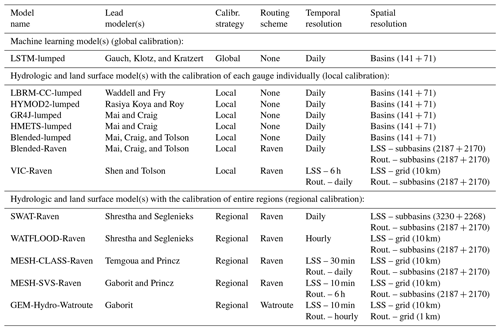

The 13 models participating in this study are listed in Table 1, including the (a) co-authors leading the model setups, calibration, validation, and evaluation, (b) calibration strategy, (c) routing scheme used, and (d) temporal and (e) spatial resolution of the models. The models are grouped according to their major calibration strategy. The first group is the machine-learning-based model, which also happens to be the only model with a global setup (Sect. 2.4.1); the second group comprises the seven models that are locally calibrated (Sect. 2.4.2), and the third group comprises the five models that followed a regional calibration strategy (Sect. 2.4.3). The calibration strategy (local, regional, and global) and calibration setup (algorithm, objective, and budget) were subject to the expert judgment of each modeling team. The main goal of this project was to deliver the best possible model setup under a given set of inputs; the standardization and enforcement of calibration procedures would have limited this significantly due to the wide range of model complexity and runtimes. The models are briefly described below, including a short definition of these three calibration strategies. More details about the models can be found in the Supplement, including the lists of parameters that have been calibrated for each model.

Please note that the model names follow a pattern. The last part of the name indicates whether the model does not require routing (e.g., XX-lumped), used the common Raven routing (e.g., XX-Raven), or used another routing scheme (i.e., XX-Watroute).
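The naming pattern can be expressed as a small helper. The function is purely illustrative and hypothetical; the model names used in the example are taken from this paper.

```python
def routing_from_name(model_name):
    """Infer the routing setup from the last part of a model name."""
    suffix = model_name.rsplit("-", 1)[-1]
    if suffix == "lumped":
        return "no routing required"
    if suffix == "Raven":
        return "common Raven routing"
    return f"other routing scheme ({suffix})"

for name in ("LSTM-lumped", "MESH-SVS-Raven", "GEM-Hydro-Watroute"):
    print(name, "->", routing_from_name(name))
```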

Table 1. List of participating models. The table lists the participating models and the lead modelers responsible for model setups, calibration, and validation runs. The models are separated into three groups (see headings in the table), namely machine learning (ML) models which are all globally calibrated, hydrologic models that are calibrated at each gauge (local calibration), and models that are trained for each region, such as the Lake Erie or Lake Ontario watershed (regional calibration). Note that the temporal and spatial resolution of the fluxes of the land surface scheme (LSS) can be different from the resolutions used in the routing (Rout.) component. All LSS grids are set to the RDRS-v2 meteorological data forcing grid of around 10 km by 10 km. The two numbers given in the column specifying the spatial resolution (XXX + YYY) correspond to the spatial resolution of the models regarding calibration basins (XXX) and validation basins (YYY).

2.4.1 Machine-learning-based model

- LSTM-lumped. One model based on machine learning, a Long Short-Term Memory network (LSTM), was contributed to this study. The model is called LSTM-lumped throughout this study. LSTM networks were first introduced by Hochreiter and Schmidhuber (1997) and used for rainfall–runoff modeling by Kratzert et al. (2018). The model was set up using the NeuralHydrology Python library (Kratzert et al., 2022). An LSTM is a deep-learning model that learns to model relevant hydrologic processes purely from data. The LSTM setup used in this study is similar to that from Kratzert et al. (2019c) and has been successfully applied for streamflow prediction in a variety of studies (e.g., Klotz et al., 2021; Gauch et al., 2021; Lees et al., 2021). The model inputs are nine basin-averaged daily meteorologic forcing variables (precipitation, minimum and maximum temperature, components of wind, etc.), nine static scalar attributes derived for each basin from these forcings for the calibration period (mean daily precipitation, fraction of dry days, etc.), 10 attributes derived from the common land cover dataset (fraction of wetlands, cropland, etc.), six attributes derived from the common soil database (sand, silt, and clay content, etc.), and five attributes derived from the common digital elevation model (mean elevation, mean slope, etc.). The aforementioned data and attributes are based solely on the common dataset (Sect. 2.2). Streamflow is not part of the input variables. A full list of attributes used can be found in Sect. S3.1 in the Supplement. The LSTM setup follows a global calibration strategy, which means that the model was trained for all 141 calibration stations at the same time, resulting in a single trained model for the entire study domain that can be run for any (calibration or validation) basin as soon as the required input variables are available. The LSTM training involved fitting around 300 000 model parameters. This number should, however, not be directly compared to the number of parameters in traditional hydrological models because the parameters of a neural network do not explicitly correspond to individual physical properties. The training of one model takes around 2.75 h on a single graphics processing unit (GPU). The Supplement contains the full list of inputs and a more detailed overview of the training procedure (Sect. S3.1). Unlike process-based hydrologic models, the internal states of an LSTM do not have a direct semantic interpretation but are learned by the model from scratch. In this study, the LSTM was only trained to predict streamflow, which is why it did not participate in the comparison of additional variables in this study. Note, however, that even though the model does not explicitly model additional physical states, it is nevertheless possible – to some degree – to extract such information from the internal states with the help of a small amount of additional data (Lees et al., 2022; Kratzert et al., 2019a).
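To give a feeling for where a parameter count of this order can come from, the sketch below counts the trainable parameters of a single LSTM layer followed by a linear output head. The hidden size of 256 is an assumption made here for illustration and is not stated in this section (the actual configuration is documented in the Supplement); the sketch also assumes that the static attributes are concatenated to the dynamic inputs at every time step and that two bias vectors per gate are used, as in PyTorch's `nn.LSTM`.

```python
def lstm_parameter_count(n_inputs, hidden_size, two_bias_vectors=True):
    """Trainable parameters of one LSTM layer plus a linear output head.

    Each of the four gates (input, forget, cell, output) has an input weight
    matrix, a recurrent weight matrix, and one or two bias vectors.
    """
    n_bias = 2 if two_bias_vectors else 1
    per_gate = (hidden_size * n_inputs          # input weights
                + hidden_size * hidden_size     # recurrent weights
                + n_bias * hidden_size)         # bias vector(s)
    head = hidden_size + 1                      # hidden state -> one output value
    return 4 * per_gate + head

# 9 forcings + 9 climate, 10 land cover, 6 soil, and 5 elevation attributes.
n_inputs = 9 + 9 + 10 + 6 + 5
n_params = lstm_parameter_count(n_inputs, hidden_size=256)  # hidden size assumed
print(n_inputs, n_params)
```

Under these assumptions the count is on the order of 300 000, and it is dominated by the recurrent weight matrices rather than by the number of inputs.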

2.4.2 Locally calibrated models

The following models follow a local calibration strategy, i.e., the models are trained for each of the 141 calibration stations individually. This leads to one calibrated model setup per basin. In order to simulate streamflow for basins that are not initially calibrated (spatial or spatiotemporal validation), the model needs a calibrated parameter set to be transferred to the uncalibrated basin. This parameter set is provided by a (calibrated) donor basin. All locally calibrated models in this study used the same donor basins for the validation basins, as described in Sect. 2.5. The individual models will be briefly described in the following (one model per paragraph). More details for each model can be found in the Supplement.

-

LBRM-CC-lumped. The Large Basin Runoff Model (LBRM) – described by Crowley (1983), with recent modifications to incorporate the Clausius–Clapeyron relationship, LBRM-CC, described by Gronewold et al. (2017) – is a lumped conceptual rainfall–runoff model developed by NOAA Great Lakes Environmental Research Laboratory (GLERL) for use in the simulation of total runoff into the Great Lakes (e.g., used by the U.S. Army Corps of Engineers in long-term water balance monitoring and seasonal water level forecasting). The model will be referred to as LBRM-CC-lumped hereafter. Inputs to LBRM-CC-lumped include daily areally averaged (lumped) precipitation, maximum temperature, and minimum temperature, as well as the contributing watershed area. All these inputs are based on the common dataset described in Sect. 2.2. For each basin, LBRM-CC-lumped's nine parameters were calibrated against the Kling–Gupta efficiency (KGE; Gupta et al., 2009) using a dynamically dimensioned search (DDS) algorithm (Tolson and Shoemaker, 2007) run for 300 iterations within the OSTRICH optimization package (Matott, 2017). A list of the nine calibrated parameters is given in Table S3 in the Supplement. LBRM-CC-lumped was modified for this project to output actual evapotranspiration (AET) in addition to the conventional outputs, which included snow water equivalent. LBRM does not provide soil moisture as a volumetric fraction (volume per volume). Accordingly, we used the LBRM-CC-lumped upper soil zone moisture (mm) as a proxy for surface soil moisture. It should be noted that, subsequent to the posting of model results, an error was found in the calibration setup that resulted in ignoring an important constraint on the proportionality constant controlling potential evapotranspiration (PET; see Lofgren and Rouhana, 2016, for an explanation of this constraint). 
In addition, the LBRM-CC-lumped modeling team found that an improved representation of the long-term average temperatures applied in the PET formulation improves the AET simulations in some tested watersheds (PET is a function of the difference between daily temperature and long-term average temperature for the day of year). The impact of these bug fixes on the performance regarding streamflow or other variables like evapotranspiration across the entire study domain is not yet clear and will need to be confirmed through the recalibration of the model. Accordingly, accounting for these PET modifications may improve the LBRM-CC-lumped performance in simulating AET in future studies. Because LBRM/LBRM-CC is conventionally used for simulating seasonal and long-term changes in runoff contributions to the lakes' water balance, the evaluation has previously focused on simulated runoff; simulated AET, surface soil moisture (SSM), and snow water equivalent (SWE) are not typically evaluated. However, LBRM studies, such as those by Lofgren and Rouhana (2016) and Lofgren et al. (2011), did evaluate simulated AET, leading to the development of the LBRM-CC-lumped. More model details are given in Sect. S3.2 in the Supplement.

-

HYMOD2-lumped. HYMOD2 (Roy et al., 2017) is a simple conceptual hydrologic model simulating rainfall–runoff processes on a basin scale. Due to its lumped nature, it will be referred to as HYMOD2-lumped hereafter. It produces daily streamflow at basin level (lumped) by using daily precipitation and PET. The latter is calculated in this study with the Hargreaves method (Hargreaves and Samani, 1985) from the minimum and maximum daily temperatures. Additionally, the version of HYMOD2-lumped used in this study includes snowmelt and rain–snow partitioning, for which temperature and relative humidity data are required. The basin drainage area and latitude were the other two pieces of information relevant for the version of the model implemented in this study. All forcing data and geophysical basin characteristics used are provided in the common dataset (Sect. 2.2). In total, 11 model parameters are calibrated against the KGE using the shuffled complex evolution (SCE) algorithm (Duan et al., 1992). The SCE was set up with two complexes and a maximum of 25 (internal) loops, and the algorithm converged on average after 1800 model evaluations. One calibration trial was performed for each basin. The list of the 11 calibrated parameters is provided in Table S4 in the Supplement. The model variables for AET and SWE are vertically lumped and saved as model outputs to evaluate them against reference datasets. The proxy variable used for SSM is the storage content in the nonlinear storage tank (C) from which the runoff is derived (see the schematic diagram of HYMOD2 shown in Fig. 4 of Roy et al., 2017). This storage content is written as a function of the height of water in the tank as follows: $C = C_\mathrm{max}\,[1 - (1 - H/H_\mathrm{max})^{1+b}]$. H and Hmax represent the height and maximum height of water, respectively, while b is a unitless shape parameter, and Cmax is the maximum storage capacity. Additional model details can be found in Sect. S3.3 in the Supplement.
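The role of latitude and of the daily temperature extremes in the PET calculation can be sketched as follows. This is a minimal illustration of the Hargreaves and Samani (1985) equation, with extraterrestrial radiation computed using the widely used FAO-56 formulation; the function names are hypothetical, and the HYMOD2-lumped implementation used in the study may differ in detail.

```python
import math


def extraterrestrial_radiation(lat_deg, doy):
    """Daily extraterrestrial radiation Ra (MJ m-2 d-1), FAO-56 formulation."""
    gsc = 0.0820  # solar constant in MJ m-2 min-1
    phi = math.radians(lat_deg)
    # Inverse relative Earth-Sun distance and solar declination
    dr = 1.0 + 0.033 * math.cos(2.0 * math.pi * doy / 365.0)
    delta = 0.409 * math.sin(2.0 * math.pi * doy / 365.0 - 1.39)
    # Sunset hour angle, clamped so that polar day/night does not fail
    x = max(-1.0, min(1.0, -math.tan(phi) * math.tan(delta)))
    ws = math.acos(x)
    return (24.0 * 60.0 / math.pi) * gsc * dr * (
        ws * math.sin(phi) * math.sin(delta)
        + math.cos(phi) * math.cos(delta) * math.sin(ws)
    )


def hargreaves_pet(tmin_c, tmax_c, lat_deg, doy):
    """Potential evapotranspiration (mm/d) after Hargreaves and Samani (1985)."""
    ra_mm = 0.408 * extraterrestrial_radiation(lat_deg, doy)  # MJ m-2 d-1 -> mm/d
    tmean = 0.5 * (tmin_c + tmax_c)
    trange = max(tmax_c - tmin_c, 0.0)
    # PET is truncated at zero for very cold days (a choice of this sketch)
    return max(0.0, 0.0023 * ra_mm * (tmean + 17.8) * math.sqrt(trange))


if __name__ == "__main__":
    # A summer day at 45 degrees north with Tmin = 10 degC and Tmax = 25 degC
    print(round(hargreaves_pet(10.0, 25.0, 45.0, 180), 2))
```

Latitude and day of year only enter through the radiation term, which is why the basin latitude is needed in addition to the temperature forcings.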

-

GR4J-lumped, HMETS-lumped, and Blended-lumped. The models GR4J (Perrin et al., 2003), HMETS (Martel et al., 2017), and the Blended model (Mai et al., 2020a) are set up within the Raven hydrologic modeling framework (Craig et al., 2020). The models are set up in the lumped mode and will therefore be called GR4J-lumped, HMETS-lumped, and Blended-lumped, respectively, hereafter. The three models run on a daily time step. The forcings required are daily precipitation and minimum and maximum daily temperature. The models require the following static basin attributes, which are all derived from the common set of geophysical data (Sect. 2.2): basin center latitude and longitude in degrees (°), basin area in kilometers squared (km2), basin average elevation in meters (m), basin average slope in degrees (°), and fraction of forest cover in the basin (only HMETS-lumped and Blended-lumped). The models are calibrated against KGE using the DDS algorithm. The models GR4J-lumped and HMETS-lumped were given a budget of 500 model evaluations per calibration trial, while the Blended-lumped model, which has more parameters than the other two models, was given a budget of 2000 model evaluations per trial. In total, 50 independent calibration trials per model and basin were performed; the best trial (largest KGE) was designated as the calibrated model. The tables of calibrated parameters can be found in Tables S5–S7 in the Supplement. For model evaluation, the model outputs for actual evapotranspiration (AET) are saved using the Raven custom output AET_Daily_Average_BySubbasin. The surface soil moisture (SSM) is derived using the saved custom output SOIL[0]_Daily_Average_BySubbasin, which is the water content Θ in millimeters (mm) of the top soil layer and is converted using the unitless porosity ϕ and the soil layer thickness τ0 in millimeters (mm) into the unitless soil moisture $\mathrm{SSM} = \Theta / (\phi\,\tau_0)$. The snow water equivalent estimate is the custom Raven output SNOW_Daily_Average_BySubbasin. Additional details for these three models can be found in Sects. S3.4–S3.6 in the Supplement.

-

Blended-Raven. The semi-distributed version of the above Blended model is also set up within the Raven hydrologic modeling framework. The setup is similar to that of the Blended-lumped model, with the difference that, instead of modeling each basin in a lumped mode, each basin is broken into subbasins (2187 in total across all calibration basins and 2170 overall in validation basins), and these subbasins are further discretized into two different hydrologic response units (lake and non-lake). The simulated streamflows are routed between subbasins using the common routing product (Sect. 2.3) within Raven; the model is hence called Blended-Raven hereafter. The model produces simulation results at the subbasin level, but outputs are only saved for the most downstream subbasins (141 calibration; 71 validation). The model uses the same forcing variables (gridded and not lumped) and basin attributes as the Blended-lumped model. Calibration, validation, and evaluation are exactly the same as described for the Blended-lumped model above. The model parameters calibrated can be found in Sect. S3.7 and Table S8 in the Supplement.

-

VIC-Raven. The Variable Infiltration Capacity (VIC) model (Liang et al., 1994; Hamman et al., 2018) is a semi-distributed macroscale hydrologic model that has been widely applied in water and energy balance studies (Newman et al., 2017; Xia et al., 2018; Yang et al., 2019). In this study, VIC was forced with the gridded RDRS-v2 meteorological data, including precipitation, air temperature, atmospheric pressure, incoming shortwave radiation, incoming longwave radiation, vapor pressure, and wind speed at the 6 h time step and 10 km by 10 km spatial resolution. Other data, such as topography, soil, and land cover, are derived from the datasets described in Sect. 2.2. VIC was used to simulate runoff generation at the grid cell level, and the resultant daily gridded runoff and recharge fluxes were aggregated to subbasin level for the Raven routing, which then produced daily streamflow at the outlet of each catchment. The VIC model using Raven routing will be referred to as VIC-Raven hereafter. In total, 14 parameters of the VIC-Raven model were calibrated at each of the 141 calibration catchments using the DDS algorithm to maximize the KGE. The optimization was repeated for 20 trials, each with 1000 calibration iterations, and the best result out of the 20 trials was designated as the calibrated model. The VIC model outputs, including soil moisture, evaporation, and snow water equivalent in the snowpack, are saved for model evaluation. A list of the 14 calibrated model parameters and more details about the VIC-Raven setup can be found in Table S9 and Sect. S3.8 in the Supplement, respectively.
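All locally calibrated models above use the Kling–Gupta efficiency as the calibration objective. The following minimal sketch shows the KGE in the form given by Gupta et al. (2009), combining correlation, variability ratio, and bias ratio; the function name and the input checks are choices made for this illustration and are not taken from any of the participating models.

```python
import math


def kge(sim, obs):
    """Kling-Gupta efficiency of simulated vs. observed streamflow.

    KGE = 1 - sqrt((r - 1)^2 + (alpha - 1)^2 + (beta - 1)^2), where r is
    the linear correlation, alpha the ratio of standard deviations
    (sim/obs), and beta the ratio of means (sim/obs).
    """
    if len(sim) != len(obs) or len(obs) < 2:
        raise ValueError("series must have equal length of at least 2")
    n = len(obs)
    mean_s = sum(sim) / n
    mean_o = sum(obs) / n
    var_s = sum((s - mean_s) ** 2 for s in sim) / n
    var_o = sum((o - mean_o) ** 2 for o in obs) / n
    cov = sum((s - mean_s) * (o - mean_o) for s, o in zip(sim, obs)) / n
    if var_s == 0.0 or var_o == 0.0 or mean_o == 0.0:
        raise ValueError("KGE is undefined for constant series or zero mean")
    r = cov / math.sqrt(var_s * var_o)
    alpha = math.sqrt(var_s / var_o)
    beta = mean_s / mean_o
    return 1.0 - math.sqrt((r - 1.0) ** 2 + (alpha - 1.0) ** 2 + (beta - 1.0) ** 2)


if __name__ == "__main__":
    observed = [1.0, 2.0, 3.0, 4.0]
    print(kge(observed, observed))                   # perfect simulation
    print(kge([2.0 * q for q in observed], observed))  # flows doubled
```

A perfect simulation yields a KGE of 1; when several calibration trials are run, the trial with the largest KGE is the one designated as the calibrated model.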

2.4.3 Regionally calibrated models

Models following the regional calibration strategy focused on simultaneously calibrating model parameters to all calibration stations within a region (rather than estimating parameters for each gauged basin individually). All regionally calibrated models utilized the same six subdomains (here called regions) which are the Ottawa River watershed and the local watersheds of Lake Erie, Lake Ontario, etc. (see Fig. 1c). This results in six model setups for the whole study domain for each of the regionally calibrated models. These models can be run for validation stations using those regional setups as soon as it is known in which region the validation basin is located.
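The model descriptions in this section state that the regional objective is the median of the KGE values over all calibration gauges in a region. A minimal sketch of that aggregation is given below; the function names and the example values are hypothetical and only illustrate how one parameter set per region is selected.

```python
from statistics import median


def regional_objective(kge_by_basin):
    """Median KGE over all calibration basins of one region."""
    if not kge_by_basin:
        raise ValueError("no calibration basins in this region")
    return median(kge_by_basin.values())


def best_candidate(candidates):
    """Pick the parameter set with the largest regional objective.

    candidates: list of (parameter_set_label, {basin_id: KGE}) tuples, e.g.
        one entry per calibration iteration (hypothetical structure).
    """
    return max(candidates, key=lambda c: regional_objective(c[1]))


if __name__ == "__main__":
    candidates = [
        ("p1", {"a": 0.9, "b": 0.1, "c": 0.2}),  # excellent in one basin only
        ("p2", {"a": 0.5, "b": 0.6, "c": 0.4}),  # moderate everywhere
    ]
    print(best_candidate(candidates)[0])
```

Using the median rewards parameter sets that perform reasonably across the region rather than very well at a single gauge.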

-

SWAT-Raven. The Soil and Water Assessment Tool (SWAT) was originally developed by the U.S. Department of Agriculture and is a semi-distributed, physically based hydrologic and water quality model (Arnold et al., 1998). For each of the six regions, the geophysical datasets (DEM, soil, and land use), as presented in Sect. 2.2, were used to create subbasins which were further discretized into hydrologic response units. Subbasin-averaged daily forcing data (maximum and minimum temperature and precipitation), as presented in Sect. 2.2, were then used to simulate land–surface processes. The vertical flux (total runoff) produced at the subbasin spatial scale was then fed to a lake and river routing product (Han et al., 2020), integrated into the Raven modeling framework (Craig et al., 2020), for subsequent routing. Note that the SWAT subbasins and routing product subbasins are not equivalent, and hence, aggregation to routing product subbasins was necessary, similar to the aggregation for gridded models. The integrated SWAT-Raven model was calibrated against daily streamflow using the OSTRICH platform (Matott, 2017) by optimizing the median KGE values of all the calibration gauges in each region using 17 SWAT-related and two Raven-related parameters. See Sect. S3.9 and Table S10 in the Supplement for further details of the parameters and model. The calibration budget consisted of three trials, each with 500 iterations, and the best result out of the three trials was designated as the calibrated model. SWAT-simulated daily actual evapotranspiration, volumetric soil moisture (aggregated for the top 10 cm), and snow water equivalent at the subbasin spatial scale were used to further evaluate the model.

-

WATFLOOD-Raven. WATFLOOD is a semi-distributed, gridded, physically based hydrologic model (Kouwen, 1988). A separate WATFLOOD model for each of the six regions (Fig. 1c) was developed using the common geophysical datasets and hourly precipitation and temperature data at a regular rotated grid of about 0.09 decimal degrees (≈10 km). Model grids were further discretized into grouped response units. Hourly gridded runoff and recharge to lower zone storage (LZS) were aggregated to the subbasin level for the Raven routing, which then produced hourly streamflow at the outlet of each catchment. Streamflow calibration and validation of the integrated WATFLOOD-Raven was carried out using the same methodology (different parameters) as described in the previous section for SWAT-Raven. We refer to Sect. S3.10 and Table S11 in the Supplement for further details of the 11 WATFLOOD and 6 Raven parameters used during model calibration. Actual evapotranspiration and snow water equivalent are direct outputs of the WATFLOOD model and were used to evaluate the model. Volumetric soil moisture, however, is not a state variable of the model. Hence, the rescaled upper zone storage (UZS) was used as a proxy to qualitatively evaluate the volumetric soil moisture.

-

MESH-CLASS-Raven. MESH (Modélisation Environnementale communautaire-Surface and Hydrology) is a community hydrologic modeling platform maintained by Environment and Climate Change Canada (ECCC; Pietroniro et al., 2007). MESH uses a grid-based modeling system and accounts for subgrid heterogeneity using the grouped response units (GRUs) concept from WATFLOOD (Kouwen et al., 1993). GRUs aggregate subgrid areas by common attributes (e.g., soil and vegetation characteristics) and facilitate parameter transferability in space (e.g., Haghnegahdar et al., 2015). The MESH model setup here is using the Canadian Land Surface Scheme (CLASS) to produce grid-based model outputs, which are passed to the Raven routing to generate the streamflow at the locations used in this study. The model will hence be referred to as MESH-CLASS-Raven hereafter. The following meteorologic forcing variables from the RDRS-v2 dataset (Sect. 2.2) are used to drive CLASS: incoming shortwave radiation, incoming longwave radiation, precipitation rate, air temperature, wind speed, barometric pressure, and specific humidity (all provided at or near surface level). The model simulates vertical energy and water fluxes for vegetation, soil, and snow. The MESH-CLASS-Raven grid is the same as the forcing data (≈10 km by 10 km) and produces outputs at an hourly time step. The MESH-CLASS streamflow outputs were aggregated to a daily time step and then aggregated to subbasin level for the Raven routing, which then produced daily streamflow at the outlet of each subbasin. Streamflow calibration and validation of the integrated MESH-CLASS-Raven model was carried out using the same methodology (different parameters) as described in the previous sections for SWAT-Raven and WATFLOOD-Raven. The calibration algorithm chosen is the DDS algorithm, with a single trial and a maximum of 240 iterations (per region). A list of the 20 model parameters calibrated is available in Sect. S3.11 and Table S12 in the Supplement. 
The calibration was computationally time-consuming (at least 2 weeks for each region). For model evaluation, the MESH output control flag was used to activate the output of the daily average auxiliary variables (AET, SSM, SWE). The actual evapotranspiration (AET in mm) was directly computed by the model while the surface soil moisture (SSM in m3 m−3) was obtained by adding the volumetric liquid water content of the soil (in m3 m−3) to the volumetric frozen water content of the soil (in m3 m−3). The snow water equivalent (SWE in mm) was obtained by adding the snow water equivalent of the snowpack at the surface (in mm), with liquid water storage held in the snow (e.g., meltwater temporarily held in the snowpack during melt; in mm).

-

MESH-SVS-Raven. The MESH (Modélisation Environnementale communautaire-Surface and Hydrology) model not only includes the CLASS land surface scheme (LSS) but also the soil, vegetation, and snow (SVS; Alavi et al., 2016) LSS and the Watroute routing scheme (Kouwen, 2018). For example, MESH allows us to emulate the GEM-Hydro-Watroute model outside of the ECCC informatics infrastructure. In this study, however, the hourly MESH-SVS gridded outputs of total runoff and drainage were provided to the Raven routing scheme, which is computationally less expensive than the Watroute routing scheme (more information in Sect. S3.13 in the Supplement). The model will be referred to as MESH-SVS-Raven hereafter. The following meteorological forcing variables from the RDRS-v2 dataset (Sect. 2.2) are used to drive the SVS LSS (same as those used for MESH-CLASS-Raven): incoming shortwave radiation, incoming longwave radiation, precipitation rate, air temperature, wind speed, barometric pressure, and specific humidity. SVS also needs geophysical fields such as soil texture, vegetation cover, slope, and drainage density. All of these variables were derived from the common geophysical datasets used for this project (Sect. 2.2), except for drainage density, which is derived from the National Hydro Network (NHN) dataset over Canada (Natural Resources Canada, 2020) and from the National Hydrography Dataset (NHD) over the United States of America (USGS, 2021). In this study, the MESH-SVS-Raven grid is the same as the forcing data (around 10 km by 10 km), and SVS was run using a 10 min time step. The Raven routing was used with a 6 h time step. Routing results were aggregated to a daily time step for model performance evaluation. MESH-SVS-Raven was calibrated using a global calibration approach (Gaborit et al., 2015) but applied to each of the six regions of the complete area of interest, therefore resulting in what is called in this study a regional calibration method. 
The objective function consists of the median of the KGE values obtained for the calibration basins located in a region. The calibration algorithm consists of the DDS algorithm, with a single calibration trial and a maximum of 240 iterations, which took about 10 d to complete for each region. The list of the 23 parameters calibrated is provided in Sect. S3.12 and Table S13 in the Supplement. Regarding the MESH-SVS-Raven auxiliary variables required for additional model evaluation, daily averages valid from 00:00 to 00:00 UTC were computed based on the hourly model outputs. For surface soil moisture (SSM), the average of the first two SVS soil layers was computed in order to obtain simulated soil moisture values valid over the first 10 cm of the soil column, which is the depth to which the GLEAM SSM values correspond. AET is a native output of the model, and the average SWE over a grid cell was computed based on a weighted average of the two SVS snowpacks, where SVS simulates snow over bare ground and low vegetation in parallel to snow under high vegetation (Alavi et al., 2016; Leonardini et al., 2021).

-

GEM-Hydro-Watroute. GEM-Hydro (Gaborit et al., 2017b) is a physically based, distributed hydrologic model developed at Environment and Climate Change Canada (ECCC). It relies on GEM-Surf (Bernier et al., 2011) to represent five different surface tiles (glaciers, water, ice over water, urban, and land). The land tile is represented with the SVS LSS (Alavi et al., 2016; Husain et al., 2016; Leonardini et al., 2021). GEM-Hydro also relies on Watroute (Kouwen, 2018), a 1D hydraulic model, to perform 2D channel and reservoir routing. The model will be referred to as GEM-Hydro-Watroute in this study. It was preferred to use its native gridded routing scheme of Watroute during this study, instead of the common Raven routing scheme used by other hydrologic models, in order to investigate the potential benefit of calibration for the experimental ECCC hydrologic forecasting system named the National Surface and River Prediction System (NSRPS; Durnford et al., 2021), which relies on GEM-Hydro-Watroute. GEM-Surf forcings are the same as those used for MESH-SVS-Raven, except that GEM-Surf needs both wind speed and wind direction (available in RDRS-v2), and surface water temperature and ice cover fraction (taken from the analyses of the ECCC Regional Deterministic Prediction System). Regarding the geophysical fields needed by GEM-Surf, they consist of the same as those used for MESH-SVS-Raven, except that some additional fields are required, like surface roughness and elevation (taken or derived from the common geophysical datasets used in this project). Moreover, the Watroute model used here mainly relies on HydroSHEDS 30 arcsec (≈1 km) flow direction data (HydroSHEDS, 2021), Global Multi-resolution Terrain Elevation Data 2010 (GMTED2010; USGS, 2010), and on Climate Change Initiative Land Cover (CCI-LC) 2015 vegetation cover (ESA, 2015). 
The surface component of GEM-Hydro-Watroute was employed here with the same resolution as the forcings (≈10 km) and with a 10 min time step, with outputs aggregated to hourly afterwards. The Watroute model used here has a 30 arcsec (≈1 km) spatial resolution and a dynamic time step ranging between 30 and 3600 s. Daily flow averages were used when computing model performances. GEM-Hydro-Watroute is computationally intensive; it was not optimized to perform long-term simulations but is rather oriented towards large-scale forecasting. Moreover, the Watroute routing scheme is computationally expensive, and its coupling with the surface component is not optimized either. See Sect. S3.13 in the Supplement for more information about GEM-Hydro-Watroute computational time. It is therefore very challenging to calibrate GEM-Hydro-Watroute directly. Several approaches have been tried to circumvent this issue in the past, and each approach has its own drawbacks (see Gaborit et al., 2017b; Mai et al., 2021). In this study, a new approach is explored, consisting of calibrating some SVS and routing parameters with MESH-SVS-Raven, transferring them back into GEM-Hydro-Watroute (more information in Sect. S3.13 and Table S14 in the Supplement), and further manually tuning Watroute Manning coefficients afterwards. However, because this approach leads to different performances for MESH-SVS-Raven and GEM-Hydro-Watroute for several reasons explained in Sect. S3.13 in the Supplement, they are presented here as two different models. Exactly the same approach as for MESH-SVS-Raven was used for GEM-Hydro-Watroute streamflow validation and for the additional model evaluation variables.

2.5 Model calibration and validation setup and datasets

The models participating in this study were calibrated and validated based on streamflow observations made available by either the Water Survey of Canada (WSC) or the U.S. Geological Survey (USGS). The streamflow gauging stations selected for this project had to have a (reported) drainage area of at least 200 km2 (to avoid overly flashy watershed responses in the hydrographs) and less than 5 % of missing (daily) data between 2000 and 2017, which is the study period, i.e., warmup period (January to December 2000), calibration period (January 2001 to December 2010), and validation period (January 2011 to December 2017). The low share of missing data was required to ensure that enough data are available to properly estimate the calibration and validation performance of the models. The calibration period (2001–2010) is known to be dry, while the validation period (2011–2017) is known to be very wet (US Army Corps of Engineers: Detroit District, 2020a). This might have an impact on model performances – especially in temporal validation experiments. In this study, no specific method has been applied to account for these trends in the meteorologic forcings.
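The two numerical selection criteria above (drainage area and data completeness) can be expressed as a simple filter. This is a minimal sketch with hypothetical function and argument names; it assumes that completeness is measured as the share of days with a valid daily observation over the full study period.

```python
from datetime import date

STUDY_START = date(2000, 1, 1)
STUDY_END = date(2017, 12, 31)


def is_eligible(drainage_area_km2, n_valid_days,
                min_area_km2=200.0, max_missing_frac=0.05):
    """Check the size and completeness criteria for one gauging station.

    n_valid_days: number of days with a streamflow observation between
        STUDY_START and STUDY_END (inclusive).
    """
    n_days = (STUDY_END - STUDY_START).days + 1  # 6575 days in 2000-2017
    missing_frac = 1.0 - n_valid_days / n_days
    return drainage_area_km2 >= min_area_km2 and missing_frac < max_missing_frac


if __name__ == "__main__":
    print(is_eligible(250.0, 6300))  # large enough, about 4 % missing
    print(is_eligible(150.0, 6575))  # complete record but basin too small
```

The remaining criteria (low human impact or most downstream location) are categorical and are described in the next paragraph.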

Besides a minimum size and data availability, the streamflow gauges need to be either downstream of a low human impact watershed (objective 1) or most downstream of areas draining into one of the five Great Lakes or into the Ottawa River (objective 2). If a watershed is most downstream and human impacts are low, the station would hence be classified as both objective 1 and 2. Objective 1 was defined to give all models – especially the ones without the possibility to account for watershed management rules – the ability to perform well. Objective 2 was chosen since the ultimate goal of many operational models in this region is to estimate the flow into the lakes (or the Ottawa River). This classification of gauges was part of the study design but ended up not being evaluated in this paper because each of the modeling teams decided to build their models using all objective 1 and objective 2 stations treated the same way. As such, our results do not distinguish performance differences for these two station and/or watershed types. The information is included here so that follow-up studies, by our team and others, can evaluate this aspect of the results.

The stations were selected such that they are distributed equally across the six regions (five lakes and the Ottawa River) and would be used for either calibration (cal) or validation (val). The assignment of a basin to be either used for calibration or validation was done such that two-thirds of the basins (141) are for training, and the remaining basins (71) are for validation. There are 33 stations located within the Lake Superior watershed (21 cal; 12 val), 45 within the Lake Huron watershed (29 cal; 16 val), 35 stations within the Lake Michigan watershed (27 cal; 8 val), 48 locations within the Lake Erie watershed (36 cal; 12 val), 31 stations within the Lake Ontario watershed (21 cal; 10 val), and 20 stations located within the Ottawa River watershed (7 cal; 13 val). The location of these 212 streamflow gauging stations is displayed in Fig. 1. A list of the 212 gauging stations, including their area, region they are located in, objectives they were selected for, and whether they were used for calibration or validation is given in Table S15 in the Supplement.
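The station counts per region given above can be cross-checked with a few lines of arithmetic. The dictionary below simply transcribes the numbers from the text; the variable names are chosen for this illustration.

```python
# (calibration, validation) station counts per region, as stated in the text
STATIONS = {
    "Lake Superior": (21, 12),
    "Lake Huron": (29, 16),
    "Lake Michigan": (27, 8),
    "Lake Erie": (36, 12),
    "Lake Ontario": (21, 10),
    "Ottawa River": (7, 13),
}

n_cal = sum(cal for cal, _ in STATIONS.values())
n_val = sum(val for _, val in STATIONS.values())
region_totals = {name: cal + val for name, (cal, val) in STATIONS.items()}

if __name__ == "__main__":
    print(n_cal, n_val, n_cal + n_val)      # calibration, validation, total
    print(round(n_cal / (n_cal + n_val), 3))  # share of calibration basins
```

The totals reproduce the 141 calibration and 71 validation basins, i.e., about two-thirds of the 212 stations are used for calibration.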

To summarize the steps of calibration and validation of all models regarding streamflow, we provide the following list:

- A. Calibration was performed for 141 calibration locations for the period January 2001 to December 2010 (2000 was discarded as warmup).
- B. Temporal validation was performed for the 141 locations at which the models were calibrated, now evaluating the simulated streamflow from January 2011 until December 2017.
- C. Spatial validation was performed for 71 validation locations from January 2001 until December 2010.
- D. Spatiotemporal validation was performed for 71 validation locations from January 2011 until December 2017.
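The four experiments A to D are fully determined by two properties: whether a basin was used for calibration and in which period a simulated day falls. A minimal sketch of this mapping, with hypothetical names, is:

```python
from datetime import date

CAL_START, CAL_END = date(2001, 1, 1), date(2010, 12, 31)
VAL_START, VAL_END = date(2011, 1, 1), date(2017, 12, 31)


def experiment(is_calibration_basin, day):
    """Return the experiment label (A to D) for a basin type and a date.

    Returns None for the warmup year 2000 and for dates outside the
    study period.
    """
    if CAL_START <= day <= CAL_END:
        return "A: calibration" if is_calibration_basin else "C: spatial validation"
    if VAL_START <= day <= VAL_END:
        if is_calibration_basin:
            return "B: temporal validation"
        return "D: spatiotemporal validation"
    return None


if __name__ == "__main__":
    print(experiment(True, date(2005, 6, 1)))
    print(experiment(False, date(2015, 6, 1)))
```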

The temporal validation (B; basin known but time period not trained) can be regarded as being the easiest of the three validation tasks (assuming climate change impacts are not too strong, no major land cover changes happened, etc.). The spatial validation (C; time period trained but location untrained) can be regarded as being more difficult, especially for locally calibrated models, given that one either needs a global/regional model setup or a good parameter transfer strategy for ungauged/uncalibrated locations. The spatiotemporal validation (D) can be regarded as being the most difficult validation experiment as both the location and time period were not included in model training. The two latter validation experiments, including the spatial transfer of knowledge, provide an assessment of the combined quality of a model which, in this context, includes the model structure/equations, calibration approach, and regionalization strategy.

All locally calibrated models used the same (simple) donor basin mapping for assigning model parameter values from the calibrated basins to other basins used for spatial and spatiotemporal validation. The donor basins were assigned using the following strategy: (a) if there exists a nested basin (either upstream or downstream) that was trained, then use that basin as donor, (b) if there are multiple such nested candidates, then use the one that is the most similar with respect to drainage area, and (c) if there is no nested basin trained, then use the calibration basin whose spatial centroid is the closest to the spatial centroid of the validation basin. There are certainly more appropriate/advanced ways to do regionalization, but the main objective was to ensure that the models used the same approach. We wanted to avoid favoring a few models by testing more advanced approaches with them and then applying that method to other models which might have favored other strategies. The donor basin mapping is provided in Table S16 in the Supplement.
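The donor assignment rules (a) to (c) can be sketched as follows. The `Basin` class, the use of the absolute area difference as the similarity measure, and the Euclidean distance between centroids in a projected coordinate system are all assumptions of this illustration; the mapping actually used is the one provided in Table S16 in the Supplement.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Basin:
    name: str
    area_km2: float
    centroid: tuple  # (x, y) in a projected coordinate system (assumption)
    nested_with: set = field(default_factory=set)  # names of up/downstream basins


def pick_donor(target, calibrated):
    """Select a donor basin for an uncalibrated target basin.

    (a)/(b): prefer calibrated basins nested with the target, choosing the
             one with the most similar drainage area (absolute difference,
             an assumption of this sketch).
    (c):     otherwise use the calibrated basin with the closest centroid.
    """
    nested = [b for b in calibrated if b.name in target.nested_with]
    if nested:
        return min(nested, key=lambda b: abs(b.area_km2 - target.area_km2))
    return min(calibrated, key=lambda b: math.dist(b.centroid, target.centroid))


if __name__ == "__main__":
    calibrated = [
        Basin("A", 500.0, (0.0, 0.0)),
        Basin("B", 1200.0, (10.0, 0.0)),
        Basin("C", 300.0, (100.0, 100.0)),
    ]
    nested_target = Basin("V1", 1000.0, (90.0, 90.0), nested_with={"A", "B"})
    isolated_target = Basin("V2", 1000.0, (90.0, 90.0))
    print(pick_donor(nested_target, calibrated).name)
    print(pick_donor(isolated_target, calibrated).name)
```

In the example, the nested target receives its parameters from the nested basin with the closest area even though another calibrated basin is geographically nearer, which mirrors the priority of rules (a) and (b) over rule (c).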

The WSC and USGS streamflow data were downloaded by the project leads, the units were converted to be consistent in cubic meters per second (m3 s−1), and then shared with the team in both CSV and NetCDF file formats to make sure all team members used the same version of the data for calibration. Note that at first only the data from the 141 calibration locations was shared. The validation locations and respective observations were only made available after the calibration of all models was finished to allow for a true blind (spatial and spatiotemporal) validation.

2.6 Model evaluation setup and datasets

In order to assess the model performance beyond streamflow, a so-called model evaluation was performed. Model evaluation means that a model was not (explicitly) trained against any of the data used for evaluation. We distinguish model evaluation from the model validation of streamflow because, when building the models, although the modeling teams knew their model would be assessed based on streamflow validation, they did not know in advance that additional variables beyond streamflow would be assessed against other observations (this was only decided later in the study). It is not known if the models have been trained in previous studies against any of the observations we use for validation or model evaluation. Any model previously using such data to inform model structure or process formulations might have an advantage relative to another model whose structural development did not involve testing in this region. Such model developments are, however, inherent to modeling in general and are regarded here as part of the model quality that is being assessed.

The evaluation datasets were chosen based on the following criteria: (a) they had to be gridded to allow for a consistent evaluation approach across all additional variables, (b) the time step of the variables preferably had to be daily to match the time step of the primary streamflow variable, (c) they had to be available over the Great Lakes for a long time period between 2001 and 2017 (study period) to allow for a reliably long record in time and space, (d) they had to be open source to allow for the reproducibility of the analysis, (e) they had to be variables typically simulated in a wide range of hydrologic and land surface models, and (f) they had to have been at least partially derived from distributed observational datasets for the region (e.g., ground or satellite observations).

Two evaluation variables were taken from the GLEAM (Global Land Evaporation Amsterdam Model) v3.5b dataset (Martens et al., 2017). GLEAM is a set of algorithms using satellite observations of climatic and environmental variables to estimate the different components of land evaporation, surface and root zone soil moisture, potential evaporation, and evaporative stress conditions. The first variable used in this project is actual evapotranspiration (AET; variable E in the dataset; see Fig. 2b) given in millimeters per day (mm d⁻¹), and the second variable is surface soil moisture (SSM; variable SMsurf in the dataset; see Fig. 2c) given in cubic meters per cubic meter (m³ m⁻³) and valid for the first 10 cm of the soil profile. Both variables are available over the entire study domain on a 0.25° regular grid and for the time period from 2003 until 2017 with a daily resolution.

The third evaluation variable is snow water equivalent (SWE), which is available in the ERA5-Land dataset (Muñoz Sabater, 2019, variable sd therein; see Fig. 2d). ERA5-Land combines a large number of historical observations into global estimates of surface variables using advanced modeling and data assimilation systems. ERA5-Land is available for the entire study period and domain on a daily temporal scale and a 0.1° regular grid. The data are given in meters of water equivalent and have been converted to kilograms per square meter (i.e., millimeters) to allow for comparison with model outputs.
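The unit conversion mentioned above amounts to a multiplication by the density of water. The following minimal sketch illustrates this; the function name and the constant density of 1000 kg m⁻³ are assumptions made for illustration and are not taken from the project's processing scripts.

```python
# Assumed constant density of liquid water (kg m^-3); illustrative only.
WATER_DENSITY_KG_M3 = 1000.0


def swe_m_to_kg_m2(swe_m):
    """Convert SWE from meters of water equivalent to kg m^-2.

    With a water density of 1000 kg m^-3, 1 kg m^-2 corresponds to a
    water depth of 1 mm, so the result can also be read as millimeters.
    """
    return swe_m * WATER_DENSITY_KG_M3


# Hypothetical value: 0.125 m of water equivalent
swe_mm = swe_m_to_kg_m2(0.125)
print(swe_mm)
```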

The ERA5-Land dataset was chosen over the available ground truth SWE observations like the CanSWE dataset (Canadian historical Snow Water Equivalent dataset; Vionnet et al., 2021) because (a) the ERA5-Land dataset is available over the entire modeling domain, while CanSWE is only available for the Canadian portion, (b) the ERA5-Land dataset is gridded, allowing for similar comparison approaches as used for AET and SSM, and (c) the ERA5-Land dataset is available on a daily scale, while the frequency of data in CanSWE varies from biweekly to monthly, which would have limited the number of data available for model evaluation. We nevertheless provide a comparison of ERA5-Land and CanSWE: for each grid cell containing at least one CanSWE snow observation station, we derive the KGE between the two time series over the days on which both datasets provide estimates. The results show that 83 % of the locations show at least medium agreement between the ERA5-Land SWE estimates and the SWE observations provided through the CanSWE dataset (KGE greater than or equal to 0.48). A detailed description and display of this comparative analysis can be found in Sect. S1 in the Supplement.

These three auxiliary evaluation datasets were downloaded by the project leads, and the annual files were merged into single files such that the team only had to deal with one file per variable. The data were subsequently cropped to the modeling domain and then distributed to the project teams in standard NetCDF files with a common structure (e.g., spatial variables are labeled in the same way) to make processing easier. Besides these preprocessing steps, the gridded datasets were additionally aggregated to the basin scale, resulting in 212 files, one for each basin, to allow for not only a grid-cell-wise comparison but also a basin-level comparison. The reader should refer to Sects. 2.7 and 2.8 for more details on performance metrics and basin-wise/grid-cell-wise comparison details, respectively.

2.7 Performance metrics

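In this study, the Kling–Gupta efficiency (KGE; Gupta et al., 2009) and its three components α, β, and r are used for streamflow calibration and validation and for model evaluation regarding AET, SSM, and SWE. The component α measures the relative variability in a simulated versus an observed time series (e.g., Q(t) or the time series of an evaluation variable for one grid cell). The component β measures the bias of a simulated versus an observed time series, while the component r measures the Pearson correlation of a simulated versus an observed time series. The overall KGE is based on the Euclidean distance from the ideal point in the untransformed criteria space, converted to the range of the Nash–Sutcliffe efficiency (NSE); i.e., optimal performance results in a KGE and NSE of 1, and suboptimal performance yields values lower than 1. The KGE is defined as follows:

$$\mathrm{KGE} = 1 - \sqrt{(1-\alpha)^2 + (1-\beta)^2 + (1-r)^2} \tag{1}$$

To make comparisons easier, we transformed the range of the KGE components to match the range of the KGE. We introduce the following component metrics:

$$\mathrm{KGE}_{\alpha} = 1 - \sqrt{(1-\alpha)^2}, \quad \alpha = \frac{\sigma(y_{\mathrm{sim}})}{\sigma(y_{\mathrm{obs}})} \tag{2}$$

$$\mathrm{KGE}_{\beta} = 1 - \sqrt{(1-\beta)^2}, \quad \beta = \frac{\mu(y_{\mathrm{sim}})}{\mu(y_{\mathrm{obs}})} \tag{3}$$

$$\mathrm{KGE}_{r} = 1 - \sqrt{(1-r)^2}, \quad r = \frac{\mathrm{cov}(y_{\mathrm{sim}}, y_{\mathrm{obs}})}{\sigma(y_{\mathrm{sim}})\,\sigma(y_{\mathrm{obs}})} \tag{4}$$

with y being a time series (either simulated, sim, or observed, obs), μ and σ denoting its mean and standard deviation, and r being the Pearson correlation coefficient.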
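The KGE and its rescaled component metrics described above can be sketched in a few lines of Python. This is a minimal illustration assuming the standard Gupta et al. (2009) formulation with population statistics and complete, equally long time series; the function names are hypothetical, and handling of missing values is deliberately omitted.

```python
import math
from statistics import fmean, pstdev


def kge_components(sim, obs):
    """Return (alpha, beta, r) for two equally long time series."""
    mu_sim, mu_obs = fmean(sim), fmean(obs)
    sd_sim, sd_obs = pstdev(sim), pstdev(obs)
    cov = fmean([(s - mu_sim) * (o - mu_obs) for s, o in zip(sim, obs)])
    alpha = sd_sim / sd_obs          # relative variability
    beta = mu_sim / mu_obs           # bias ratio
    r = cov / (sd_sim * sd_obs)      # Pearson correlation
    return alpha, beta, r


def kge(sim, obs):
    """Overall KGE: 1 minus the Euclidean distance from the ideal point (1, 1, 1)."""
    alpha, beta, r = kge_components(sim, obs)
    return 1.0 - math.sqrt((1 - alpha) ** 2 + (1 - beta) ** 2 + (1 - r) ** 2)


def kge_rescaled_components(sim, obs):
    """Component metrics rescaled to the range of the KGE (optimum at 1)."""
    alpha, beta, r = kge_components(sim, obs)
    return {
        "KGE_alpha": 1.0 - abs(1 - alpha),
        "KGE_beta": 1.0 - abs(1 - beta),
        "KGE_r": 1.0 - abs(1 - r),
    }


# Made-up example series: the simulation is exactly twice the observation,
# so alpha = beta = 2 and r = 1.
obs = [1.0, 2.0, 3.0, 4.0]
sim = [2.0 * q for q in obs]
print(kge(sim, obs), kge_rescaled_components(sim, obs))
```

In this example, the perfect correlation is masked in the overall KGE by the errors in bias and variability, which is why inspecting the components separately can be informative.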

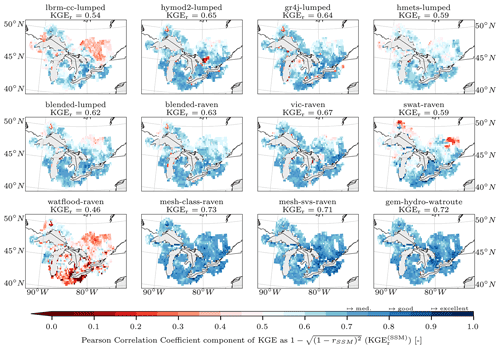

We use the overall KGE to estimate the model performances regarding streamflow (components are shown in the Supplement only), AET, and SWE. For surface soil moisture, we used the correlation component of KGE (KGEr) since most models do not explicitly simulate soil moisture but only saturation or soil water storage in a conceptual soil layer where depth is not explicitly modeled. We therefore agreed to report only the standardized soil moisture simulations, which are defined as follows:

$$\mathrm{SSM}'(t) = \frac{\mathrm{SSM}(t) - \mu_{\mathrm{SSM}}}{\sigma_{\mathrm{SSM}}} \tag{5}$$

where μSSM is the mean and σSSM denotes the standard deviation of a surface soil moisture time series. The mean and variance of the standardized time series SSM′ hence collapse to 0 and 1, respectively, and the KGE components β and α are noninformative. The Pearson correlation r, however, is informative. Note that the Pearson correlation is the same for standardized and non-standardized datasets, i.e., $r(\mathrm{SSM}'_{\mathrm{sim}}, \mathrm{SSM}'_{\mathrm{obs}}) = r(\mathrm{SSM}_{\mathrm{sim}}, \mathrm{SSM}_{\mathrm{obs}})$.
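The invariance of the Pearson correlation under standardization can be checked numerically. The sketch below uses made-up values for a reference soil moisture series and a simulated conceptual storage series in different units; all names and numbers are illustrative assumptions.

```python
from statistics import fmean, pstdev


def standardize(series):
    """Subtract the mean and divide by the standard deviation."""
    mu, sd = fmean(series), pstdev(series)
    return [(x - mu) / sd for x in series]


def pearson_r(a, b):
    """Pearson correlation coefficient of two equally long series."""
    mu_a, mu_b = fmean(a), fmean(b)
    cov = fmean([(x - mu_a) * (y - mu_b) for x, y in zip(a, b)])
    return cov / (pstdev(a) * pstdev(b))


# Hypothetical data: reference SSM in m3 m-3, simulated storage in mm
ssm_ref = [0.21, 0.25, 0.30, 0.27, 0.22]
storage_sim = [40.0, 55.0, 80.0, 60.0, 47.0]

r_raw = pearson_r(storage_sim, ssm_ref)
r_std = pearson_r(standardize(storage_sim), standardize(ssm_ref))
print(r_raw, r_std)  # identical up to floating-point rounding
```

Because the correlation does not depend on units or offsets, a model's saturation or storage output can be compared with the reference soil moisture without any assumption about soil layer depth.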

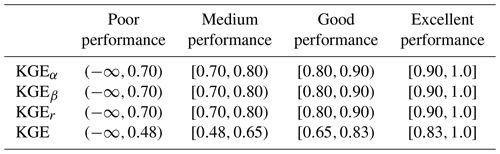

Several levels of model performance are introduced in this study to allow for a categorization of results and models. The thresholds defined in the following for the individual components have been chosen based on expert opinions and reflect the subjective level of quality expected from the models. The levels can be adapted to stricter or looser expectations. The thresholds of the components are then combined into the (derived) thresholds for KGE.

A medium performance is defined as bias and variability being over- or underestimated by at most 30 % and a correlation of at least 0.7. All performances below this are regarded as poor quality. A good performance is defined as bias and variability being over- or underestimated by at most 20 % and a correlation of at least 0.8. An excellent performance is defined as bias and variability being over- or underestimated by at most 10 % and a correlation of at least 0.9. The resulting ranges for the KGE and its three component performances are derived using Eqs. (1)–(4) and summarized in Table 2. These performance levels are consistently used throughout this study to categorize the model performances regarding streamflow and additional variables.
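As a worked illustration of how the component thresholds translate into KGE thresholds, the following sketch assumes that all three components sit exactly at their respective limits and inserts them into the KGE definition. The function names and the classification helper are hypothetical; the authoritative values are those summarized in Table 2.

```python
import math

# (level, max relative deviation of alpha and beta from 1, min correlation r),
# ordered from strictest to loosest as stated in the text.
LEVELS = [
    ("excellent", 0.10, 0.9),
    ("good", 0.20, 0.8),
    ("medium", 0.30, 0.7),
]


def kge_threshold(deviation, r_min):
    """KGE obtained when alpha and beta deviate from 1 by `deviation`
    and the correlation equals `r_min`."""
    return 1.0 - math.sqrt(deviation ** 2 + deviation ** 2 + (1.0 - r_min) ** 2)


def classify(kge_value):
    """Assign a performance level to a KGE value (illustrative helper)."""
    for name, deviation, r_min in LEVELS:
        if kge_value >= kge_threshold(deviation, r_min):
            return name
    return "poor"


for name, deviation, r_min in LEVELS:
    print(name, round(kge_threshold(deviation, r_min), 2))
```

Under these assumptions, the medium threshold evaluates to approximately 0.48, which matches the value quoted for the ERA5-Land and CanSWE comparison in Sect. 2.6.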

2.8 Basin-wise and grid-wise model comparisons for individual simulated variables

We decided to use two approaches to compare the model outputs with streamflow observations and the reference datasets for AET, SSM, and SWE in order to account for the wide range of spatial discretization approaches used by the participating models. In total, six models were set up in a lumped mode (basin-wise output), two models operated on a subbasin discretization, and five models were set up in a gridded mode where the model grids do not necessarily match the grid of the AET, SSM, or SWE reference datasets. Please refer to Table 1 for the spatial resolution of the individual models.

In the calibration and validation phases of the project (Sect. 2.5), the simulated time series of streamflow are compared in the traditional way with the observed time series of streamflow at gauging stations. We used the Kling–Gupta efficiency metric (Eq. 1) to compare the simulated daily streamflow estimates with the corresponding observations.

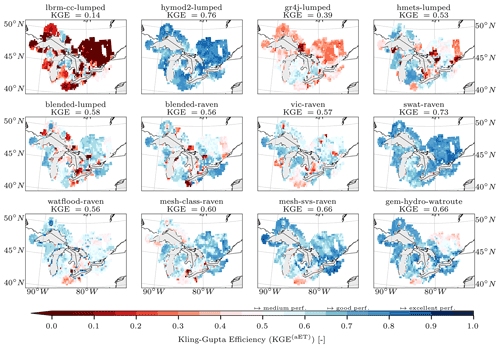

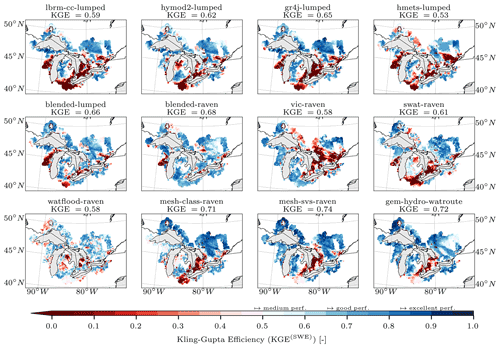

In the evaluation phase (Sect. 2.6), the simulated additional variables were compared with their reference counterparts. The reference datasets chosen for evaluation are gridded and on a daily timescale. Model evaluation for the additional variables is performed using the following two strategies. The first comparison is performed basin-wise, meaning that reference datasets and simulations are both aggregated to basin level; e.g., gridded evapotranspiration is aggregated to the area-weighted mean evapotranspiration of all grid cells that contribute to a basin domain. This results in 212 observed time series per variable and 212 simulated time series per model and variable. The corresponding basin-wise observed and simulated time series are then compared using KGE (Eq. 1) for evapotranspiration and snow water equivalent and the Pearson correlation coefficient (Eq. 4) for surface soil moisture. The second comparison strategy is to compare all models in a grid-cell-wise manner, which enables an evaluation of how well distributed models simulate spatial patterns within basins. In this approach, all model outputs are regridded to the grid of the reference datasets (i.e., the 0.25° regular grid for GLEAM's evapotranspiration and surface soil moisture and the 0.1° regular grid for ERA5-Land's snow water equivalent). Regridding here means computing the area-weighted mean of the model outputs contributing to each grid cell of the reference datasets; no higher-order interpolation was applied. The reference (gridded) and simulated (regridded) time series are then compared for each grid cell, leading to a map of model performance per variable (i.e., KGE(x,y)). Again, the KGE is used for evapotranspiration and snow water equivalent and the Pearson correlation coefficient for surface soil moisture.

Note that the outputs of lumped models and of models based on a subbasin discretization are also regridded to the reference grids. In those cases, each grid cell is overlaid with the (sub)basin geometries, and the area-weighted contribution of the (sub)basin-wise outputs to the grid cell is determined.
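Both the basin-wise aggregation and the regridding described above reduce to an area-weighted mean once the overlap areas between source units (grid cells or subbasins) and the target unit (basin or reference grid cell) are known. The sketch below takes these overlap areas as given; in practice they would come from a GIS overlay, which is not shown. All names and numbers are hypothetical.

```python
def area_weighted_mean(values, overlap_areas):
    """Area-weighted mean of `values` for one time step.

    `overlap_areas[i]` is the area that source unit i shares with the
    target unit (basin or reference grid cell).
    """
    total_area = sum(overlap_areas)
    if total_area == 0:
        raise ValueError("target unit does not overlap any source unit")
    return sum(v * a for v, a in zip(values, overlap_areas)) / total_area


# Basin-wise: aggregate three reference grid cells (AET in mm d-1) to a basin
aet_cells = [2.0, 3.0, 4.0]
cell_area_in_basin_km2 = [100.0, 50.0, 50.0]
basin_aet = area_weighted_mean(aet_cells, cell_area_in_basin_km2)

# Grid-cell-wise: regrid the outputs of two subbasins onto one reference cell
subbasin_aet = [1.0, 3.0]
subbasin_area_in_cell_km2 = [300.0, 100.0]
cell_aet = area_weighted_mean(subbasin_aet, subbasin_area_in_cell_km2)

print(basin_aet, cell_aet)
```

For a lumped model, a reference grid cell lying entirely within one basin simply receives that basin's value, since only one source unit contributes.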

Basin-wise comparisons match a lumped modeling perspective, while grid-cell-wise comparisons are a better approach for distributed models. The authors are not aware of past studies including grid-cell-wise comparisons across multiple models. This may be due to regridding being a tedious task – especially considering a wide range of model internal spatial discretizations. The regridding was performed by the project leads to ensure a consistent approach across all models. The team members provided the project leads with their model outputs using the native model grid in a standardized NetCDF format that the group had agreed on.

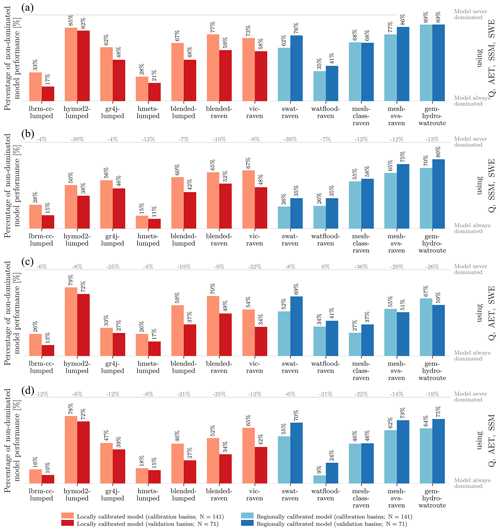

2.9 Multi-objective multi-variable model analysis

Besides analyzing model performances individually for the variables of streamflow, actual evapotranspiration (AET), surface soil moisture (SSM), and snow water equivalent (SWE), a multi-objective analysis considering the performance of all variables at the same time was performed. Considering that performance metrics for these four variables are available, each of these can be considered an objective, and modelers would like to see all objectives optimized. From a multi-objective perspective, model A can only be identified as truly superior to model B when it is superior in at least one objective and at least equal to model B in all other N−1 objectives. In this case, model A is said to dominate model B, and model B is dominated by model A. When multiple models are under consideration, model A is dominated when there is at least one other model that dominates model A; otherwise model A is non-dominated (i.e., no model is objectively superior to model A). The set of non-dominated models forms the so-called Pareto front, which is of particular interest as it defines the set of models for which no objective decision can be made about which model is better than the others. Theoretically, any number between 1 and M models can form the Pareto front. A visual depiction of Pareto fronts in a 2D example can be found in Sect. S2 and Fig. S2 in the Supplement.
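The dominance relation and the resulting Pareto front can be sketched as follows. This is a minimal illustration assuming that larger metric values are better for every objective (as is the case for KGE and KGEr); the model names and scores are made up.

```python
def dominates(a, b):
    """True if score vector `a` dominates `b`: at least equal in every
    objective and strictly better in at least one (larger is better)."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(scores):
    """Return the sorted names of all non-dominated models.

    `scores` maps a model name to its tuple of objective values.
    """
    return sorted(
        name
        for name, vec in scores.items()
        if not any(dominates(other, vec) for o, other in scores.items() if o != name)
    )


# Hypothetical scores for one basin, ordered as (Q, AET, SSM, SWE)
scores = {
    "model_A": (0.80, 0.60, 0.70, 0.50),
    "model_B": (0.70, 0.60, 0.65, 0.50),  # never better than model_A
    "model_C": (0.60, 0.75, 0.60, 0.55),  # better than model_A for AET and SWE
}
print(pareto_front(scores))
```

Here model_B is dominated by model_A, whereas model_A and model_C trade off against each other, so both remain on the front.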

The Pareto analysis, i.e., computing the set of models on the Pareto front, is carried out for each of the 212 basins. The N=4 objectives are KGE of basin-wise AET and SWE, KGEr of SSM derived for the entire period from 2001 to 2017, and the basin-wise KGE regarding streamflow derived for the validation period from 2011 to 2017. The validation period was chosen for streamflow to allow for a fair comparison with the evaluation variables, as those have never been used for model training. Including the calibration period (2001 to 2010) for streamflow would have implicitly favored models that are easier to calibrate.

In this analysis, the score for each model is defined by the number of times that a model is part of the Pareto front (i.e., not dominated by another model). A perfect score is achieved if a model is a member of the non-dominated set of models in 212 of the 212 Pareto analyses (100 %); the lower limit of this score is zero (0 %).

In order to shed some light on the reasons for the high/low scores of individual models, the Pareto analysis was repeated with only N=3 metrics at a time. Streamflow as a primary hydrologic response variable was included each time, while one of the three evaluation variables was left out. This results in three additional scores for each model besides the score that is derived using all N=4 variables.
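The scoring across basins and the repeated analysis with N=3 objectives can be sketched as below. The example uses two hypothetical basins instead of 212, made-up metric values, and assumes larger values are better for all objectives; the function and variable names are illustrative only.

```python
OBJECTIVES = ("Q", "AET", "SSM", "SWE")


def dominates(a, b):
    """At least equal in all objectives and strictly better in one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def front(scores):
    """Set of non-dominated model names for one basin."""
    return {
        m for m, v in scores.items()
        if not any(dominates(w, v) for n, w in scores.items() if n != m)
    }


def pareto_scores(basins, keep=OBJECTIVES):
    """Fraction of basins in which each model is on the Pareto front,
    using only the objectives listed in `keep`."""
    idx = [OBJECTIVES.index(k) for k in keep]
    counts = {}
    for scores in basins:
        reduced = {m: tuple(v[i] for i in idx) for m, v in scores.items()}
        for m in reduced:
            counts.setdefault(m, 0)
        for m in front(reduced):
            counts[m] += 1
    return {m: c / len(basins) for m, c in counts.items()}


# Two hypothetical basins; tuples are ordered as in OBJECTIVES
basins = [
    {"model_A": (0.80, 0.60, 0.70, 0.50),
     "model_B": (0.70, 0.60, 0.65, 0.50),
     "model_C": (0.60, 0.75, 0.60, 0.55)},
    {"model_A": (0.50, 0.40, 0.60, 0.30),
     "model_B": (0.60, 0.50, 0.70, 0.40),
     "model_C": (0.55, 0.45, 0.65, 0.90)},
]

print("N=4:", pareto_scores(basins))
# Streamflow is always kept; one evaluation variable is left out at a time
for left_out in ("AET", "SSM", "SWE"):
    keep = tuple(o for o in OBJECTIVES if o != left_out)
    print("without", left_out, pareto_scores(basins, keep))
```

In this made-up example, model_C is on the front in both basins when all four objectives are used but only in one basin once SWE is left out, which indicates that its high score is partly driven by its SWE performance.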

2.10 Interactive website

During the 2 years of this project, an interactive website was developed as a tool to explore study results and data beyond what can be shown in individual static plots. It is, for example, challenging to show the 212 times 13 hydrographs for the calibration and validation periods or to compare individual events at a specific location between a subset of models.

The website (Mai, 2022) hosts all results for basin-wise model outputs of streamflow, AET, SSM, and SWE. The basin-specific time series can be displayed and a specific event analyzed by magnifying the time series plots.

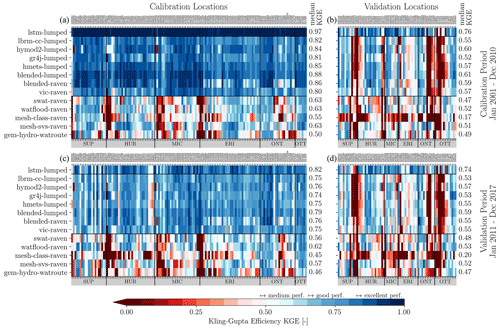

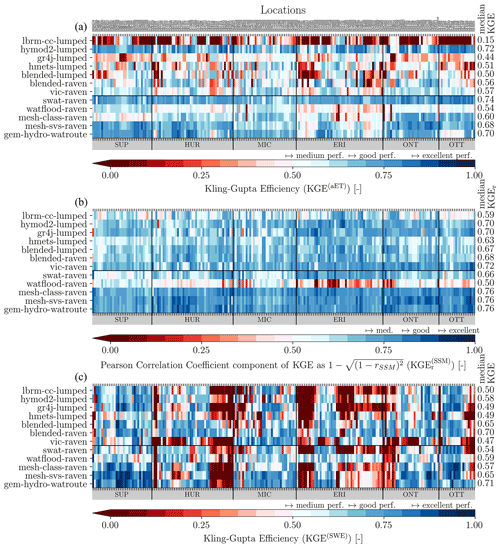

Additional basin characteristics, such as mean precipitation or temperature and slope or basin elevation, can be shown through the dropdown menu to color specific markers on the website. The user also has the option to choose to rank the selected models in this dropdown menu.