the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Technical Note: Flow velocity and discharge measurement in rivers using terrestrial and unmanned-aerial-vehicle imagery

Anette Eltner

Hannes Sardemann

Jens Grundmann

An automatic workflow to measure surface flow velocities in rivers is introduced, including a Python tool. The method is based on particle-tracking velocimetry (PTV) and comprises an automatic definition of the search area for particles to track. Tracking is performed in the original images. Only the final tracks are geo-referenced, intersecting the image observations with water surface in object space. Detected particles and corresponding feature tracks are filtered considering particle and flow characteristics to mitigate the impact of sun glare and outliers. The method can be applied to different perspectives, including terrestrial and aerial (i.e. unmanned-aerial-vehicle; UAV) imagery. To account for camera movements images can be co-registered in an automatic approach. In addition to velocity estimates, discharge is calculated using the surface velocities and wetted cross section derived from surface models computed with structure-from-motion (SfM) and multi-media photogrammetry. The workflow is tested at two river reaches (paved and natural) in Germany. Reference data are provided by acoustic Doppler current profiler (ADCP) measurements. At the paved river reach, the highest deviations of flow velocity and discharge reach 4 % and 5 %, respectively. At the natural river highest deviations are larger (up to 31 %) due to the irregular cross-section shapes hindering the accurate contrasting of ADCP- and image-based results. The provided tool enables the measurement of surface flow velocities independently of the perspective from which images are acquired. With the contactless measurement, spatially distributed velocity fields can be estimated and river discharge in previously ungauged and unmeasured regions can be calculated, solely requiring some scaling information.

- Article

(13026 KB) - Full-text XML

-

Supplement

(131551 KB) - BibTeX

- EndNote

Measuring the discharge of rivers is a major task in hydrometry because of its importance in many hydrological and geomorphological research questions, e.g. to understand the characteristics of catchments and their adaption to climatic changes. Different approaches exist to apply the velocity–area method to measure discharge relying on information about the flow velocity and the wetted river cross-section area. Established tools to retrieve flow velocities are the application of current meters, acoustic devices (i.e. acoustic Doppler current profilers; ADCPs) or surface velocity radar (Herschy, 2008; Merz, 2010; Morgenschweis, 2010; Gravelle, 2015; Welber et al., 2016). However, these velocity estimation methods are either labour intensive, require minimum water depths, need prolonged measurement periods or can endanger the operator during flood measurements.

A promising alternative is remote sensing tools utilising image-based approaches. Due to their flexibility (only a camera is needed) they are used frequently exploiting various sensors and platforms for data acquisition. For instance, RGB (red–green–blue) sensors have been used (e.g. Muste et al., 2008) as well as thermal cameras (e.g. Puleo et al., 2012). Ran et al. (2016) demonstrated the suitability of a low-cost Raspberry Pi camera to observe flash floods, and Le Coz et al. (2016) and Guillén et al. (2017) illustrated the usability of crowd-sourced imagery for post-flood analysis. Image-based setups allow for the assessment of temporally changing flow dynamics (Sidorchuk et al., 2008) due to the potential continuous recording of entire river reaches. Furthermore, small-scale investigations are enabled as shown by Legout et al. (2012), who measured the spatial distribution of surface runoff from depths of millimetres to centimetres, at a range where other methods are failing.

Various algorithms exist for surface flow velocity monitoring from image-based observations deploying tracking tools. Four tracking approaches are applied frequently in the field to monitor rivers. The first method is large-scale particle image velocimetry (LSPIV) originally introduced by Fujita et al. (1998). This approach uses the tracking of features at the water surface that are caused due to natural occurring floating particles or free surface deformations caused by ripples or waves, e.g. due to wind or turbulence (Muste et al., 2008). In general, the area of interest (i.e. the water surface) is divided in sub-regions, and these sub-regions are used as templates. In the subsequent images, the corresponding areas are searched for using correlation techniques.

Fujita et al. (2007) advanced the LSPIV approach by an algorithm called space–time image velocimetry (STIV). STIV performs faster because tracking is performed in 1D instead of 2D. Profiles are extracted along the main flow direction to subsequently draw particle movements along the time axis (i.e. change along the profiles within succeeding frames), leading to a space–time image. The resulting angle of the pattern within that image resolves into the flow velocity.

The third possibility is the usage of optical flow algorithms developed in the computer vision community. For instance, the Lucas–Kanade (Lucas and Kanade, 1981) operation has been utilised to measure surface velocities of large floods or small rivers (Perks et al., 2016 or Lin et al., 2019, respectively). The method aims to minimise greyscale value differences between the template and search area adapting the parameters of an affine transformation within an optimisation procedure. Finally, particle-tracking velocimetry (PTV) is a tracking option that uses correlation techniques as in LSPIV. However, instead of using entire sub-regions as templates, single particles are detected first and then searched for in the subsequent images.

LSPIV is the most widely used method and can be considered as matured (Muste et al., 2011). Amongst other things, it enabled the measurement of the hysteresis phenomena during flood events (Tsubaki et al., 2011; Muste et al., 2011). However, LSPIV mostly underestimates velocities, which is revealed in more detail by Tauro et al. (2017), who prefer PTV instead. In contrast to LSPIV PTV does not assume similar flow conditions for the entire search area, and it is not influenced by surface frictional resistance (Lewis and Rhoads, 2015) or standing waves (Tsubaki et al., 2011).

Besides surface flow velocity another parameter has to be considered to derive discharge measurements from image-based tracking approaches. The depth averaged flow velocity, used in the velocity–area method, does not necessarily correspond to the surface flow velocity, which is amongst other reasons due to the influence of riverbed roughness. Therefore, a so-called velocity coefficient has to be used to adjust the surface velocities (Creutin et al., 2003; Le Coz et al., 2010). Usually, the deeper the flow is, the higher the coefficient is assumed to be (Le Coz et al., 2010). The coefficient can vary with different river cross sections (Le Coz et al., 2010), and it can change within the same cross section due to varying water depths, which is likely for irregular profiles (Gunawan et al., 2012). Muste et al. (2008) state that the coefficient mostly ranges between 0.79 to 0.93, but values as low as 0.55 have been measured (Genç et al., 2015). Considering the correct velocity coefficient is important because it has a high impact on the discharge estimation error in remote sensing approaches (Dramais et al., 2011).

When flow velocities and the velocity coefficient are known, the area of the river cross section is needed to calculate the discharge with the velocity–area method (e.g. Hauet et al., 2008). Different tools exist for contactless river area measurements of a cross section. Muste et al. (2014) show that it is possible to use velocity pattern measured with LSPIV to retrieve flow depth in shallow-flow conditions. Another approach is the utilisation of ground-penetrating radar as illustrated for larger rivers by Costa et al. (2000). An additional increasingly used method to retrieve the topographic (and thus cross-section) information of the river reach is the usage of structure-from-motion (SfM) photogrammetry (Eltner et al., 2016). For instance, Ran et al. (2016) capture stereo images to reconstruct the 3D information of a river reach from overlapping images during low-flow conditions. However, if water is present during data acquisition and the riverbed is still visible, the underwater measurements have to be corrected for refraction impacts (Mulsow et al., 2018) or else heights of points below the water surface will be underestimated. Woodget et al. (2015) introduce a workflow to account for refraction using a constant correction value for the case of Nadir viewing image collection. Dietrich (2017) extends this correction procedure for the case of oblique imagery. Detert et al. (2017) were the first to perform fully contactless, image-based discharge estimations using refraction corrected river cross sections (adapting Woodget et al., 2015) and surface flow velocities, all measured from unmanned-aerial-vehicle (UAV) imagery. However, the authors relied on a seeded flow to apply LSPIV.

Image-based tracking approaches can be applied to imagery captured terrestrially as well as from aerial platforms. In the case of aerial imagery, the utilisation of UAVs for data acquisition is increasing. The advantage of drones is their flexibility and allowance to capture runoff patterns during high-flow conditions (e.g. Tauro et al., 2016; Perks et al., 2016; Detert et al., 2017; Koutalakis et al., 2019), even enabling real-time data processing (Thumser et al., 2017). However, a challenge to overcome is the correction of camera movements during the UAV flight. Although camera mounts are commonly stabilised, the remaining motion needs to be accounted for. Tauro et al. (2016) subtract velocities measured in stable areas outside the river from velocities tracked in the river. Another possibility is the usage of co-registration. Thereby, either features (e.g. scale invariant feature transform – SIFT – features; Lowe, 2004) are searched for in stable areas (Fujita et al., 2015; Blois et al., 2016) or ground control points (GCPs) are detected (Le Boursicaud et al., 2016). Subsequently, these image points are matched across the images. Afterwards, this information is used to apply a perspective transformation to each image to fit them to a reference image. However, so far stable areas are still masked manually.

In the case of terrestrial data acquisition, the conversion of pixel measurements to metric velocity values is more challenging compared to UAV data due to a stronger deviation of the perspective from an orthogonal projection, which leads to decreasing accuracies with increasing distance to the sensor. Therefore, Kim et al. (2008) suggest to avoid camera setups with tilting angles larger than 10∘. Most approaches ortho-rectify the images prior to tracking to allow for a correct scaling of the image tracks. However, performing the tracking in the original image would be favoured to minimise interpolation errors, especially for oblique camera setups, and to solely transform the tracked image point coordinates into object space (Stumpf et al., 2016).

Several software tools already exist to perform image-based velocimetry (e.g. PTVlab from Brevis et al., 2011; PIVlab from Thielicke and Stamhuis 2014; Fudaa-LSPIV at https://forge.irstea.fr/projects/fudaa-lspiv (last access: 24 March 2020); KU-STIV developed by Fujita; Rectification of Image Velocity Results – RIVeR – from Patalano et al., 2017). These tools cover different processing steps and tracking options to retrieve surface flow velocities and discharge. In this study, we combine the entire workflow from video, either captured with a UAV or from a terrestrial camera, to the velocity of river reaches, considering image stabilisation, automatic feature search area extraction, PTV, track filtering and metric velocity retrieval via forward ray intersection. An automatic flow velocity measurement tool (FlowVelo tool) for image velocimetry is presented and provided to the public domain to overcome existing gaps discussed before. It is independent from the data acquisition scheme and relies on PTV. Camera movements are accounted for in a fully automatic approach if a sufficient amount of shore area is visible. Furthermore, the search area for features to track, i.e. the river area, is extracted automatically solely requiring water level information and a 3D surface model of the river reach. The 3D surface model is calculated from image data with SfM photogrammetry additionally considering multi-media photogrammetry to retrieve both topography and bathymetry. In order to improve tracking results, detected features and velocity tracks are filtered with different methods. Finally, we estimate discharge from surface flow velocities and cross-sectional areas. The FlowVelo tool and the whole workflow are investigated for two river reaches, paved and natural, at which velocities and discharges are compared to ADCP references.

In this study, the FlowVelo tool is introduced that allows for the measurement of flow velocity from videos independently of the acquisition platform, i.e. either aerial or terrestrial. Different parameter options for feature detection and tracking as well as track filtering are explained. Two experimental study sites have been chosen to evaluate the performance of video-based flow velocity estimation using camera frames acquired from different perspectives. First the experimental study sites are introduced, and afterwards the tool is explained.

2.1 Areas of interest

The experimental study sites are short river reaches in Saxony, Germany (Fig. 1). One studied river reach is situated at the Wesenitz. This river originates in the Lausitzer highlands, has a catchment size of about 280 km2 and exhibits a river length of 83 km. The area of interest is located at the river gauge station Elbersdorf, which is operated by the Staatliche Betriebsgesellschaft für Umwelt und Landwirtschaft, the Saxon state company for the environment and agriculture. Here, annual average water level and discharge for the hydrological year 2017 are 48 cm and 2.4 m3 s−1, respectively. Field campaigns were conducted on 31 March and on 4 April 2017. During the campaigns, the water level amounted 51 cm (discharge 2.7 m3 s−1). The investigated river section at the Wesenitz is paved but influenced by local sand banks at the river bottom. During the data acquisition the river had a width of about 10 m.

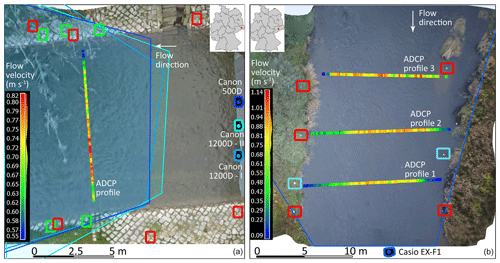

Figure 1Areas of interest at the Wesenitz (a) and the Freiberger Mulde (b) displayed with UAV orthophotos calculated from video frames. Surface flow velocities measured with an ADCP and the corresponding locations of the measurement cross sections within the river are illustrated. Ground control points are used to reference the image data at both river reaches. Red squares highlight GCPs used for terrestrial and UAV data at the Freiberger Mulde and UAV data at the Wesenitz. Green squares show the location of GCPs used for terrestrial imagery at the Wesenitz. Check points (blue squares) are used to assess the accuracy of the 3D reconstruction from video frames at the Freiberger Mulde. Camera locations of the terrestrial image sequence acquisition are illustrated as pictograms, and corresponding image extent areas are shown (displayed area of interests in RGB correspond to the aerial image extents).

The other river is the Freiberger Mulde, which originates in the Ore Mountains, has a catchment size of about 2980 km2 and displays a river length of 124 km. The area of interest is located close the gauge Nossen. Average discharge and water level for the hydrological year 2016 are 5.6 m3 s−1 and 65 cm, respectively. The gauge station is located 1 km upstream of the studied river reach. The field campaign was conducted on 26 October 2016. During this day discharge and water level were 5.7 m3 s−1 and 68 cm. The approximated river width was 15 m. The chosen region of interest at the Freiberger Mulde is a natural river section with non-uniform flow conditions.

2.2 Data acquisition

Different data were collected during the field campaigns at both river sections. Amongst other things, ADCP measurements were performed as a flow velocity reference; GCPs were defined to geo-reference the video data; and UAV and terrestrial imagery were acquired to perform image-based flow velocity estimation.

2.2.1 ADCP measurements

For the ADCP measurements the moving boat approach with StreamPro from RDI is used. Velocity profiles were measured with a blanking range of 14 cm near the water surface. Data were processed using the AGILA software from the German Federal Institute of Hydrology (BfG). Measurements along the boat track were projected onto a reference cross-sectional area. Afterwards surface flow velocities were extrapolated to allow for a comparison to the image-based values. For the extrapolation, power functions were fitted to the measured vertical velocity profile for each individual ADCP ensemble using the software AGILA (for more detail see Adler, 1993, and Morgenschweis, 2010). Then, velocities at the water surface were calculated with these functions. Thus, all ADCP measurements of the profile were considered to extrapolate surface velocities.

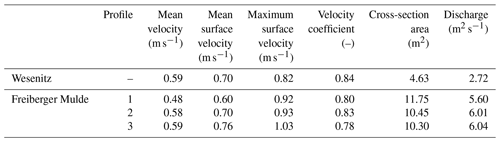

At the Wesenitz ADCP measurements were performed at one cross section in eight repetitions (Fig. 1a). Average water surface velocity was about 0.7 m s−1, and the resulting discharge amounted to 2.7 m3 s−1 (Table 1). At the Freiberger Mulde three cross sections were chosen (Fig. 1b) to acquire data that allow for a spatially distributed assessment of the image-based data. Average river surface velocities ranged between 0.60 and 0.76 m s−1 (Table 1).

The spatial variation of flow velocities is larger at the Freiberger Mulde, where measurements were performed in a natural river reach, which is in contrast to the flow velocity range at the Wesenitz, where data were captured at a standardised gauge station. Thus, only one profile was measured at the Wesenitz. The discharge at the Freiberger Mulde is 5.88 m3 s−1 on average but reveals a standard deviation of 0.25 m3 s−1. The estimated discharge of the river reach therefore reveals a variation of about 4 %, which can be attributed to inconsistencies during data acquisition. A decrease of the velocity coefficient, which has been derived from the ADCP measurements, with decreasing water depth is observed. At the Freiberger Mulde, cross sections 1 and 3 (Table 1) have lower water depth compared to profile 2. Thus, the velocity coefficients are lower.

2.2.2 Image-based data

At both river reaches video sequences were acquired with terrestrial cameras and with a camera installed on the UAV AscTec Falcon 8. The airborne image data were captured at flying heights of about 20 and 30 m at the Wesenitz and Freiberger Mulde, respectively. Videos were captured with a frame rate of 25 frames per second (fps) and with a resolution of 1920×1080 pixels using the Sony NEX-5N camera with a fixed lens with a focal length of 16 mm. The ground sampling distance (GSD) is about 7 mm at the Wesenitz and about 9 mm at the Freiberger Mulde.

The terrestrial cameras were installed at bridges across the river (Fig. 1). At the Wesenitz three cameras were installed to evaluate the performance of different cameras (Fig. 1a). Two Canon EOS 1200D cameras and one Canon EOS 500D camera were set up. The 1200D cameras captured video sequences at 25 fps and with a resolution of 1920×1080 pixels. The 500D captured frames with a higher rate (30 fps) and smaller image resolution (1280×720 pixels). All three cameras were facing downstream. At the Freiberger Mulde the Casio EX-F1 camera, equipped with a zoom lens fixed to 7.5 mm, was used. Videos were captured at 30 fps and a resolution of 640×480 pixels. The camera was facing upstream.

The terrestrial cameras were calibrated for both rivers to allow for the correction of image distortion impacts. To estimate the interior geometry of the cameras, images of an in-house calibration field have been captured in a specific calibration pattern (Luhmann et al., 2014). These images, together with approximate coordinates of the calibration field and approximations of the interior camera orientations were used in a free-network bundle adjustment within AICON 3D Studio to calibrate each camera. More details regarding the workflow are given in Eltner and Schneider (2015).

2.3 High-resolution topography of the river reaches

Local 3D surface models describing the topography of the river reaches are necessary to scale the image measurements. Therefore, high-resolution topography data were acquired at both rivers using SfM photogrammetry (Eltner et al., 2016; James et al., 2019). SfM in combination with multi-view stereo (MVS) matching allows for the digital reconstruction of the topography from overlapping images and some GCPs. Thereby, homologous image points in overlapping images are detected and matched automatically. From these homologous points and some assumptions about the interior camera model, the position and orientation of each captured image (i.e. camera pose) can be calculated. With known network geometry, a dense point cloud can be computed, reconstructing the 3D information for almost each image pixel. The resulting 3D surface models are geo-referenced during the reconstruction or afterwards via GCPs.

At the Wesenitz the 3D surface model of the river reach was calculated from 85 terrestrially captured images with a Canon EOS 600D (20 mm fixe lens) and from 20 UAV images (Eltner et al., 2018). The SfM calculations were performed in Agisoft Metashape. At the Freiberger Mulde seven frames of the video sequence, which is also used for later PTV processing, were utilised to perform SfM photogrammetry to retrieve the corresponding 3D model of the river reach.

GCPs made of white circles on a black background were installed in order to reference the 3D data as well as the image-based velocity measurements. They were measured with a total station at the Freiberger Mulde and during the first campaign at the Wesenitz. During the second campaign at the Wesenitz GCPs were extracted from cobblestone corners (with sufficient contrast) at the gauge, which are visible in the terrestrial images used for the 3D model reconstruction. GCPs were measured in at least five images for sufficient redundancy and thus more reliable coordinate calculation.

The bathymetric information of the river reaches was retrieved using the same UAV data as for the topographic information above the water level. Refraction impacts are accounted for using by the tool provided by Dietrich (2017). Underwater points, camera poses and interior camera parameters as well as the water level need to be provided. The corrected point clouds can be noisy and were therefore filtered and smoothed in CloudCompare using a statistical outlier filter to detect isolated points and using a moving least-square filter. Eltner et al. (2018) revealed that accuracies at the centimetre scale can be reached using multi-media SfM at the Wesenitz river reach. Due to opaque water conditions at the Freiberger Mulde, imagery of a previous UAV flight (6 weeks earlier and with no flood events happening during that period) had to be used to reconstruct the underwater area.

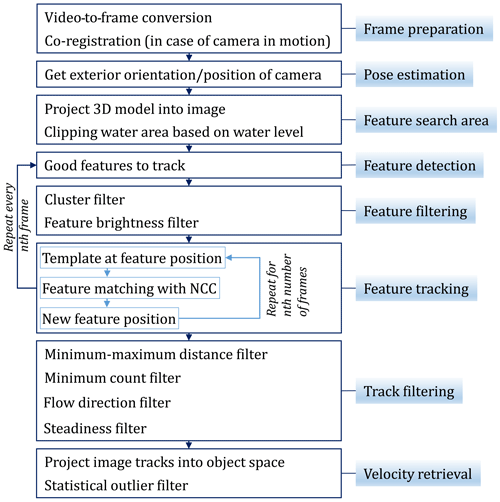

2.4 Surface flow velocity workflow – the FlowVelo tool

This section introduces the general approach to measure surface flow velocities from either terrestrial or airborne video sequences. Thereby, essential processing steps are described in more detail. The FlowVelo tool is realised in Python and using the OpenCV (Open Source Computer Vision Library) library (Bradski, 2000). Figure 2 illustrates the entire data processing workflow of the tool.

2.4.1 Frame preparation

Video sequences are converted into individual frames prior to the data processing. Afterwards, image co-registration is necessary if the camera is not stable during video capturing, as is the case for the UAV data. Each frame of the entire video sequence is co-registered to the first frame of the same sequence to correct camera movements and thus to enable all frames to capture the same scene. This processing step is preformed fully automatically. In each frame Harris corner features are detected (Harris and Stephens, 1988), which are then matched to the first frame of the sequence using SIFT (Lowe, 2004) or ORB descriptors (Rublee et al., 2011). The suitability of co-registration in different conditions and over longer periods of time has been illustrated in Eltner et al. (2018), who introduce a terrestrial camera gauge for water level measurements.

Harris features in the water region are detected as outliers due to their changing appearance between subsequent frames leading to matching failure. And if moving features in the water area still might be matched, they are latest filtered during the parameter estimation of the homography because these points will be considered as outliers during the model fitting with RANSAC (random sample consensus; Fischler and Bolles, 1981). Thus, only stable and reliable homologous image points outside the river are kept and used to calculate the homography parameters between the first frame and all subsequent frames. Finally, a perspective transformation is applied to ensure that all frames fit to the first image. It has to be mentioned that this approach is only working as long as enough stable areas are visible on both river shores.

In the FlowVelo tool five parameters can be set to adjust the co-registration of each individual scenery. The maximum number of key points defines how many features are maximally searched for in each frame. Larger numbers can increase the robustness and accuracy of matching but also the processing time. The number of good matches determines how many matched features between two frames are needed minimally to find the homography. Again, larger values increase the robustness, but they can also lead to a failure of processing if fewer feature matches are found than appointed here. Furthermore, it can be defined which feature descriptor is chosen for matching, if features are matched back and forth increasing the accuracy and processing time, and if image co-registration is performed to the first frame or in a series to each consequent frame of the sequence.

2.4.2 Finding features to track

A search area in the river region has to be defined to detect particles before tracking. This is due to the circumstance that most feature detectors look for regions with high contrast. Therefore, points of interest would be found on the land, where contrast is usually higher than on the water surface. Thus, in a first step the river area has to be masked in the images and defined as the search area for tracking before applying particle detection.

Feature search area and pose estimation

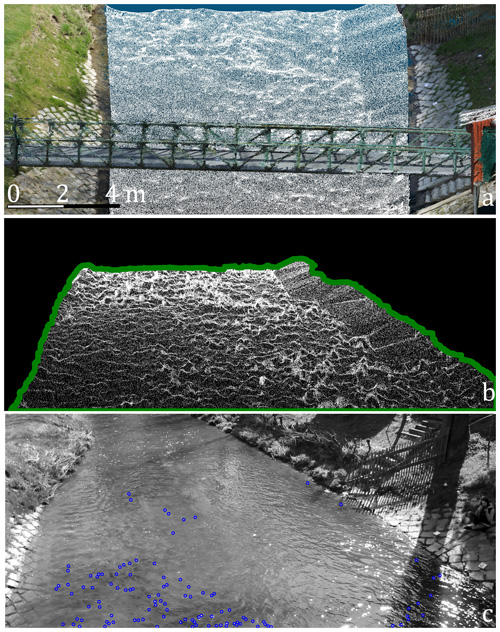

The feature search area is a region of interest that is defined as a function of the water level to mask the image. The water level and a 3D surface model of the river reach (Fig. 3a) observed by the camera have to be known to define this water area automatically. The 3D surface model is clipped with the water level value to solely keep the points below the water surface. Afterwards, these points are projected into image space (Fig. 3b). Therefore, information about the pose and the interior geometry of the camera is necessary. In the FlowVelo tool, information about the camera pose is either estimated with spatial resection considering the GCP coordinates in image and object space and the interior camera parameters (for more details see Eltner et al., 2018) or it can be simply stated if the pose has been defined by other measures.

Figure 3Defining the search area to extract particles to track. (a) 3D point cloud of the investigated river reach at the Wesenitz. Coloured points (colourised with RGB information according to their real-world object colour) are 3D points above the water surface reconstructed with SfM photogrammetry. White points are 3D points below the water surface reconstructed with SfM and corrected for refraction effects. (b) 3D point cloud below the water level projected into image space. Green line depicts contour line, which is used as search mask for feature detection. (c) Detected and filtered features considered for tracking (blue circles).

Next, the 3D point cloud of the observed river reach is projected into a 2D image (Fig. 3b). To fill gaps, potentially arising for 3D surface models with a low resolution, a morphological closing is performed. Finally, the contour of the underwater area is extracted to define the search mask for the individual frames. If several contours are detected, the largest contour is chosen. If a 3D surface model is not present for automatic feature search area detection, the area of interest for tracking can also be provided via a mask file.

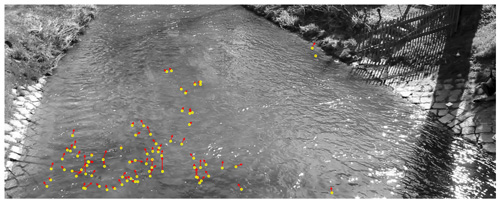

Feature detection and filtering

Particles are detected with the Shi–Tomasi feature (good feature to track; GFTT) detector (Shi and Tomasi, 1994). Thereby, features are detected similar to the Harris corner detector, but a different score is considered to decide for a valid feature (Fig. 3c). Many more feature detectors are possible. Tauro et al. (2018) test several methods and show that the GFTT detector performs well and also finds features in regions of poor contrast.

The elimination of particles, which are not suitable for tracking, is necessary. For instance, reflections of sunlight at waves showing high contrasts on the water surface need to be removed to avoid the erroneous tracking of fake particles (Lewis and Rhoads, 2015). Therefore, a nearest neighbour search is performed to find areas with strong clusters of particles. If there are too many features within a defined search radius, the particle will be excluded from further analysis. In addition, features are removed that reveal brightness values below a threshold, e.g. to avoid the inclusion of wave shadows as features.

2.4.3 Feature tracking

When features have been detected, they are tracked through subsequent frames (Fig. 4). This tracking is performed using normalised cross-correlation (NCC). Normalisation allows accounting for brightness and illumination differences between different frames. The positions of the detected features are chosen to define templates with a specific kernel size (mostly 10 pixels in this study; Supplement 1). In the next frame NCC is performed within a defined search area (mostly 15 pixels in this study; Supplement 1) to find the positions with highest correlation scores for each feature, potentially corresponding to the new positions on the water surface of the migrated particles.

To refine the matching, an additional sub-pixel accurate processing is performed. Thereby, template and matched search area of the same size are converted into the frequency domain to measure the phase shift between both, and afterwards the sub-pixel peak location is determined with a weighted centroid fit. The final matched locations define the new templates for tracking in the next frame. This tracking approach is performed for a specified number of frames. In this study, features are tracked for 20 frames, and new features are detected every 15th frame. It can be suitable to detect features more frequently than the number of frames they are tracked across because features can change their appearance, and new features can enter the area of view, although the already detected features are still tracked.

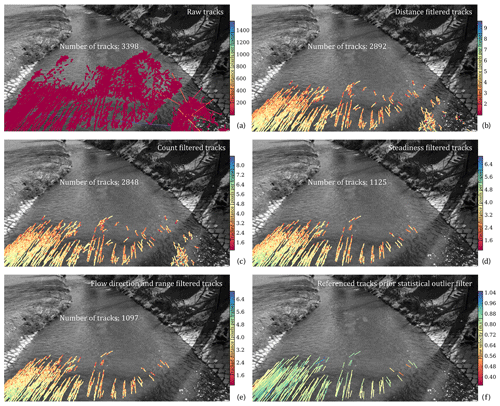

2.4.4 Track filtering

Figure 4 shows that false tracking results can still occur, e.g. tracks that significantly deviate from the main flow direction. This is amongst other reasons due to remaining speckle detected as features or due to tracking of features with a low contrast leading to ambiguous matching scores. Therefore, resulting velocity tracks need to be filtered. Tauro et al. (2018) remove false trajectories considering minimum track length and track orientation. In this study, we also make assumptions about the flow characteristics of the river (Fig. 5). We consider six parameters: minimum frame amount of a tracked feature, minimum and maximum tracking distances, flow steadiness, range of track directions, and deviation from the average flow direction. Each track has to fulfil these criteria to be considered as reliable velocity information. Thereby, each track is the combination of the individual sub-tracks from frame to frame, with feature detection performed in the first frame.

Figure 5Result of tracked features after filtering has been applied to the video sequence of the 500D camera (with a temporal length of 23 s). Sub-tracks are displayed, and the number of tracks refers to full tracks (combination of sub-tracks). (a) Raw tracks prior any filtering. (b) Filtered tracks after applying minimum and maximum distance thresholds. (c) Filtered tracks after applying a minimum count of sub-tracks over which features need to be tracked. (d) Filtered tracks after considering standard deviation of sub-track directions. (e) Filtered tracks after considering deviation from average flow direction and the range of orientation angles of sub-tracks. (f) Filtered tracks converted into metric values to receive flow velocities in metres per second.

The first criterion considers the minimum percentage of frames across which the features have to be traceable (here 65 %). The underlying assumption is that if the feature is only traceable across a few frames, then it is more likely not a well-defined flowing particle at the water surface but may for instance be a speckle occurrence due to sun glare. However, the minimum value can be set to 0 to avoid any constraints regarding flow velocities and camera frame rates.

The second and third filter criteria are the distances across which features were tracked, comprising thresholds for minimum and maximum distances. The distance thresholds can be roughly approximated when the image scale and the range of expected river flow velocity are known. In this study, the minimum and maximum distance parameters are set to 0.1 and 10 pixels, respectively.

The fourth criterion considers the directional flow behaviour of the feature with a steadiness parameter. Therefore, directions of sub-tracks (from frame to frame) are analysed for each track. Tracks are excluded when the standard deviation is above a defined threshold (30∘ in this study). The idea is that river observations are performed during nearly uniform flow conditions. Thus, high frequencies of changes in flow directions within a track indicate measurement errors and should be filtered. In addition to this steadiness parameter, the range of all sub-track directions is also considered as a measure of the flow behaviour. If the range is above a defined threshold, the track will be excluded (here 120∘).

For the last criterion the main flow direction of the river is examined. The average direction of all tracks is calculated, and if the direction of the individual tracks are larger or below a buffer threshold (here 30∘), they are rejected from further processing. The buffer value has to be defined considering the general variability of the river surface flow pattern. The lower the parameter is chosen to be, the more a uniform flow is assumed to be. It has to be noted that the directional filter has a limited applicability in more complex flow conditions, e.g. turbulent, non-uniform rivers. In such situation, local filters should be preferred over these global values.

2.4.5 Velocity retrieval

In the last processing stage, measured distances are transformed from pixel values to metric units to receive flow velocities in metres per second. With a known camera pose and interior camera geometry, image measurements can be projected into object space. This leads to a 3D representation of the light ray emerging from the image plane and proceeding through the camera's projection centre. 3D object coordinates of an image measurement can be calculated by intersecting its ray with a 3D surface model of the river. In this case the water surface, assumed to be planar at the water level, defines the location of intersection. The starting and ending points of each track are intersected with the water plane to retrieve real-world coordinates. From the distance between start and end and considering the camera's frame rate as well as the number of tracked frames, metric flow velocities are retrieved. Finally, the metric velocity tracks are filtered once more with a statistical outlier filter to remove remaining outliers (Fig. 6). The threshold is defined as the sum of the average velocity with a multiple of its standard deviation (e.g. Thielicke and Stamhuis, 2014). The lower the multiple is chosen, the more features will be filtered, and only tracks will be kept which have values close to the average velocity. In this study, the parameter was set to 1.5. This processing step is more important for challenging tracking situations.

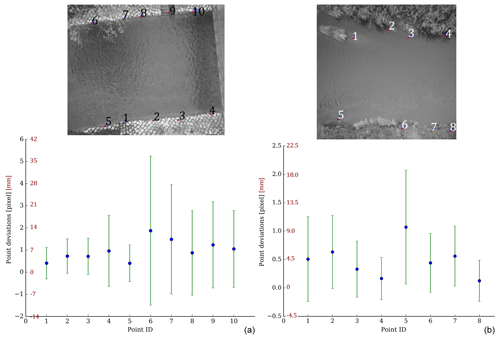

Figure 6Accuracy of co-registration of video frames to single master frame displayed in image space (black axis) and the corresponding accuracy in object space (red axis) at the river Wesenitz (a) and Freiberger Mulde (b). Note that the images are only extracts from the original (bigger) images.

Regarding tracking reliability, it should be noted that in the case of terrestrial cameras with an oblique view onto the river velocity, measurements are preferred closer to the sensor. Particles move across a larger number of pixels in close range to the camera than in further distances, e.g. an erroneous measurement of 1 pixel close to the camera might result in a measurement error of 1 cm, whereas at a further distance it can correspond to 1 m. Furthermore, tracking accuracy decreases significantly in far ranges due to increasing glancing ray intersections with the water surface.

2.5 Discharge estimation

The bathymetric information as well as the flow velocities are needed to calculate the discharge. Thereby, sole UAV data can be used as shown by Detert et al. (2017). In this study, we cut river cross sections from the reconstructed bathymetry and topography at the approximate locations of the ADCP measurements. Afterwards, we extract the water level information by manually detecting the water line in at least three overlapping images and spatially intersecting these point measurements in the object space.

The surface flow velocity values are averaged and multiplied with the velocity coefficients estimated from the ADCP measurements to account for depth-averaged velocities (Table 1). This approach is suitable at the Wesenitz. But at the Freiberger Mulde the method is restricted due to the irregular river cross sections limiting the application of a constant velocity coefficient. Finally, discharge is estimated by multiplying the cross-section area with the depth-averaged velocity.

In this section, results of the accuracies of the image processing are displayed; tracked flow velocities are evaluated; and discharge estimations are analysed.

3.1 Accuracy assessment of camera pose estimation and image co-registration

To enable an accurate measurement of flow velocities, it is necessary to consider how well the camera pose has been estimated. Furthermore, for cameras in motion the accuracy of frame co-registration has to be evaluated as well, to ensure that tracked movements of the particles indeed correspond to river flow instead of camera movements.

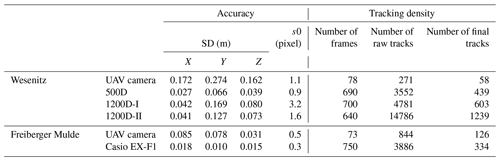

The accuracy of camera pose estimation can be estimated because more than three GCPs are available. In general, the camera pose will be calculated more accurately if GCPs are distributed around the area of interest in the object space and if images capture them in such a way that they cover the entire image extent because it allows for a stable image-to-object geometry. Furthermore, for the highest accuracy demands, GCPs need to be measured with high accuracy in object space and ideally with sub-pixel accuracy in image space. At both river reaches, accuracies are better for the terrestrial cameras (Table 2), which is due to a higher GSD as cameras are significantly closer to the area of interest compared to the UAV cameras. At the Wesenitz, another reason for the larger deviations is the circumstance that well-marked, artificial GCPs were used for the terrestrial images, whereas GCPs were extracted from the 3D surface models to estimate the UAV camera pose leading to lower point coordinate accuracies.

Table 2Accuracy of camera pose estimation and density of tracking results. s0 corresponds to the average reprojection error after the adjusted spatial resection.

Small template regions (10 pixels in size) in stable areas have been chosen (Fig. 6) to estimate the accuracy of frame co-registration. At the Freiberger Mulde only GCPs could be used as templates because the remaining area of interest is covered by vegetation that changes frequently. At the Wesenitz cobble stone corners close to the river surface are chosen because it is important to see how well co-registration performs close to the water body for which velocities are estimated. Each extracted reference location is tracked through the frame sequence via NCC. In case of a perfect alignment, the templates should remain at the same image location throughout the sequence. In this study, at the Freiberger Mulde average deviation between tracked frames to the first frame amounts 0.5±0.6 pixels for all templates, which corresponds to a co-registration accuracy of 4.3±5.2 mm. At the Wesenitz, co-registration reveals an accuracy of 1.0±1.6 pixels (6.8±11.3 mm). The lower image coverage of the right shore at the Wesenitz leads to a lower quality of the frame co-registration when compared to the Freiberger Mulde reach because features for frame matching are only kept outside the water area as the appearance of the river surface changes too quickly. Therefore, higher deviations are measured at the right shore than at the left shore. Considering only the matched targets at the left river side reveals an error range similar to the Freiberger Mulde.

3.2 Flow velocity measurements at the Wesenitz

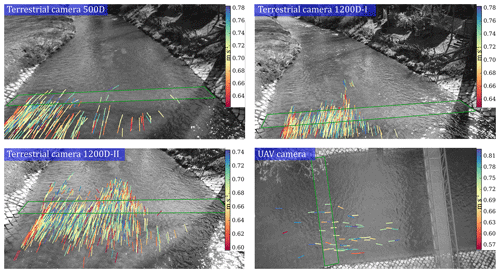

The tracking results and retrieved flow velocities show a diverse picture for the different cameras. For instance, the final number of flow velocity tracks is different for each device (Table 2). The lowest number of tracks is measured for the UAV camera. However, this camera solely captured a very short video sequence (about 3 s) that could be used for tracking. Furthermore, the GSDs of the UAV data are much lower than the GSD of the terrestrial cameras due to a larger sensor-to-object distance. The terrestrial cameras reveal a significantly denser field of flow velocity tracks (Fig. 7). The terrestrial cameras captured videos of a length of about 0.5 min. Although video lengths of the terrestrial cameras are similar, the number of final velocity tracks varies. The camera closest to the water surface and with the least oblique view (1200D-II) reveals the highest track number. The 1200D-I camera reveals a lower number of velocity measurements, although frame resolution and focal length are the same, and video length is even longer. The third camera (500D) depicts lowest track number, which is mainly due to a lower frame resolution.

Figure 7Flow velocities estimated at the Wesenitz using video frames captured with three different terrestrial cameras and a camera on a UAV platform. Final tracks after the statistical outlier filter (Fig. 5f) are displayed. Green border indicates area, in which image-based measurements are compared to ADCP velocities.

Besides considerations of the camera geometry, track filtering is another very important aspect to retrieve reliable velocity measurements. The filtered track number is about a magnitude lower than the raw track amount for the terrestrial cameras (Table 2), highlighting the importance of video sequences with sufficient temporal duration. Thus, tracking should be performed as long as possible to increase the robustness of velocity filtering.

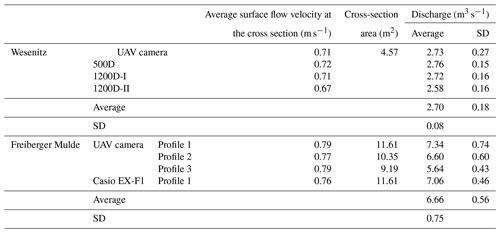

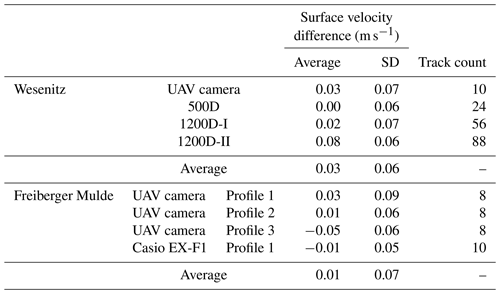

Comparing the range of flow velocity values between the different terrestrial cameras and the UAV camera reveals a good fit (Fig. 7), which also coincides with the ADCP reference (Table 3). Furthermore, regions of faster and slower velocities are revealed in the terrestrial image data that also show within the acoustic data. The average deviation of all cameras to the ADCP measurements are calculated for video-based track values that are within a maximal perpendicular distance to the ADCP profile of 1 m. The difference amounts to 0.03±0.06 m s−1. However, it is difficult to perform an exact comparison to the ADCP measurements because the precise location of the ADCP cross section in the local coordinate system of the river reach is not known, as the ADCP boat was not equipped with any positioning tool, and its movement across the water surface was neither tracked nor synchronised. Therefore, accuracy assessment of the spatial velocity pattern is limited. Nevertheless, we were able to identify the start and end points of the cross sections at the shore in the imagery. Therefore, we could approximately estimate the locations of the cross sections in the decimetre range, which allows for velocity comparison if the surface flow velocity pattern does not become too variable within the shortest distances. This has to be kept in mind when assessing the velocity differences, especially at the Freiberger Mulde.

Average surface flow velocities from the image-based measurements are higher or similar to the (extrapolated) ADCP retrieved surface velocity of 0.7 m s−1, except for camera 1200D-II, which depicts lower values, also compared to the other cameras (Table 4). A potential reason is the different coverage of the cross section with measured velocity values. 1200D-II reveals the highest velocity value density and covers a larger part of the cross section (Fig. 7). More regions with lower velocities are measured by the 1200D-II, whereas the other cameras feature less cross-section coverage, and more values are measured in areas of faster velocities.

Table 3Deviation between ADCP measurements and video-based flow velocities. Differences are calculated for tracks within a range of 1 m and are closest to the ADCP measurements.

Interestingly, an impact of the missing camera calibration of the UAV images is not obvious. Lens distortion parameters were only modelled for the terrestrial cameras but were discarded for the UAV camera. The impact is assumed to be minimal because the camera distortion is usually especially large for cameras with very wide angles, which is not the case for the UAV camera. Furthermore, the distortion impact is more important when features are tracked for large distances in the image, which is also not the case in this study because features are mostly tracked between subsequent frames for only a few pixels.

3.3 Flow velocity measurements at the Freiberger Mulde

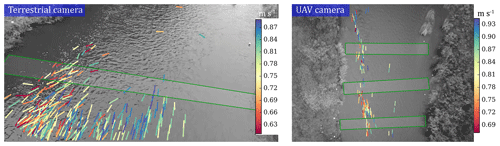

At the Freiberger Mulde a more diverse spatial velocity pattern becomes obvious (Fig. 8). Especially the UAV data reveal areas of increased and decreased velocities along the river reach. Velocity ranges coincide with the ADCP measurements. Average deviations of the closest tracks to the reference values (similar to the approach in Sect. 3.2) are on average m s−1 for the terrestrial and UAV camera and for all cross sections. However, velocities are either overestimated or underestimated at different profiles and for different cameras (Table 3). The flow velocities for the UAV data are lower at profile 3 compared to the reference. However, due to the strong changes of flow velocities within short distances, especially at cross section 3 (Fig. 1), a possible reason can be false mapping of ADCP values to image-based values. The assumption that velocity underestimation at that cross section is due to imprecise point-based velocity comparison is backed when comparing the average cross section UAV-retrieved surface velocity (Table 4) with the average ADCP velocity. In that case, the UAV data reveal larger values (0.79 versus 0.76 m s−1, respectively), confirming the observations at cross sections 1 and 2.

Figure 8Flow velocities estimated at the Freiberger Mulde using video frames captured with a terrestrial camera and a camera on a UAV platform. Green border indicates area, in which image-based measurements are compared to ADCP velocities.

The terrestrial camera depicts a lower spatial density of velocities compared to the terrestrial cameras at the Wesenitz (Table 2), although the video sequence has a comparable length. This is due to the significantly lower image resolution as well as the larger distance to the object. Therefore, fewer features are detectable. The average flow velocity at cross section 1 and the average of contrasted individual velocity tracks are smaller than the reference. However, error behaviour of the image-based data might be less favourable at cross section 1, where the comparison is made for image tracks measured at the far reach of the image. The sharp glancing angles at the water surface lead to higher uncertainties of the corresponding 3D coordinate.

The decision about how to set the parameters for tracking (e.g. patch size) and filtering (e.g. statistical threshold) remains challenging, especially in long-term applications when spatiotemporal flow conditions can change strongly (Hauet et al., 2008). Thus, in future studies intelligent decision approaches for corresponding parameters need to be developed, for instance where measurements are performed iteratively with changing parameters.

3.4 Camera-based discharge retrieval

Discharge estimations at the Wesenitz do not show large deviations between the cameras because velocity estimates showed low deviations, as well (Table 4). Solely the 1200D-II camera displays a lower discharge. The average discharge for all cameras amounts to 2.7 m3 s−1, which corresponds to the discharge measured by the ADCP. Deviations to the reference are below 4 %, highlighting the great potential of the UAV application to retrieve discharge estimates solely from image data in regular river cross sections. Standard deviations of the discharge estimations due to the consideration of the standard deviation of the surface flow velocities are small, ranging from 0.18 m3 s−1 (7 %) to 0.56 m3 s−1 (8 %) at the Wesenitz and Freiberger Mulde, respectively (Table 4).

At the Freiberger Mulde, discharge estimates do not fit as well to the reference measurements. Velocities are only observed in the main flow of the river, where flow velocities are higher. Deviations to the ADCP reference are larger for the terrestrial camera, whose measurements are only compared to profile 1, which shows a large range of flow velocity and depicts very low values outside the main flow (Fig. 1). Comparing single velocity values to nearby ADCP measurements, instead of comparing averaged cross-section information, reveals that the accuracies of image-based velocity measurements are indeed higher (Table 3). Neglecting the slower flow velocities in the shallower river region outside the main flow leads to overestimated discharge values for the irregularly shaped cross sections, which is in contrast to the regular cross section at the Wesenitz. In addition, using the average velocity coefficient is adverse, because the irregular profile shape indicates a changing coefficient (Kim et al., 2008).

Another important issue that needs to be noted is the circumstance that the image-based discharge estimation reveals a high variability that is sensitive to the defined wetted cross section extracted with the defined water level. For instance, at the Wesenitz already a 1 cm offset in the water level value causes a discharge difference of 0.08 m3 s−1 (3 %), and an offset of 3 cm causes a difference of 7 % (0.2 m3 s−1). Different studies already highlight that the correct water level is important for accurate discharge estimation due to the wetted cross-section area error but that it is less relevant for the accuracy of the flow velocities due to erroneous ortho-rectification (Dramais et al., 2011; Le Boursicaud et al., 2016; Leitão et al., 2018).

3.5 Limits and perspectives

In this study, a workflow for surface flow velocity and discharge measurements in rivers using terrestrial and UAV imagery was tested successfully. In general, three main processing steps are necessary, i.e. retrieving terrain information via SfM photogrammetry, estimating the flow velocity with PTV and eventually calculating the discharge with the information from both previous steps. However, some constraints need to be considered. The FlowVelo tool requires at least the video frames, the camera pose (either estimated within in the tool considering GCP information or provided externally), the water level and some estimates of the interior camera geometry (at least focal length and sensor size and resolution are needed). Furthermore, if the camera was not stable during the image acquisition, camera movements can be corrected automatically if sufficient shore areas are visible in the frames. With this information and pre-processing, scaled river surface velocities are retrievable fully automatically.

However, some characteristics of the tool have to be considered. One aspect is the shore visibility in the frames for the co-registration. To guarantee stable areas that are large enough at larger rivers, increasing the flying height might be necessary, potentially reducing the visibility of features to track. Alternatively, cameras with wider opening angles might be needed, potentially resulting in stronger lens distortions. Furthermore, assumptions about the flow characteristics need to be made for successful filtering, which implies either some experience with image velocimetry in riverine environments or some trials to find the most suitable filtering parameters. Consideration of a suitable choice of the threshold of the statistical outlier filter is important, as well. If the filter is chosen too strictly, it can lead to the loss of valid velocity tracks, which is especially probable in rivers with complex flow patterns and a large range of velocities. Another important factor of the image velocimetry tool to consider is the impact of the choices of thresholds on processing time. On the one hand, the more often features are detected and the more frames they are tracked across, the more reliable and robust tracking results are possible because track filtering will receive a larger sample for processing. However, tracking more features across an increased number of frames also increases processing time significantly, which is especially relevant for cameras with high frame rates and image resolutions. Nevertheless, in this study the maximum processing time (for the terrestrial cameras at the Wesenitz that captured videos with lengths of about 0.5 min) was still below 5 min on an average computer.

Measuring surface velocities implies sensitivities to external impacts such as winds, waves or raindrops, potentially falsifying an already established ratio between surface and average flow velocity, i.e. velocity coefficient, due to decreasing or increasing the surface velocity depending on the wind and wave direction and velocity. However, windy and rainy conditions should be avoided using any surface velocity measurement. The accuracy and reliability of the surface velocity measurement can be improved by adding traceable particles to increase the seeding density as shown by Detert et al. (2017). In this study, only natural particles floating at the river surfaces at both study areas were used, which did not cover the entire observed cross section, leading to data gaps complicating the retrieval of discharge from the sparsely distributed velocity values.

The FlowVelo tool does not provide discharge information, yet, because discharge estimation requires additional parameters which need to be determined prior to using image velocimetry as an accurate automatic remote sensing approach. For instance, the water level and the related cross-sectional area are needed, and the velocity coefficient has to be known, which is a point of uncertainty especially at irregular river reaches. In this study, the velocity coefficient was estimated from the ADCP measurements dividing the mean velocity of the cross section with the average surface velocity. However, alternative approaches, e.g. hydraulic modelling, should be analysed in more detail in future studies to evaluate if they can support the retrieval of more suitable velocity coefficients. This becomes especially interesting due to novel possibilities of high-resolution bathymetric and topographic data, e.g. using SfM approaches for river mapping.

In this study, we introduce a remote sensing workflow for automatic flow velocity calculation and discharge estimation. The approach can be applied to terrestrial as well as aerial imagery. Thus, the importance of the acquisition scheme is secondary. However, visibility of tracked particles across the entire river cross section is relevant as indicated by the comparison of three different terrestrial cameras observing nearly the same river reach but revealing variations in the velocity estimates.

Camera movements during the video acquisition are stabilised using an automatic image co-registration method. To estimate flow velocity, particles on the water surface are detected and tracked using PTV. A feature search area is defined automatically solely relying on information about the water level and the topography of the river reach. The detected and tracked particles are filtered with cluster analysis and by making assumptions about the flow characteristics. Discharge is retrieved using the depth-averaged flow velocity and the wetted cross section, which is derived from a 3D surface model reconstructed with multi-media photogrammetry applied to UAV imagery.

Two study sites have been observed with different terrestrial cameras and with a UAV platform. Comparing the results with ADCP reference measurements reveals a high accuracy potential for surface flow velocities calculated with PTV and automatic image co-registration, especially at standard gauging setups (maximal error of 4 %). At irregular cross sections, an accuracy assessment of velocity tracking is limited due to high demands of position accuracies of the reference measurements. Discharge estimates with maximal errors of 5 % could be achieved at the standard track cross section. At irregular profiles, discharge calculation reveals significantly higher differences to reference measurements of 7 %–31 %. This is, amongst other reasons, due to incomplete velocity measurements across the entire river cross section, leading to discharge overestimation when tracks are only retrieved in the faster-flowing river region. Thus, further improvements of the tool for irregular cross sections as well as considering artificial flow seeding is advisable in future studies.

The workflow, including the provided velocity tracking tool FlowVelo, allows for a contactless measurement of spatially distributed surface velocity fields and the estimation of river discharge in previously ungauged and unmeasured regions, making it especially suitable for applications to assess flood events.

The data used in this study and the tracking tool FlowVelo are available at https://doi.org/10.25532/OPARA-32 (Eltner, 2019) and https://github.com/AnetteEltner/FlowVeloTool (Eltner, 2020), respectively.

The supplement related to this article is available online at: https://doi.org/10.5194/hess-24-1429-2020-supplement.

AE conceptualised the study, wrote the Python tool and drafted the paper. AE, HS and JG acquired, processed and analysed the data. HS and JG reviewed the draft.

The authors declare that they have no conflict of interest.

These investigations are part of the research project “extreme events in small and medium catchments (EXTRUSO)”. We are grateful for data sources provided by Andreas Kaiser and the Saxon state company for the environment and agriculture. And we thank André Kutscher for helpful input regarding the tracking toolbox. The data used in this study and the tracking software FlowVelo are available at OPARA and https://github.com/AnetteEltner/FlowVeloTool (last access: 24 March 2020), respectively. Finally, we are grateful for the reviews provided by Salvatore Manfreda and one anonymous referee that helped to improve the original paper.

This research has been supported by the European Social Fund (grant no. 100270097).

This paper was edited by Giuliano Di Baldassarre and reviewed by Salvatore Manfreda and one anonymous referee.

Adler, M.: Messungen von Durchflüssen und Strömungsprofilen mit einem Ultraschall-Doppler-Gerät (ADCP), Wasserwirtschaft, 83, 192–196, 1993.

Blois, G., Best, J. L., Christensen, K. T., Cichella, V., Donahue, A., Hovakimyan, N., and Pakrasi, I.: UAV-based PIV for quantifying water-flow processes in large-scale natural environments, In 18th International Symposium on the Application of Laser and Imaging Techniques to Fluid Mechanics, 2016.

Bradski, G.: The OpenCV Library, Dr. Dobb's Journal of Software Tools, 2000.

Brevis, W., Niño, Y., and Jirka, G. H.: Integrating cross - correlation and relaxation algorithms for particle tracking velocimetry, Exp. Fluids, 50, 135–147, 2011.

Costa, J. E., Spicer, K. R., Cheng, R. T., Haeni, F. P., Melcher, N. B., Thurman, E. M., Plant, W. J., and Keller, W. C.: Measuring stream discharge by non-contact methods: A proof-of-concept experiment, Geophys. Res. Lett., 4, 553–556, 2000.

Creutin, J. D., Muste, M., Bradley, A. A., Kim, S. C., and Kruger, A.: River gauging using PIV techniques: a proof of concept experiment on the Iowa River, J. Hydrol., 277, 182–194, 2003.

Detert, M., Johnson, E. D., and Weitbrecht, V.: Proof-of-concept for low-cost and non-contact synoptic airborne river flow measurements. Int. J. Remote Sens., 38, 2780–2807, 2017.

Dietrich, J. T.: Bathymetric Structure-from-Motion: extracting shallow stream bathymetry from multi-view stereo photogrammetry, Earth Surface Proc. Land., 42, 355–364, 2017.

Dramais, G., Le Coz, J., Camenen, B., and Hauet, A.: Advantages of a mobile LSPIV method for measuring flood discharges and improving stage-discharge curves, Journal of Hydro-Environment Research, 5, 301–312, 2011.

Eltner, A.: Dataset (video sequences and orientation information) to measure river surface flow velocities, https://doi.org/10.25532/OPARA-32, 2019.

Eltner, A.: FlowVeloTool, available at: https://github.com/AnetteEltner/FlowVeloTool, last access: 24 March 2020.

Eltner, A. and Schneider, D.: Analysis of Different Methods for 3D Reconstruction of Natural Surfaces from Parallel-Axes UAV Images, Photogramm. Rec., 30, 279–299, 2015.

Eltner, A., Kaiser, A., Castillo, C., Rock, G., Neugirg, F., and Abellán, A.: Image-based surface reconstruction in geomorphometry – merits, limits and developments, Earth Surf. Dynam., 4, 359–389, https://doi.org/10.5194/esurf-4-359-2016, 2016.

Eltner, A., Elias, M., Sardemann, H., and Spieler, D. Automatic Image-Based Water Stage Measurement for Long- Term Observations in Ungauged Catchments, Water Resour. Res., 54, 10362–10371, 2018.

Fischler, M. A. and Bolles, R. C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography, Commun. ACM, 24, 381–395, 1981.

Fujita, I., Muste, M., and Kruger, A.: Large-scale particle image velocimetry for flow analysis in hydraulic engineering applications, J. Hydraul. Res., 36, 397–414, 1998.

Fujita, I., Watanabe, H., and Tsubaki, R.: Development of a non - intrusive and efficient flow monitoring technique: The space – time image velocimetry (STIV), International Journal of River Basin Management, 5, 105–114, 2007.

Fujita, I., Notoya, Y., and Shimono, M.: Development of UAV-based river surface velocity measurement by STIV based on high-accurate image stabilization techniques, E-proceedings of the 36th IAHR World Congress, 2015.

Genç, O., Ardıçlıoğlu, M., and Necati, A.: Calculation of mean velocity and discharge using water surface velocity in small streams, Flow Meas. Instrum., 41, 115–120, 2015.

Gravelle, R.: Discharge Estimation: Techniques and Equipment. In: Geomorphological Techniques, chap. 3, Sec. 3.5, British Society for Geomorphology, 2015.

Guillén, F., Patalano, A., García, C. M., and Bertoni, J. C.: Use of LSPIV in assessing urban flash flood vulnerability, Nat. Hazards, 87, 383–394, 2017.

Gunawan, B., Sun, X., Sterling, M., Shiono, K., Tsubaki, R., Rameshwaran, P., and Knight, D. W.: The application of LS-PIV to a small irregular river for inbank and overbank flows, Flow Meas. Instrum., 24, 1–12, 2012.

Harris, C. and Stephens, M.: A Combined Corner and Edge Detector, in: Proc. of 4th Alvey Vision Conference, 147–155, 1988.

Hauet, A., Kruger, A., Krajewski, W. F., Bradley, A., Muste, M., Creuting, J.-D., and Wilson, M.: Experimental System for Real-Time Discharge Estimation Using an Image-Based Method, J. Hydrol. Eng., 13, 105–110, 2008.

Herschy, R. W.: Streamflow Measurement. CRC Press, 3rd edition, 510 pp., 2008.

James, M., Chandler, J., Eltner, A., Fraser, C., Miller, P., Mills, J., Noble, T., Robson, S., and Lane, S.: Guidelines on the use of Structure from Motion Photogrammetry in Geomorphic Research, Earth Surf. Proc. Land., 44, 2081–2084, 2019.

Kim, Y., Muste, M., Hauet, A., Krajewski, W. F., Kruger, A., and Bradley, A.: Stream discharge using mobile large-scale particle image velocimetry: A proof of concept, Water Resour. Res., 44, W09502, https://doi.org/10.1029/2006WR005441, 2008.

Koutalakis, P., Tzoraki, O., and Zaimes, G.: UAVs for Hydrologic Scopes?: Application of a Low-Cost UAV to Estimate Surface Water Velocity by Using Three Different Image-Based Methods, Drones, 3, 14, https://doi.org/10.3390/drones3010014, 2019.

Le Boursicaud, R., Pénard, L., Hauet, A., Thollet, F., and Le Coz, J.: Gauging extreme floods on YouTube: Application of LSPIV to home movies for the post-event determination of stream discharges, Hydrol. Process., 30, 90–105, 2016.

Le Coz, J., Hauet, A., Pierrefeu, G., Dramais, G., and Camenen, B.: Performance of image-based velocimetry (LSPIV) applied to flash-flood discharge measurements in Mediterranean rivers, J. Hydrol., 394, 42–52, 2010.

Le Coz, Jérôme, Patalano, A., Collins, D., Guillén, N. F., García, C. M., Smart, G. M., Bind, J., Chiaverini, A., Le Boursicaud, R. L., Dramais, G., Braud, I., and Braud, I.: Crowdsourced data for flood hydrology: Feedback from recent citizen science projects in Argentina, France and New Zealand, J. Hydrol., 541, 766–777, 2016.

Legout, C., Darboux, F., Hauet, A., Esteves, M., Renaux, B., Denis, H., and Cordier, S.: High spatial resolution mapping of surface velocities and depths for shallow overland flow, Earth Surf. Proc. Land., 73, 984–993, 2012.

Leitão, J. P., Peña-haro, S., Lüthi, B., Scheidegger, A., Moy, M., and Vitry, D.: Urban overland runo ff velocity measurement with consumer-grade surveillance cameras and surface structure image velocimetry, J. Hydrol., 565, 791–804, 2018.

Lewis, Q. and Rhoads, B.: Resolving two-dimensional flow structure in rivers using large-scale particle image velocimetry: An example from a stream confluence, Water Resour. Res., 51, 7977–7994, 2015.

Lin, D., Grundmann, J., and Eltner, A.: Evaluating Image Tracking Approaches for Surface Velocimetry with Thermal Tracers, Water Resour. Res., 55, 3122–3136, 2019.

Lowe, D.: Distinctive Image Features from Scale-Invariant Keypoints, Int. J. Comput. Vision, 60, 91–110, 2004.

Lucas, B. and Kanade, T.: An iterative image registration technique with an application to stereo vision, IJCAI'81: Proceedings of the 7th international joint conference on Artificial intelligence, 2, 674–679, 1981.

Luhmann, T., Robson, S., Kyle, S., and Boehm, J.: Close-Range Photogrammetry and 3-D Imaging, 2nd edition, De Gruyter, Berlin, Germany, 683 pp., 2014.

Merz, J.: Discharge Measurements in Low Flow Conditions With ADCP Technology – First Experiences in Nepal, Journal of Hydrology and Meteorology, 7, 40–48, 2010.

Morgenschweis, G.: Hydrometrie, Springer-Verlag Berlin Heidelberg, 582 pp., 2010.

Mulsow, C., Kenner, R., Bühler, Y., Stoffel, A., and Maas, H.-G.: Subaquatic digital elevation models from UAV-imagery. International Archives of the Photogrammetry, Remote Sensing and Spatial Information Science, XLII-2, 739–744, 2018.

Muste, M., Fujita, I., and Hauet, A.: Large-scale particle image velocimetry for measurements in riverine environments, Water Resour. Res., 44, W00D14, https://doi.org/10.1029/2008WR006950, 2008.

Muste, M., Ho, H., and Kim, D.: Considerations on direct stream flow measurements using video imagery: Outlook and research needs, J. Hydro.-Environ. Res., 5, 289–300, 2011.

Muste, M., Hauet, A., Fujita, I., Legout, C., and Ho, H. C.: Capabilities of large-scale particle image velocimetry to characterize shallow free-surface flows, Adv. Water Resour., 70, 160–171, 2014.

Patalano, A., Marcelo Garcia, C., and Rodriguez, A.: Rectification of Image Velocity Results (RIVeR): A simple and user-friendly toolbox for large scale water surface Particle Image Velocimetry (PIV) and Particle Tracking Velocimetry (PTV), Comput. Geosci., 109, 323–333, 2017.

Perks, M. T., Russell, A. J., and Large, A. R. G.: Technical Note: Advances in flash flood monitoring using unmanned aerial vehicles (UAVs), Hydrol. Earth Syst. Sci., 20, 4005–4015, https://doi.org/10.5194/hess-20-4005-2016, 2016.

Puleo, J. A., Mckenna, T. E., Holland, K. T., and Calantoni, J.: Quantifying riverine surface currents from time sequences of thermal infrared imagery, Water Resources, 48, W01527, https://doi.org/10.1029/2011WR010770, 2012.

Ran, Q. H., Li, W., Liao, Q., Tang, H. L., and Wang, M. Y.: Application of an automated LSPIV system in a mountainous stream for continuous flood flow measurements, Hydrol. Process., 30, 3014–3029, 2016.

Rublee, E, Rabaud, V., Konolige, K., and Bradski, G.: ORB: An efficient alternative to SIFT or SURF, 2011 International Conference on Computer Vision, Barcelona, 2564–2571, https://doi.org/10.1109/ICCV.2011.6126544, 2011.

Shi, J. and Tomasi, C.: Computer vision and pattern recognition, Proceedings of IEEE Computer Society Conference on CVPR, 593–600, 1994.

Sidorchuk, A., Schmidt, J., and Cooper, G.: Variability of shallow overland flow velocity and soil aggregate transport observed with digital videography, Hydrol. Process., 22, 4035–4048, 2008.

Stumpf, A., Augereau, E., Delacourt, C., and Bonnier, J.: Photogrammetric discharge monitoring of small tropical mountain rivers: A case study at Rivière des Pluies, Réunion Island, Water Resour. Res., 52, WR018292, https://doi.org/10.1002/2015WR018292, 2016.

Tauro, F., Petroselli, A., and Arcangeletti, E.: Assessment of drone-based surface fl ow observations, Hydrol. Process., 30, 1114–1130, 2016.

Tauro, F., Piscopia, R., and Grimaldi, S.: Streamflow Observations From Cameras: Large-Scale Particle Image Velocimetry or Particle Tracking Velocimetry?, Water Resour. Res., 53, 10374–10394, 2017.

Tauro, F., Tosi, F., Mattoccia, S., Toth, E., Piscopia, R., and Grimaldi, S.: Optical Tracking Velocimetry (OTV): Leveraging Optical Flow and Trajectory-Based Filtering for Surface Streamflow Observations, Remote Sensing, 10, 2010, https://doi.org/10.3390/rs10122010, 2018.

Thielicke, W. and Stamhuis, E.: PIVlab – Towards User-friendly, Affordable and Accurate Digital Particle Image Velocimetry in MATLAB, J. Open Res. Softw., 2, e30, https://doi.org/10.5334/jors.bl, 2014.

Thumser, P., Haas, C., Tuhtan, J. A., Fuentes-Pérez, J. F., and Toming, G.: RAPTOR-UAV: Real-time particle tracking in rivers using an unmanned aerial vehicle, Earth Surf. Proc. Land., 42, 2439–2446, 2017.

Tsubaki, R., Fujita, I., and Tsutsumi, S.: Measurement of the flood discharge of a small-sized river using an existing digital video recording system, J. Hydro-Environ. Res., 5, 313–321, 2011.

Welber, M., Le Coz, J., Laronne, J., Zolezzi, G., Zamler, D., Dramais, G., Hauet, A., and Salvaro, M.: Field assessment of noncontact stream gauging using portable surface velocity radars (SVR), Water Resour. Res., 52, 1108–1126, 2016.

Woodget, A. S., Carbonneau, P. E., Visser, F., and Maddock, I. P.: Quantifying submerged fluvial topography using hyperspatial resolution UAS imagery and structure from motion photogrammetry, Earth Surf. Proc. Land., 40, 47–64, 2015.